Quick Summary: Open-source AI models are revolutionizing 3D creation by making professional-grade assets accessible to everyone. Tools like Meshy AI, Point-E, and Stable Diffusion 3D generate game-ready models from text prompts, work that once required costly software. Now anyone with a GPU can build entire worlds, which takes 3D design beyond the studios.

3D image generation has exploded in recent years. Open-source AI models democratize access to capabilities that once required expensive commercial software. Developers, researchers, and creators can now build sophisticated 3D content without breaking the bank, and they don’t need to wait for corporate gatekeepers to decide what features they need.

Here’s the thing: we’re not talking about basic polygon pushers or simple mesh generators. These AI models for 3D image creation represent a fundamental shift in how we approach three-dimensional content creation. They understand geometry, lighting, materials, and spatial relationships. Traditional 3D software could never achieve this through manual processes alone.

Open-source 3D image generation AI offers accessibility and flexibility. You can modify these models and integrate them into existing workflows. You can contribute improvements back to the community. This collaborative approach has accelerated innovation far beyond what any single company could achieve working in isolation.

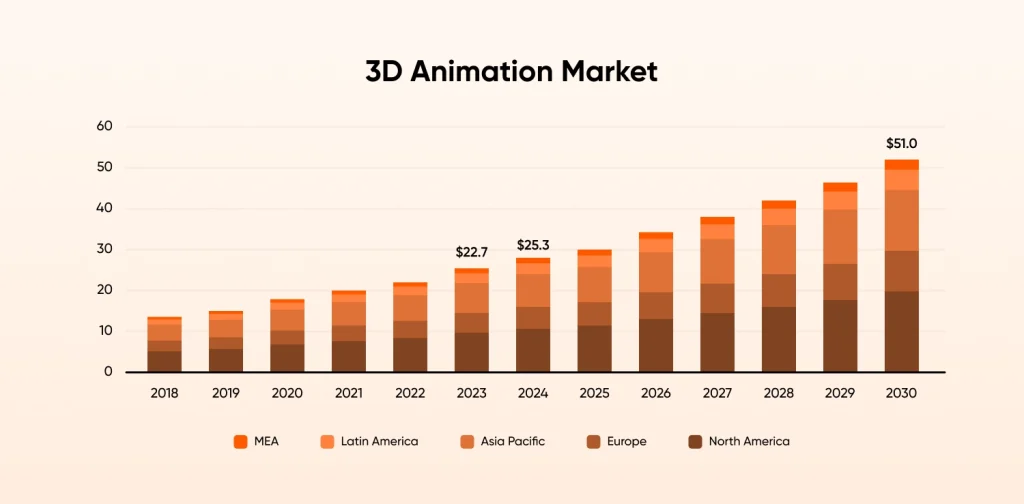

According to Grand View Research, the global 3D animation market is projected to grow at a compound annual growth rate (CAGR) of 12.3% from 2023 to 2030. This growth drives demand for accessible 3D generation tools.

How Does 3D Image Generation with AI Work?

3D diffusion models are the backbone of modern AI-driven 3D content creation. These systems learn the mathematical relationships between 2D images and their corresponding 3D structures. The process begins with training on massive datasets of 3D objects paired with multiple viewing angles, which teaches the AI to find patterns in how shapes, shadows, and surfaces behave in three-dimensional space.

The magic happens through volume rendering and neural radiance fields (NeRFs).

Instead of traditional polygon-based modeling, these AI 3D model generators create volumetric representations of objects. Think of it as teaching a computer to understand how light travels through and around objects. This creates photorealistic renders from any angle. Generative AI for 3D takes this concept further by enabling creation from text prompts or single images.
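To make the idea of volumetric rendering concrete, here is a minimal sketch of how samples along a single camera ray can be blended into one pixel, in the spirit of NeRF-style volume rendering. The function name, the sample values, and the fixed step size are illustrative assumptions; real systems predict density and color with a neural network and render millions of rays on the GPU.

```python
import math


def composite_ray(densities, colors, step):
    """Alpha-composite samples along one camera ray, front to back.

    densities: volume density (sigma) at each sample, >= 0
    colors:    (r, g, b) tuple at each sample, components in [0, 1]
    step:      distance between consecutive samples
    Returns the rendered (r, g, b) pixel and the accumulated opacity.
    """
    pixel = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet absorbed
    for sigma, color in zip(densities, colors):
        alpha = 1.0 - math.exp(-sigma * step)  # opacity of this segment
        weight = transmittance * alpha
        for channel in range(3):
            pixel[channel] += weight * color[channel]
        transmittance *= 1.0 - alpha
    return tuple(pixel), 1.0 - transmittance


# Hypothetical ray: empty space, then a dense red surface with a blue one behind it.
densities = [0.0, 0.0, 50.0, 50.0]
colors = [(0, 0, 0), (0, 0, 0), (1, 0, 0), (0, 0, 1)]
pixel, opacity = composite_ray(densities, colors, step=0.5)
```

In this toy ray the red surface absorbs almost all of the light, so the blue sample behind it contributes next to nothing to the pixel. That occlusion behavior is what lets a volumetric model render consistent views from any angle.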

The AI doesn’t just reconstruct existing 3D data. Drawing on the patterns it has learned, it can generate entirely new three-dimensional content. This approach allows unprecedented creative freedom while maintaining mathematical accuracy in the underlying geometry.

The computational requirements are significant but manageable. Most modern GPUs can handle inference for these models, while training requires more substantial hardware. The key breakthrough has been optimizing these algorithms to run efficiently on consumer-grade equipment, using tooling built on Python and CUDA. This makes professional-quality 3D generation accessible to individual creators and small teams.

Best Open Source AI Models to Generate 3D Images

1. Stable Diffusion 3D

Stable Diffusion 3D extends the popular 2D image generation model into three-dimensional space. This AI for 3D rendering approach leverages extensive training data from Stable Diffusion’s 2D model. It understands how objects should appear in 3D space. The model generates multi-view images that can be reconstructed into 3D models.

Its main strengths are style adaptation and imaginative design options. The model can also generate 3D models from a single image, which opens up many possibilities for creative work.

Key Benefits:

- Style adaptation abilities for distinctive visual results

- Community-driven development with regular updates and improvements

- Extensive tailoring options through LoRA and fine-tuning

- Open-source accessibility with no licensing restrictions

- Lower computing needs than other high-quality models

2. Point-E by OpenAI

Point-E takes a different approach by generating 3D point clouds from text descriptions. This model is designed for speed and efficiency. Its authors report that it produces a 3D representation in about one to two minutes on a single GPU, where optimization-based methods can need hours. The two-stage process first generates a synthetic view of the object. Then it produces a point cloud that matches that view.

The strength of Point-E lies in its practical applications. Point clouds are widely used in autonomous vehicles, robotics, and AR/VR applications. Companies that need AI model fine-tuning development services often choose Point-E for this reason. Its output is well suited to testing ideas and early design work.
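For readers who have not worked with point clouds before, the sketch below shows how simple the underlying data is: a list of positions with colors, written out here in the ASCII PLY format that many 3D viewers can open. The function name and the three sample points are assumptions for illustration. This is not Point-E's own export code.

```python
def point_cloud_to_ply(points):
    """Serialize colored points to an ASCII PLY string.

    points: iterable of (x, y, z, r, g, b), with colors as 0-255 integers.
    """
    points = list(points)
    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(points)}",
        "property float x",
        "property float y",
        "property float z",
        "property uchar red",
        "property uchar green",
        "property uchar blue",
        "end_header",
    ]
    body = [
        f"{x:.6f} {y:.6f} {z:.6f} {int(r)} {int(g)} {int(b)}"
        for x, y, z, r, g, b in points
    ]
    return "\n".join(header + body) + "\n"


# Hypothetical three-point cloud, standing in for a model's output.
cloud = [
    (0.0, 0.0, 0.0, 255, 0, 0),
    (1.0, 0.0, 0.0, 0, 255, 0),
    (0.0, 1.0, 0.0, 0, 0, 255),
]
ply_text = point_cloud_to_ply(cloud)
```

Because a point cloud carries no faces or topology, it is cheap to generate and transmit, which is part of why it suits robotics and AR/VR pipelines.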

Key Benefits:

- Robotics integration for autonomous mapping and navigation

- Lightweight design requiring minimal GPU memory

- Fast generation, with results in about one to two minutes on a single GPU

- AR/VR compatibility with native point cloud support

- Scalable deployment suitable for edge computing devices

3. DreamFusion by Google Research

DreamFusion, developed by Google Research in 2022, takes a transformative approach to text-to-3D synthesis. This AI for 3D model generation leverages the power of 2D diffusion models to create detailed three-dimensional objects from simple text descriptions. The system uses a text-to-image generative model called Imagen to optimize a 3D scene through a novel technique called Score Distillation Sampling (SDS).

The breakthrough lies in its clever workaround for the lack of large-scale 3D training data. Instead of training on 3D assets, it uses a pretrained 2D text-to-image diffusion model to guide text-to-3D synthesis. This innovation allows the system to infer 3D structure from 2D knowledge.
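For readers who want the formula behind SDS, the DreamFusion paper writes the gradient used to update the 3D scene roughly as below. Here θ stands for the parameters of the 3D scene (a NeRF), x = g(θ) is an image rendered from a random camera, ε is Gaussian noise, z_t is the noised rendering at timestep t, y is the text prompt, ε̂_φ is the frozen 2D diffusion model's noise prediction, and w(t) is a timestep-dependent weight. Treat this as a summary of the idea rather than a complete derivation.

```latex
\nabla_\theta \mathcal{L}_{\mathrm{SDS}}\bigl(\phi,\, x = g(\theta)\bigr)
  \triangleq
  \mathbb{E}_{t,\epsilon}\!\left[
    w(t)\,\bigl(\hat{\epsilon}_\phi(z_t;\, y,\, t) - \epsilon\bigr)\,
    \frac{\partial x}{\partial \theta}
  \right],
\qquad
z_t = \alpha_t\, x + \sigma_t\, \epsilon
```

Intuitively, the 2D model says how the noisy rendering should change to look more like the prompt, and that signal is pushed back through the renderer into the 3D parameters. No 3D training data enters the loop.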

Key Benefits:

- SDS technique allows 3D generation without 3D training data

- Neural Radiance Fields (NeRF) integration for photorealistic 3D rendering

- Text-driven creativity allowing complex scene descriptions and object generation

- Research foundation with Google’s backing and extensive academic validation

- Community implementations available through open-source alternatives like Stable DreamFusion

Bonus Free AI Models for 3D Image Generation

4. Meshy AI

Meshy AI is a free platform for generating 3D models from either images or prompts. It combines multiple AI techniques to create detailed 3D meshes with realistic textures and materials. It is powerful because it understands complex geometric relationships. Meshy AI generates models that maintain structural integrity across different viewing angles.

The platform uses advanced neural networks trained on diverse 3D datasets. This allows it to handle everything from organic creatures to architectural elements. Meshy AI excels at building game-ready assets and offers multiple output formats that work with popular 3D software. For organizations working with AI software development companies, Meshy provides API integration and customization options.

Key Benefits:

- Multiple export formats, including FBX, OBJ, and glTF

- Game-ready asset generation with proper topology and UV mapping

- API integration for smooth workflow automation

- Batch processing capabilities for large-scale content creation

- Texture synthesis with PBR material support

5. DALL-E 3 with 3D Extensions

DALL-E 3 is mainly used for making 2D images, but new features now let it handle 3D as well. This works by showing the object from many angles and using depth information. With its strong knowledge of objects and scenes, DALL-E 3 does well at making creative 3D shapes with high artistic quality. Being part of OpenAI’s tools makes it even more useful.

This is especially true for developers already working with GPT models. Developer teams planning to create AI agents using GPT integration find DALL-E 3’s 3D extensions useful, because they generate visual content that complements conversational AI.

Key Benefits:

- Multi-view consistency that ensures coherent 3D representations

- GPT ecosystem integration for seamless AI workflow automation

- High artistic quality with superior understanding of complex concepts

- Concept visualization for abstract ideas and creative projects

- Enterprise API support with robust scaling capabilities

6. Hyper3D

Hyper3D is a new-generation 3D AI image generator. Its main focus is on real-time generation and interactive editing. This model uses advanced neural architectures to generate 3D content that can be modified and refined in real time. The approach combines generative modelling with interactive editing tools.

The model’s main strengths are its fast updates and easy-to-use layout. Users can change generated models right away and see the updates instantly in the 3D view. For organizations exploring AI programming languages and real-time applications, Hyper3D offers excellent scalability options and performance optimization.

Key Benefits:

- Instant view updates while editing designs in real time

- Build 3D models easily with tools that feel natural to use

- Runs smoothly for a better and faster experience

- Lets multiple people build and edit 3D projects together

- Works well on both phones and computers without issues

Comparative Table of AI Models for 3D Image Generation

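| Model | Main Output Format(s) | Key Benefits | Unique Differentiators |
| --- | --- | --- | --- |
| Stable Diffusion 3D | Multi-view images for 3D | Style adaptation; LoRA and fine-tuning options; community-driven updates | Builds on Stable Diffusion’s 2D training data |
| Point-E (OpenAI) | 3D point clouds | Fast generation; minimal GPU memory; native point cloud support for AR/VR | Two-stage pipeline: synthetic view first, then point cloud |
| DreamFusion (Google Research) | Neural Radiance Fields (NeRF) | Photorealistic rendering; complex text-driven scenes; open-source community implementations | Score Distillation Sampling requires no 3D training data |
| Meshy AI | OBJ, FBX, glTF | Game-ready topology and UV mapping; PBR textures; batch processing | API integration for workflow automation |
| DALL-E 3 (3D Extensions) | Multi-view 3D images | Multi-view consistency; high artistic quality; concept visualization | Integration with the GPT ecosystem |
| Hyper3D | Real-time 3D mesh/images | Instant view updates; collaborative editing; runs on phones and computers | Real-time interactive editing |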

How to Choose the Right AI Tool for 3D Image Generation?

Step-1 Identify Your Primary Use Case

The choice between these open-source AI models depends on your specific requirements. If you need rapid prototyping and don’t mind working with point clouds, Point-E provides unmatched speed. For game development and interactive applications, Meshy AI gives comprehensive asset generation capabilities.

Take your target output format into consideration. Teams working with traditional 3D software will benefit from models that produce standard mesh formats. If you’re building an AR/VR application, point cloud outputs might be more suitable. The decision comes down to whether you prioritize quality, speed, or specific format requirements.

Step-2 Evaluate Technical Requirements

Hardware constraints play an important role in model selection. Stable Diffusion 3D and DALL-E extensions require substantial GPU memory and processing power, while Point-E can run on modest hardware configurations. Organizations planning to build and train custom AI models should account for the computational resources needed for both inference and training.

Integration complexity varies significantly between models. Some require extensive preprocessing pipelines. Others offer simple API interfaces. Teams with existing AI infrastructure should evaluate how easily each model can be incorporated. Consider whether additional AI model fine-tuning capabilities are needed.

Step-3 Consider Long-term Maintenance

Model updates and community support differ substantially between options. Open-source models like Stable Diffusion 3D have extensive community backing and regular updates. Commercial platforms like Meshy AI give enterprise-grade support but may have usage restrictions. The ability to customize models becomes essential for specialized applications.

Some models offer extensive AI model fine-tuning capabilities. Others work best with their pre-trained weights. Consider whether your use case requires model tailoring. Determine if standard outputs meet your needs. The choice often impacts long-term scalability and maintenance requirements.

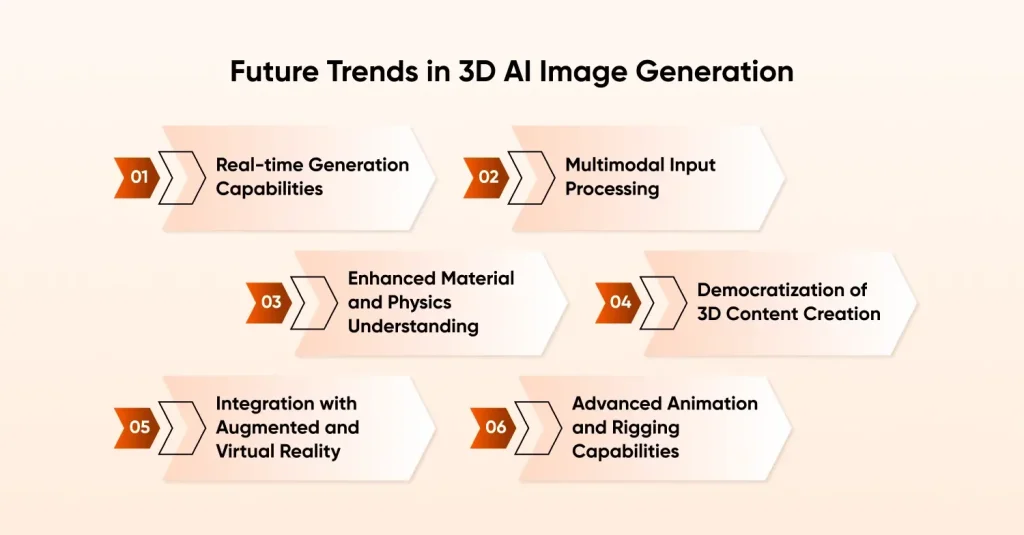

Future Trends in 3D AI Image Generation

Real-time Generation Capabilities

The next frontier is generating 3D models from images in real time. Current models like Hyper3D are already pushing boundaries with interactive generation, and we expect even faster capabilities. This development will enable live content creation, interactive design tools, and immersive creation experiences.

Neural network optimization techniques are reducing computational requirements while maintaining output quality. Techniques like quantization, pruning, and knowledge distillation are making these models more efficient. The goal is to achieve real-time generation on mobile devices and embedded systems.
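As a rough illustration of quantization, one of the techniques named above, the sketch below maps floating-point weights to 8-bit integers and back. It uses simple symmetric per-tensor scaling. The function names and sample weights are assumptions, and production toolchains use more careful schemes such as per-channel scales and calibration data.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the int8 values."""
    return [q * scale for q in quantized]


weights = [0.42, -1.27, 0.05, 0.9]  # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now needs one byte instead of four, at the cost of a rounding error of at most half the scale per weight. That trade is what makes smaller devices a realistic target.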

Multimodal Input Processing

Future models will smoothly integrate images, text, sketches, and even audio inputs to generate 3D content. This multimodal method will make 3D creation more intuitive and more accessible to non-technical users. Businesses that hire AI developers will benefit by offering users the ability to describe an object verbally while sketching rough shapes, a combination that could revolutionize design workflows.

Enhanced Material and Physics Understanding

Next-generation models will understand material properties, environmental interactions, and physics simulations. This advancement will enable generation of 3D objects that not only look realistic but behave realistically. They will function properly in simulated environments. Integration with physics engines will create more believable virtual worlds.

Democratization of 3D Content Creation

The barrier to entry for 3D content creation continues to drop. Future models will feature simplified interfaces that require no technical expertise. This makes 3D generation accessible to designers, artists, and content creators without programming backgrounds. This democratization will lead to an explosion of creative applications.

Integration with Augmented and Virtual Reality

Direct integration with AR/VR platforms will allow users to generate and edit 3D content within immersive environments. This capability will transform how we approach spatial design, interactive storytelling, and architectural visualization. Real-time generation within VR spaces will become standard practice.

Advanced Animation and Rigging Capabilities

Future models will generate not just static 3D objects but complete animated characters, including skeletal systems and proper rigging. This advancement will bridge the gap between 3D generation and animation, allowing rapid prototyping of games, interactive media, and animated content for films.

Tips and Best Practices for 3D Image Generation

Optimize Your Prompts for Better Results

Successful 3D image generation depends on prompt engineering. Specific, detailed descriptions give better results than vague requests. Include information about lighting conditions, materials and viewing angles. For example, “metallic robot with reflective chrome finish in studio lighting” generates more consistent results than simply “robot.”

Experiment with different types of prompt structures and keywords. Some models respond better to artistic terminology. Others prefer technical descriptions for common object types.
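One lightweight way to keep prompts specific and repeatable is to assemble them from named parts. The helper below is a hypothetical sketch. The function name and the "with / in / seen from" phrasing are assumptions, and different models may respond better to other structures.

```python
def build_prompt(subject, material=None, lighting=None, view=None):
    """Join the subject with optional detail clauses into one prompt string."""
    parts = [subject]
    if material:
        parts.append(f"with {material}")
    if lighting:
        parts.append(f"in {lighting}")
    if view:
        parts.append(f"seen from {view}")
    return " ".join(parts)


prompt = build_prompt(
    "metallic robot",
    material="reflective chrome finish",
    lighting="studio lighting",
)
```

Keeping material, lighting, and viewpoint as separate arguments makes it easy to vary one detail at a time and compare the results.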

Preprocessing Input Images

When using image-to-3D capabilities, the quality of the input image directly affects the quality of the output. High-resolution images with good lighting and a clearly defined subject produce superior results. Remove backgrounds when possible and make sure the subject is evenly lit.

Consider the limitations of single-view reconstruction. Models must make assumptions about hidden surfaces. Providing multiple viewpoints and detailed descriptions helps guide the generation process. The goal is to give the AI enough information to make accurate inferences about unseen portions of the object.

Post-processing and Refinement

Generated 3D models usually require refinement in traditional 3D software. Texture optimization, mesh cleanup, and topology improvements are common post-processing steps. Understanding these workflows helps you choose models that produce outputs compatible with your existing pipeline.

Plan for iteration cycles. Initial generations rarely meet final quality requirements. Budget time for refinement and regeneration. The most successful projects combine AI generation with traditional 3D techniques. Use AI for rapid prototyping and human expertise for final polish.

Hardware Optimization and Resource Management

Understanding your hardware limitations is important for efficient 3D AI generation. Different models have different memory requirements: some need 8GB or more of VRAM, while others work with 4GB. Monitor GPU utilization and adjust batch sizes accordingly to prevent out-of-memory errors.
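The batch-size advice can be automated with a simple backoff loop. The sketch below is a minimal illustration under stated assumptions: `generate_batch` is a hypothetical callable standing in for whatever model you run, and it is assumed to raise Python's built-in `MemoryError` when a batch does not fit. Real GPU frameworks raise their own exception types, so the `except` clause would need to match your stack.

```python
def generate_with_backoff(generate_batch, prompts, batch_size=8):
    """Run generate_batch over prompts, halving the batch size on MemoryError."""
    results = []
    start = 0
    while start < len(prompts):
        batch = prompts[start:start + batch_size]
        try:
            results.extend(generate_batch(batch))
            start += len(batch)
        except MemoryError:
            if batch_size == 1:
                raise  # even a single item does not fit
            batch_size = max(1, batch_size // 2)
    return results


# Stand-in generator: pretends the GPU can only hold two items at once.
def fake_generate_batch(batch):
    if len(batch) > 2:
        raise MemoryError("simulated out-of-memory")
    return [f"model-for:{p}" for p in batch]


outputs = generate_with_backoff(
    fake_generate_batch, ["chair", "lamp", "desk", "vase", "mug"]
)
```

The loop keeps the smaller batch size once it finds one that works, so a long job pays for the failed attempts only once.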

Consider using cloud-based solutions for heavy computational tasks. Services like AWS, Google Colab, or dedicated AI platforms can give access to high-end GPUs. This method is particularly valuable for teams testing multiple models or handling large-scale generation tasks, especially when you need to build and train AI model configurations from scratch.

Version Control and Asset Management

Implement proper version control for your 3D assets and generation parameters. Keep track of successful prompt combinations, model settings, and post-processing workflows. This documentation becomes invaluable when reproducing specific results or scaling projects.

Organize generated assets with clear naming conventions and metadata. Include information about the parameters used, the generating model, and any post-processing applied. This organization helps maintain consistency across projects and allows asset reuse.
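A naming convention and a metadata record can be as simple as the sketch below. The filename pattern, the field names, and the sample values are illustrative assumptions rather than an established standard, so adapt them to your own pipeline.

```python
import json
import re


def slugify(text):
    """Lowercase the text and replace runs of other characters with hyphens."""
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")


def asset_name(model, subject, version):
    """Build a filename stem such as 'point-e_metallic-robot_v003'."""
    return f"{slugify(model)}_{slugify(subject)}_v{version:03d}"


def asset_metadata(model, prompt, settings, post_processing):
    """Return a JSON string to store next to the asset file."""
    record = {
        "model": model,
        "prompt": prompt,
        "settings": settings,
        "post_processing": post_processing,
    }
    return json.dumps(record, indent=2, sort_keys=True)


name = asset_name("Point-E", "Metallic Robot", 3)
sidecar = asset_metadata(
    "Point-E",
    "metallic robot with reflective chrome finish in studio lighting",
    {"seed": 42},          # hypothetical generation settings
    ["mesh cleanup"],      # hypothetical post-processing steps
)
```

Storing the JSON next to each asset under the same stem lets you commit both to version control and reproduce a result from its recorded prompt and settings.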

Quality Assurance and Testing Protocols

Establish testing protocols for generated 3D content. Check for common issues such as broken mesh integrity, topology problems, and misaligned textures. Automated testing tools can help find structural issues before manual review, and dedicated QA engineers can save time in the quality assurance process.

Test generated models in target environments early in the process. Whether your models are destined for games, VR applications, or 3D printing, early testing reveals format compatibility issues. It also shows performance constraints that might require adjustments to your generation pipeline.
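As an example of what an automated structural check might look like, the sketch below inspects a triangle mesh for out-of-range indices, degenerate faces, and edges that are not shared by exactly two faces. The function name and report strings are assumptions, and a real pipeline would likely rely on an established mesh library for more thorough checks.

```python
from collections import Counter


def check_mesh(vertices, faces):
    """Return a list of human-readable problems found in a triangle mesh.

    vertices: list of (x, y, z) tuples
    faces:    list of (i, j, k) vertex-index triples, 0-based
    """
    problems = []
    edge_counts = Counter()
    for n, face in enumerate(faces):
        if any(i < 0 or i >= len(vertices) for i in face):
            problems.append(f"face {n} references a missing vertex")
            continue
        if len(set(face)) < 3:
            problems.append(f"face {n} is degenerate")
            continue
        for a, b in ((face[0], face[1]), (face[1], face[2]), (face[2], face[0])):
            edge_counts[(min(a, b), max(a, b))] += 1
    # In a closed (watertight) manifold mesh every edge borders exactly two faces.
    open_edges = sum(1 for count in edge_counts.values() if count == 1)
    crowded_edges = sum(1 for count in edge_counts.values() if count > 2)
    if open_edges:
        problems.append(f"{open_edges} boundary edges (mesh is not watertight)")
    if crowded_edges:
        problems.append(f"{crowded_edges} non-manifold edges")
    return problems


# A tetrahedron is closed; removing one face opens a hole.
verts = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1)]
tetra = [(0, 2, 1), (0, 1, 3), (1, 2, 3), (0, 3, 2)]
closed_report = check_mesh(verts, tetra)
open_report = check_mesh(verts, tetra[:3])
```

A watertight result matters most for 3D printing, while games and VR can often tolerate open meshes, so which findings count as failures depends on the target environment.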

Why CMARIX: Your Strategic Ally in 3D AI Innovation?

CMARIX understands that 3D AI generation represents the next frontier in digital content creation. As businesses increasingly demand immersive experiences, having a technology specialist who comprehends both the potential and complexities of these emerging tools becomes critical.

Our expertise in AI model fine-tuning development services positions us uniquely to help companies integrate these open-source 3D models into their existing workflows. We don’t just implement off-the-shelf solutions; we customize and optimize these models for specific business requirements.

Whether you need to adapt Meshy AI for your game development pipeline or optimize Point-E for your AR applications, CMARIX provides the technical depth to make it happen. We bridge the gap between leading-edge AI research and practical business applications.

Conclusion

The landscape of open-source AI models for 3D image generation has matured rapidly, offering creators unprecedented access to professional-quality tools. These six models represent different approaches to the same fundamental challenge: transforming human creativity into three-dimensional digital reality. What makes this moment exciting is how widely accessible the technology has become.

Tools of this kind were previously accessible only to large studios and research institutions. Individual creators can now generate complex 3D content that would once have required teams of skilled artists, and work that took weeks can now be completed far more quickly. The barrier to entry has dropped dramatically, while the quality ceiling continues to rise. The future belongs to creators who adopt these tools while understanding their limitations and capabilities.

The next generation of 3D content will emerge from the intersection of AI assistance and human creativity. For businesses looking to implement these technologies at scale, collaborating with an experienced AI software development company helps ensure successful integration and optimal results. The tools are here, they’re accessible, and they’re powerful enough to transform your creative vision into reality.

Frequently Asked Questions For 3D AI Image Generation

How to Generate 3D Model From Images Using Open-Source AI?

Install your chosen model locally or use a cloud platform like Google Colab. Provide high-quality input images for the best results. Most models require basic Python knowledge and GPU access.

Can I Use AI to Generate 3D Models?

Yes, multiple open-source AI models like Meshy AI and Point-E generate professional 3D models from text or images. These tools work on consumer hardware with modern GPUs.

Why Use Open-Source AI Models for 3D Generation?

Open-source models provide cost-free access, full customization capabilities and no licensing restrictions. They offer enterprise-level quality without expensive software subscriptions.

What Are the Best Open-Source AI Models for 3D Generation?

Meshy AI excels at game assets, Point-E offers speed, and Stable Diffusion 3D provides artistic control. Choose based on your specific output requirements and technical constraints.

Can I Use These Models for Commercial 3D Design Work?

Most open-source 3D AI models allow commercial use without restrictions. Always verify specific licensing terms, as some models like DALL-E 3 have usage limitations.