Quick Summary: This guide explains how to create an AI system by covering use-case selection, data strategy, model choice, infrastructure planning, integration, compliance, and deployment. It focuses on practical steps that drive measurable business value in 2026 and beyond.

For any organization looking to move ahead in 2026 and beyond, incorporating AI in some form will become part of its strategy. Whether it is automating advanced workflows, analyzing large data streams, or creating intelligent products and decision-making systems, AI has already proven to be a core competency rather than a specialist domain. However, creating your own AI solution is not only about adopting an AI model or a new tool. It is about having a clear business objective, the right data strategy and system architecture, and a smooth shift from proof of concept to production.

This guide provides a step-by-step process for enterprises that want to know how to create an AI system.

We will discuss real-world considerations, including use-case selection, data availability, model options, infrastructure, integration, and long-term scalability, so that organizations can move past the testing stage and build AI systems that deliver viable returns and measurable business impact in 2026 and beyond.

Who this guide is for

- Business executives assessing AI as a fundamental means of increasing revenue, productivity, or decision-making quality

- Product, engineering, and data teams moving AI from pilot testing to a production environment

- Organizations developing custom AI solutions with deeper functionality than merely automating routine tasks

- Decision makers accountable for the return on investment (ROI), scalability, and long-term use of their AI systems

Understanding the Basics of AI: Types of Artificial Intelligence Solutions

Artificial intelligence allows machines to execute tasks that typically require human intelligence. These tasks include recognizing patterns, making decisions, processing language, and tackling complex problems. Generally, AI systems fall into three types: ANI, AGI, and ASI.

- Artificial Narrow Intelligence (ANI): Also called Weak AI, this type excels at specific tasks. Virtual assistants like Siri, Netflix recommendation engines, and chatbots fall into this category. ANI systems power most current commercial applications.

- Artificial General Intelligence (AGI): This conceptual Strong AI would match human-level intelligence across all domains. AGI could reason, solve problems, and learn new tasks without extensive retraining. It remains a research goal rather than an existing technology, although advances in machine learning continue to narrow the gap.

- Artificial Superintelligence (ASI): This concept of the future represents AI that surpasses human intelligence across all fields. ASI remains theoretical yet drives important conversations about AI ethics and safety.

Most businesses today work with ANI systems. These focused tools deliver measurable results without requiring bleeding-edge technology. Get professional AI consulting services to get the most out of your AI investments.

The Global AI Landscape: Market Overview and Trends

Market Growth Path

Here are some important artificial intelligence statistics. The AI software market shows explosive growth across all sectors:

| Year | Market Size | Key Milestone |
|---|---|---|
| 2024 | $638 billion | 78% of companies use AI in at least one function |
| 2025 | $757.58 billion | Generative AI reaches 75% adoption rate |
| 2030 | $826.7 billion to $1.24 trillion | Expected CAGR of 26-33% |
| 2034 | $3.68 trillion | Healthcare AI grows at 36.8% CAGR |

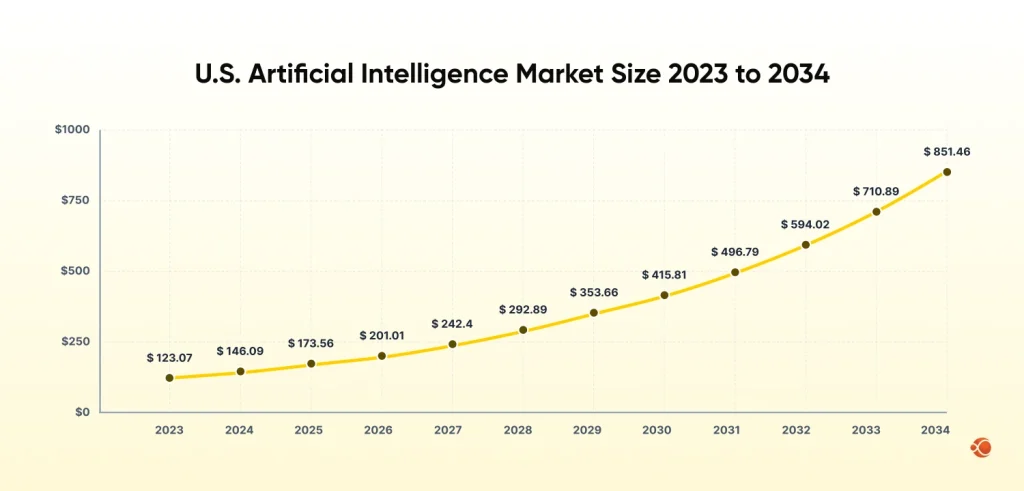

North America leads with a 36.9% market share in 2024, though Asia-Pacific shows the fastest growth at a 19.8% CAGR. The United States alone accounts for $146 billion in AI market value, projected to grow to $851.46 billion by 2034.

What are the Critical Elements To Create An AI System

1. High-Quality Data

Data forms the foundation of every AI system. Success depends on:

- Volume: Complex tasks usually need thousands to millions of examples to train an accurate model.

- Quality: Clean, accurate, and representative data is better than large amounts of inaccurate data. Duplicate records need to be removed, null values need to be handled, and inaccurate records need to be corrected systematically.

- Relevance: Data must reflect the practical situations in which your AI will operate. Outdated or biased data produces unreliable systems.

- Diversity: Include edge cases and variations. Homogeneous data creates AI that fails when conditions change.

2. Computing Resources

The development of AI requires a lot of computing resources, particularly during the training process:

- Development Phase: Most small AI projects can be developed on standard personal computers or workstations. Complex deep learning projects will likely require dedicated GPUs (Graphics Processing Units), because GPUs excel at running many calculations in parallel.

- Production Phase: For deployment, cloud computing services such as AWS (Amazon Web Services), Google Cloud, and Microsoft Azure provide the most flexible computing resources. Because capacity can be adjusted to usage, you can rent more resources during heavy training periods and fewer during lighter ones.

- Expense Factors: The cost of GPUs can range from a few hundred to a few thousand dollars per month. Cloud computing offers flexibility but requires ongoing monitoring to manage costs.

3. Development Tools and Frameworks

| Category | Technology / Concept | Description |
|---|---|---|
| Machine Learning Frameworks | TensorFlow | Google’s flexible platform for building machine learning models. |
| | PyTorch | Facebook’s framework that’s popular for both research and production. |
| | Scikit-learn | Great for implementing traditional machine learning algorithms. |
| Other Programming Languages | R | Often used for statistical analysis and academic research. |
| | Julia | Focused on high-performance computing and numerical analysis. |
| | Java/C++ | Ideal for production systems where maximum speed is required. |
| No-Code Platforms | Google AutoML | Makes it easy for businesses to create AI models without needing coding skills. |
| | Microsoft Azure Machine Learning | A platform that allows users to build and deploy machine learning models. |
| | IBM Watson | Offers AI tools and services tailored to business needs. |
| | Amazon SageMaker | Provides everything needed to build and deploy machine learning models. |
| Domain Expertise | High-impact use cases | Identifying key areas where AI can have a significant impact. |
| | Success metrics | Setting clear, measurable goals to track AI project success. |
| | Data collection strategies | Planning how to gather the right data for model training. |
| | Model output evaluation | Ensuring that AI models’ results make sense in real-world applications. |
| | Compliance with industry regulations | Ensuring AI models meet industry standards and legal requirements. |

Step-by-Step Process: How to Create an AI System from Scratch

Phase 1: Problem Definition and Feasibility Assessment

The first step in AI MVP development is to identify the specific problem your AI application will solve. An ill-defined problem statement leaves the application unable to resolve any particular issue and makes it hard to tell afterwards whether it succeeded. When writing your AI project proposal, state clearly which business function (or part of one) you are trying to change and which decision you plan to automate.

Key Questions to Answer:

- What specific pain point will this AI solve? (e.g., “Reduce customer churn by 20%”, not “Improve customer experience”)

- What is the current manual process cost per transaction?

- Do you have historical data representing the problem space?

- What is the minimum accuracy threshold for business viability? (e.g., 85% precision for fraud detection)

Feasibility Checklist:

- Data Availability: Do you have 10,000+ labeled data points available for supervised learning?

- Complexity Assessment: Can rules-based automation solve this problem more cheaply than AI?

- Constraint Mapping: Have you established budget and time limits (MVP vs. production) and regulatory requirements (GDPR, HIPAA)?

Phase 2: Data Architecture and Preparation

Next, for any successful AI system implementation, the quality of the training data is essential. This phase usually requires 60-70% of the development time.

Data Collection Strategy: Source data from multiple channels so that the dataset is diverse:

- Internal databases (CRM, ERP systems, transaction logs, etc.)

- IoT sensor data

- User engagements (clickstream data, support requests, etc.)

Data Cleaning

| Step | Data Preprocessing Task | Tools You Can Use |
|---|---|---|
| 1 | Remove duplicate records | Excel, Pandas, SQL |
| 2 | Handle missing values | Pandas, NumPy, Excel |
| 3 | Detect and treat outliers | Pandas, NumPy, SciPy |
| 4 | Standardize data formats | Pandas, Python datetime |
| 5 | Validate data consistency | Pandera, Great Expectations |
| 6 | Scale numerical features | Scikit-learn, Pandas |
| 7 | Verify labels and bias | Scikit-learn, Fairlearn |
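As a rough illustration of steps 1 to 3, here is a minimal sketch in plain Python. It uses only the standard library so it runs anywhere; in practice the tools listed in the table (such as Pandas) would usually do this work. The field names `customer_id` and `amount`, the median fill, and the 1.5 × IQR outlier rule are assumptions chosen for the example, not requirements.

```python
from statistics import median, quantiles

def clean_records(records, key="customer_id", value="amount"):
    """Deduplicate by `key`, fill missing `value`s with the median, clip outliers."""
    # Step 1: remove duplicates, keeping the first record seen for each key.
    seen, unique = set(), []
    for row in records:
        if row[key] not in seen:
            seen.add(row[key])
            unique.append(dict(row))

    # Step 2: fill missing values with the median of the observed values.
    observed = [r[value] for r in unique if r[value] is not None]
    fill = median(observed)
    for r in unique:
        if r[value] is None:
            r[value] = fill

    # Step 3: clip outliers to the 1.5 * IQR fences.
    q1, _, q3 = quantiles([r[value] for r in unique], n=4, method="inclusive")
    low, high = q1 - 1.5 * (q3 - q1), q3 + 1.5 * (q3 - q1)
    for r in unique:
        r[value] = min(max(r[value], low), high)
    return unique

raw = [
    {"customer_id": 1, "amount": 10.0},
    {"customer_id": 1, "amount": 10.0},   # duplicate
    {"customer_id": 2, "amount": None},   # missing value
    {"customer_id": 3, "amount": 12.0},
    {"customer_id": 4, "amount": 11.0},
    {"customer_id": 5, "amount": 500.0},  # outlier
]
cleaned = clean_records(raw)
```

Whether to clip, drop, or keep outliers depends on the use case; in fraud detection, for example, the extreme values may be exactly what the model needs to see.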

Data Validation:

Next, it is important to split your data before training:

- Training Set (70-80%, Pattern Detection): This is the largest part of your data and the foundation for your model’s learning. From the training set, the model learns how inputs relate to outputs by detecting patterns, relationships, and trends. The quality of your training set directly affects your model’s ability to learn the problem.

- Validation Set (10-15%, Parameter Tuning): The validation set is used to tune the model before it is exposed to the final test data. It guides the adjustment of hyperparameters, including the learning rate, the number of layers, and the regularization strength. This helps avoid overfitting, where the model performs well on the training data but poorly on new data.

- Test Set (10-15%, Unbiased): The test set is used only once, after training and validation are complete. It provides an unbiased evaluation of the model’s real-world performance. Since the model has never seen this data before, test results give the most trustworthy picture of accuracy, precision, and reliability.
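The three-way split can be sketched as follows. This is a minimal standard-library version, assuming a 70/15/15 ratio and a fixed random seed for reproducibility; the function name `split_dataset` is hypothetical.

```python
import random

def split_dataset(rows, train=0.70, val=0.15, seed=42):
    """Shuffle once, then cut into train / validation / test partitions."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)  # seeded so the split is reproducible
    n_train = round(len(rows) * train)
    n_val = round(len(rows) * val)
    return (
        rows[:n_train],
        rows[n_train:n_train + n_val],
        rows[n_train + n_val:],        # whatever remains becomes the test set
    )

train_set, val_set, test_set = split_dataset(range(1000))
```

A random shuffle suits data whose rows are independent of each other. For time-dependent data, a chronological split is usually the safer choice.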

Implement data lineage tracking to trace the transformation of raw data into the model’s training data.

Phase 3: Different Technologies Used to Build AI Systems

| Programming Language | Ideal AI System Use Case |
|---|---|
| Python | Used to create AI systems quickly, especially for NLP, vision, and general ML tasks |
| R | Helpful for AI systems focused on statistical modeling and research |
| Julia | Chosen to build AI systems that need heavy numerical computing and simulations |
| C++ | Preferred for AI systems requiring real-time inference and low latency |
| Java / Scala | Commonly used to create AI systems integrated into enterprise and big data platforms |

Infrastructure Decisions:

- Cloud vs. On-Premise: Cloud (AWS/Azure/GCP) offers scalability but with ongoing expenses. On-premises is for highly confidential information or applications that require a low (<10ms) response time.

- Compute Needs: Deep learning workloads depend on GPU availability (for example, NVIDIA A100s or V100s), whereas conventional machine learning typically runs well on CPU clusters.

- Storage: Data lakes (S3, Azure Data Lake) for unstructured information. Data warehouses (Snowflake, BigQuery) for structured analysis.

Phase 4: Algorithm Construction and Model Training

Select algorithms based on your problem:

| Problem Type | Algorithm Options | When to Use |
|---|---|---|
| Classification (e.g., spam detection) | Random Forest, SVM, Logistic Regression, Neural Networks | Random Forest for tabular data; Neural Networks for image/text |
| Regression (e.g., price prediction) | Linear Regression, XGBoost, LSTM networks | XGBoost for structured data; LSTM for time series |
| Clustering (e.g., customer segmentation) | K-Means, DBSCAN, Hierarchical Clustering | DBSCAN for irregular cluster shapes; K-Means for spherical clusters |
| Natural Language Processing | Transformer models (BERT, GPT), LSTM, RNNs | Transformers for understanding context; LSTM for sequence generation |
| Computer Vision | CNN (ResNet, YOLO), Vision Transformers | YOLO for real-time object detection; ResNet for image classification |

Training Process:

- Baseline Model: Start with a basic model, such as Logistic Regression or Random Forests. This provides a foundation to gauge the quality of the data before adding complexity.

- Feature Engineering: Develop new, valuable features from existing data. For instance, derive the day of the week from a date/time field or determine the number of words in a text feature to provide additional context to the model.

- Parameter Tuning: Adjust the model’s hyperparameters to improve performance. Techniques such as grid search or optimization algorithms can be used to determine optimal values for settings such as the learning rate, batch size, or tree depth.

- Cross-Validation: Divide the data into several folds and train the model multiple times, validating on a different fold each time. This checks that the model generalizes well across different parts of the data, not just a single split.
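To make grid search and cross-validation concrete, here is a minimal standard-library sketch. It assumes a toy one-dimensional k-nearest-neighbors classifier and synthetic data, both invented for the example. A real project would more likely rely on a library such as Scikit-learn, but the mechanics are the same: score each candidate hyperparameter by its average accuracy across folds.

```python
import random

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, val_idx) pairs; every sample is validated exactly once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i, val_idx in enumerate(folds):
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, val_idx

def knn_predict(train_pts, x, k):
    """Majority label among the k training points closest to x (toy 1-D model)."""
    nearest = sorted(train_pts, key=lambda p: abs(p[0] - x))[:k]
    return 1 if 2 * sum(label for _, label in nearest) > k else 0

def cv_accuracy(points, k_neighbors, n_folds=5):
    """Mean validation accuracy of the toy model across all folds."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(points), n_folds):
        train_pts = [points[i] for i in train_idx]
        hits = sum(
            knn_predict(train_pts, points[i][0], k_neighbors) == points[i][1]
            for i in val_idx
        )
        scores.append(hits / len(val_idx))
    return sum(scores) / len(scores)

# Synthetic data (hypothetical): the label is 1 when the feature is at least 0.5.
points = [(i / 20, 1 if i >= 10 else 0) for i in range(20)]

# Grid search: evaluate every candidate value and keep the best-scoring one.
grid = [1, 3, 5]
cv_scores = {k: cv_accuracy(points, k) for k in grid}
best_k = max(cv_scores, key=cv_scores.get)
```

Grid search is exhaustive, so its cost grows quickly with the number of hyperparameters; that is one reason the optimization-based alternatives mentioned above exist.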

Preventing Overfitting:

- Apply L1/L2 regularization to penalize overly complex models

- Dropout layers in neural networks (randomly turn off 20-50% of the neurons during training)

- Early stopping: Stop training when the validation error stops decreasing.
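Early stopping is simple enough to sketch directly. The version below assumes a `patience` of three epochs and a hypothetical list of validation losses; in a real training loop each loss would be computed after the corresponding epoch rather than supplied up front.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return (best_epoch, best_loss), stopping after `patience` epochs without improvement."""
    best_loss, best_epoch, waited = float("inf"), -1, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                break  # validation error has stopped decreasing
    return best_epoch, best_loss

# Hypothetical validation losses: improvement stalls after epoch 3.
losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.51, 0.53, 0.40]
best_epoch, best_loss = early_stopping_epoch(losses)
```

Note the trade-off the example exposes: training halts before the final value in the list is ever reached. A larger `patience` tolerates longer plateaus at the cost of extra training time.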

Phase 5: Testing and Validation

Testing Layers:

| Testing Type | Purpose | What Is Evaluated | Metrics / Measures |
|---|---|---|---|
| Unit Testing | Check individual parts in isolation | Data preprocessing functions, feature logic, model components | Output correctness, edge cases |
| Integration Testing | Ensure components work together | API endpoints, database connections, system behavior under load | Stability, response time, failure handling |
| Model Validation | Assess model performance beyond accuracy | Model predictions vs actual outcomes | Precision, Recall, F1 Score, AUC-ROC, RMSE |
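The reason to look beyond accuracy shows up clearly on imbalanced data. The sketch below computes precision, recall, and F1 by hand for a hypothetical set of ten transactions, two of which are fraudulent; the function name `binary_metrics` and the data are assumptions made for illustration.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive class)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical labels: the model misses one of the two fraud cases.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 0, 0, 0, 0, 1]
metrics = binary_metrics(y_true, y_pred)
```

Here accuracy is 90% even though the model catches only half of the fraud cases (recall of 0.5), which is exactly the gap that precision, recall, and F1 are meant to reveal.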

Shadow Deployment: Deploy the AI system concurrently with existing processes for 2-4 weeks. Compare the AI system’s predictions with human or existing system decisions without impacting the production environment. Drift between the training data sets and the live data can be measured.

Phase 6: Deployment Architecture

Move from experimentation to production.

Deployment Patterns:

- Batch Processing: Used when results are not needed instantly. Runs on a schedule, like nightly customer segmentation.

- Real-Time API: Used when predictions must be fast. The model is exposed through an API and responds in milliseconds.

- Edge Deployment: Used on mobile devices or sensors. Models are made smaller so they work with limited internet and hardware.

MLOps Infrastructure: Implement CI/CD pipelines for machine learning:

- Continuous Integration: Automatically tests data, models, and code whenever changes are made.

- Continuous Deployment: Pushes models to production automatically once they meet quality standards.

- Model Registry: Keeps track of model versions, experiments, and past results.

Scaling Strategies:

- Horizontal Scaling: Use multiple instances of the model to serve more users simultaneously.

- Caching: Store frequently requested predictions so that the model doesn’t have to repeat the same calculations.

- Model Optimization: Reduce model size and improve performance with minimal loss of accuracy.
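Prediction caching can be illustrated with Python's built-in `functools.lru_cache`. In this sketch `expensive_model` is a stand-in for real inference and the call counter exists only to show the cache working; a multi-instance deployment would more likely use a shared cache service, which is outside the scope of this example.

```python
from functools import lru_cache

MODEL_CALLS = {"count": 0}  # only here to show how often the model really runs

def expensive_model(features):
    """Stand-in for real inference (hypothetical)."""
    MODEL_CALLS["count"] += 1
    return sum(features) / len(features)

@lru_cache(maxsize=1024)
def cached_predict(features):
    """`features` must be hashable (a tuple), so identical requests hit the cache."""
    return expensive_model(features)

first = cached_predict((0.2, 0.4, 0.9))
second = cached_predict((0.2, 0.4, 0.9))  # served from the cache, model not called
```

Caching only helps when identical inputs recur, and cached results must be invalidated (for example with `cached_predict.cache_clear()`) whenever a new model version is deployed.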

Phase 7: Monitoring and Maintenance

AI systems degrade over time as real-world data moves away from the training data (data drift) or as the relationship between inputs and outcomes changes (concept drift).

Monitoring Metrics:

Keeping track of how your AI system is performing is very important. One key thing to watch for is data drift, which occurs when the incoming data differs from the data the model was trained on. You should also check how well the model itself is working. Important metrics to monitor include accuracy, response time, and the number of requests the model can handle.
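One common way to quantify data drift for a numeric feature is the Population Stability Index (PSI). The article does not prescribe a specific drift metric, so treat this as one possible sketch: the ten equal-width bins and the 0.25 alert threshold are widely used rules of thumb, not fixed standards, and the sample data is synthetic.

```python
import math

def population_stability_index(expected, actual, bins=10):
    """PSI between a training-time sample and a live sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all training values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp values outside the training range
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

training_sample = [i / 100 for i in range(100)]      # roughly uniform on [0, 1)
live_shifted = [0.5 + i / 200 for i in range(100)]   # live traffic concentrated in [0.5, 1)

psi_same = population_stability_index(training_sample, training_sample)
psi_shifted = population_stability_index(training_sample, live_shifted)
drift_alert = psi_shifted > 0.25  # rule-of-thumb threshold (assumption)
```

PSI only looks at input distributions. Pair it with accuracy tracking on labeled samples, since a model can degrade even when the inputs look unchanged.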

Maintenance Schedule:

- Weekly: Review failed predictions and edge cases.

- Monthly: Retrain models with new data if accuracy drops below thresholds.

- Quarterly: Full model audit, examine for bias, security vulnerabilities, and compliance with updated regulations.

Feedback Loops: Implement human-in-the-loop systems that route low-confidence predictions (<70% probability) to human reviewers. Use the corrections as new training data to continuously improve the model.
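A human-in-the-loop router can be very small. The sketch below uses the 70% threshold from the text; the function names, the in-memory review queue, and the record layout are hypothetical simplifications of what would normally be a ticketing or labeling tool.

```python
REVIEW_THRESHOLD = 0.70  # from the text: predictions below 70% confidence go to a human

def route_prediction(label, confidence, review_queue):
    """Return the label to act on, or None if the case was sent for human review."""
    if confidence < REVIEW_THRESHOLD:
        review_queue.append({"predicted": label, "confidence": confidence})
        return None
    return label

def record_correction(item, human_label, training_data):
    """Store the reviewer's decision so it can be used in the next retraining run."""
    training_data.append({**item, "label": human_label})

queue, new_training_data = [], []
auto_decision = route_prediction("fraud", 0.93, queue)   # confident: act automatically
deferred = route_prediction("fraud", 0.55, queue)        # uncertain: wait for a reviewer
record_correction(queue[0], "legitimate", new_training_data)
```

The threshold is a business decision as much as a technical one: lowering it reduces reviewer workload but lets more uncertain predictions through unchecked.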

What are The Common AI Implementation Pitfalls

Data Leakage:

- Do not include future data in training data.

- Ensure every feature’s timestamp precedes the timestamp of the target variable it is used to predict.
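Both checks can be automated. The following sketch assumes each row carries a `feature_date` and a `target_date` (hypothetical field names) and shows a leakage filter followed by a chronological train/test split.

```python
from datetime import date

def find_leaky_rows(rows, feature_key="feature_date", target_key="target_date"):
    """Return rows whose features were recorded on or after the outcome they predict."""
    return [r for r in rows if r[feature_key] >= r[target_key]]

def chronological_split(rows, cutoff, time_key="target_date"):
    """Train on outcomes before the cutoff; evaluate only on later ones."""
    train = [r for r in rows if r[time_key] < cutoff]
    test = [r for r in rows if r[time_key] >= cutoff]
    return train, test

rows = [
    {"feature_date": date(2024, 1, 5), "target_date": date(2024, 2, 1)},
    {"feature_date": date(2024, 3, 9), "target_date": date(2024, 4, 1)},
    {"feature_date": date(2024, 6, 2), "target_date": date(2024, 5, 1)},  # feature from the future
]
leaky = find_leaky_rows(rows)
clean_rows = [r for r in rows if r not in leaky]
train_rows, test_rows = chronological_split(clean_rows, cutoff=date(2024, 3, 1))
```

Running a check like `find_leaky_rows` as part of the data pipeline's automated tests catches leakage before it inflates offline metrics.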

Training-Serving Skew:

- Keep preprocessing consistent between training and production.

- Use the same transformation pipelines in both places (for example, TensorFlow Transform or Scikit-learn pipelines).
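The underlying idea is that scaling statistics are learned once, saved with the model, and reused unchanged at serving time. Here is a minimal standard-library sketch of that pattern; the function names and the JSON storage format are assumptions, and pipeline tools such as those named above package the same idea more robustly.

```python
import json
from statistics import mean, pstdev

def fit_scaler(values):
    """Learn scaling statistics once, on training data only."""
    return {"mean": mean(values), "std": pstdev(values) or 1.0}

def transform(value, scaler):
    """The single transformation used by BOTH the training job and the serving path."""
    return (value - scaler["mean"]) / scaler["std"]

# Training side: fit the scaler and persist it alongside the model artifact.
scaler = fit_scaler([10.0, 20.0, 30.0])
saved = json.dumps(scaler)

# Serving side: load the saved statistics instead of recomputing them on live data.
loaded = json.loads(saved)
train_value = transform(30.0, scaler)
served_value = transform(30.0, loaded)
```

Recomputing the mean and standard deviation from live traffic, or reimplementing `transform` separately in the serving code, are the two most common ways this kind of skew creeps in.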

Underestimating Latency:

- Complex models can take a long time to make predictions.

- Test inference speed early and distill or quantize if necessary.
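Testing inference speed early can be as simple as timing repeated calls and looking at percentiles rather than the average, since tail latency is what users notice. In this sketch `toy_model` is a hypothetical stand-in for a real model's predict function, and 200 runs is an arbitrary sample size.

```python
import time
from statistics import quantiles

def measure_latency_ms(predict, sample, runs=200):
    """Time repeated predictions and report p50 / p95 / p99 latency in milliseconds."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        predict(sample)
        timings.append((time.perf_counter() - start) * 1000)
    cuts = quantiles(timings, n=100)  # 99 cut points: cuts[k - 1] is the k-th percentile
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}

def toy_model(features):
    """Stand-in for real inference (hypothetical)."""
    return sum(v * v for v in features)

latency = measure_latency_ms(toy_model, [0.1] * 50)
```

Measurements taken on a development laptop are only indicative; repeat them on production-like hardware and with realistic input sizes before committing to a latency target.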

Ignoring Edge Cases:

- Models often fail on inputs unlike anything in the training data.

- Provide fallback behavior for predictions with low confidence.

Security Oversights:

- Protect against attacks like model inversion and adversarial examples.

- Implement input validation and API rate limiting.
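Input validation and rate limiting are usually handled by an API gateway or web framework, but the logic is easy to sketch. The example below assumes a model that takes exactly three numeric features and uses a token-bucket limiter; the class name, limits, and bounds are all hypothetical.

```python
import time

class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.tokens, self.updated = float(capacity), clock()

    def allow(self):
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

def validate_features(payload, n_features=3, bounds=(-1e6, 1e6)):
    """Reject malformed or out-of-range inputs before they reach the model."""
    if not isinstance(payload, list) or len(payload) != n_features:
        return False
    return all(
        isinstance(v, (int, float)) and not isinstance(v, bool)
        and bounds[0] <= v <= bounds[1]   # this comparison also rejects NaN
        for v in payload
    )

fake_now = [0.0]  # controllable clock so the demo is deterministic
bucket = TokenBucket(rate=1.0, capacity=2, clock=lambda: fake_now[0])
burst = [bucket.allow() for _ in range(3)]  # third request exceeds the burst capacity
fake_now[0] += 1.0                          # one second later, one token has refilled
after_wait = bucket.allow()
```

Rate limiting also raises the cost of model inversion and extraction attempts, which typically depend on sending large numbers of queries.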

Comparing Custom AI vs Ready-Made AI Platforms

| Factor | Ready-Made AI Platforms | Custom-Built AI |
|---|---|---|
| Time to Market | Very fast | Moderate to slow |
| Upfront Cost | Low | Higher |
| Long-Term Cost | Can grow quickly | Optimizable over time |
| Customization | Limited | Full |
| Vendor Lock-In | High | Low |
| Performance Optimization | Restricted | Fine-grained |
| Competitive Differentiation | Low | High |
| Best For | MVPs, internal tools, standard use cases | Core products, proprietary workflows |

One of the most common questions organizations face when developing AI solutions is whether to build an in-house AI solution or leverage an existing AI platform. There is no right or wrong answer to this question. It all depends on the business’s maturity level.

When Ready-Made Platforms Make Sense

Ready-made platforms work best when:

- The use case is standardized (chatbots, document classification, demand forecasting)

- Time-to-market matters more than deep customization

- You have limited in-house AI development expertise

| Advantages | Limitations |
|---|---|
| Fast deployment with minimal setup | Limited control over model behavior and architecture |
| Built-in scalability and security | Vendor lock-in risk over time |
| Lower upfront development cost | Less flexibility for custom workflows or edge cases |
| Integrated monitoring, MLOps, and compliance tools | Difficult to deeply optimize costs at scale |
| Reduced maintenance effort | Hard to differentiate when competitors use the same tools |

Custom AI Development: Control, Differentiation, and Long-Term Value

Custom AI development means designing and building your own AI system, tailored to your business’s data, workflows, and goals. It treats AI as a product capability rather than an off-the-shelf tool. For high-level implementation and accurate results, companies often hire AI developers who have experience implementing AI in their specific industry and at their business scale.

Custom development is the right path when:

- AI impacts revenue, risk, or customer experience directly

- Proprietary data is a valuable asset

- Business logic is complex or non-standard

- Performance, latency, or accuracy requirements are strict

- Cost optimization over the long term is important at scale

- AI is the key to competitive differentiation

| Advantages | Challenges |
|---|---|
| Full control over models, data pipelines, and system behavior | Higher upfront development cost |
| Fine-tune models for industry-specific edge cases | Requires skilled AI engineers and architects |
| Better cost efficiency at scale | Longer time to reach production |
| Easier integration with existing internal systems | Ongoing maintenance responsibility |
| Reduced reliance on third-party platforms | Compliance and monitoring handled in-house |
| Stronger ownership of intellectual property | |

Important insight:

Custom AI does not always mean building everything from scratch. Many successful systems combine open-source models, pre-trained foundations, and proprietary layers to balance speed and control.

AI Trends Shaping 2026: Key Shifts for Businesses

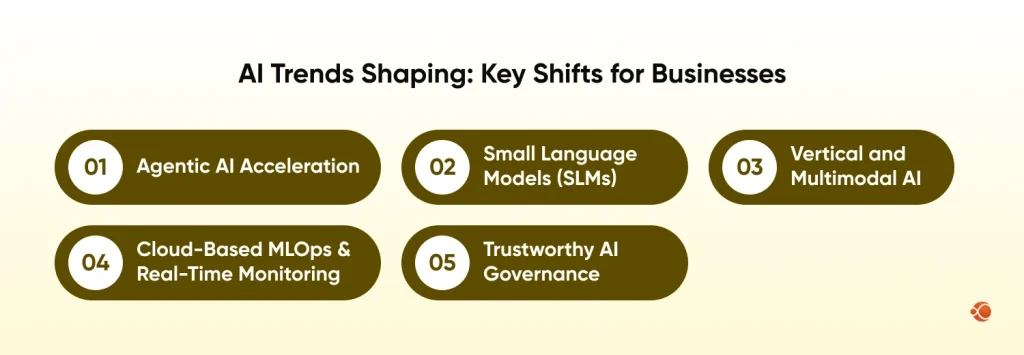

As businesses build AI systems in 2026, staying ahead means embracing trends such as agentic AI, governance mandates, and efficient MLOps. These trends are contributing to a reported 37% CAGR in the MLOps market alone.

1. Agentic AI Acceleration

Across various industries, AI agents that can work autonomously are becoming more popular. These autonomous agents will be able to execute complex, multi-step tasks without the need for human involvement. By allowing teams to pay attention to higher-level work, these agents are transforming how companies solve problems and automate processes.

2. Small Language Models (SLMs)

Many AI applications now use small language models as their preferred model. They run faster, require less memory and storage, and are easy to add to an existing application. Their small size also makes them practical for real-world deployment without a large drop in performance on focused tasks.

3. Vertical and Multimodal AI

Businesses are seeing strong results from vertical (industry-specific) AI models that work with text, voice, and visual inputs. Because these multimodal models understand all three types of data, they support personalized, context-aware experiences that increase user engagement and satisfaction.

4. Cloud-Based MLOps & Real-Time Monitoring

Modern AI solutions leverage cloud-native architectures and advanced monitoring and real-time observability to create a self-healing, scalable pipeline that adapts to changing workloads and ensures robust, reliable AI operations.

5. Trustworthy AI Governance

Ethics, bias mitigation, and regulatory compliance are now central to AI adoption. Implementing strong governance ensures AI systems are fair, transparent, and reliable, fostering trust among users and stakeholders while minimizing operational and reputational risks.

Why Choose CMARIX for Custom AI Software Development

At CMARIX, as a leading AI software development company, we develop customized AI solutions that address real business issues while remaining flexible for the future. Our strategy combines advanced technical knowledge, effective AI use, and a strong emphasis on outcomes. Every solution we develop is designed to scale and integrate perfectly to create long-term value for business growth.

- Expert AI Engineering: We handle everything from data setup to model building, deployment, and system integration.

- Business-Focused Solutions: AI is built to solve real problems, reduce manual work, and improve results.

- Full AI System Lifecycle Management: We manage the entire AI lifecycle, from design through implementation and all phases of enhancement.

- Scalable and Secure Systems: All of our AI software development services are built for both scalability and security, helping you meet your compliance obligations.

- Modern AI Tools & Frameworks: We use proven tools like Python, cloud platforms, LLMs, and MLOps pipelines.

- Transparent Collaboration: Clear timelines, regular progress updates, and shared ownership of delivery.

- Future-Ready AI: Solutions are easy to maintain and adapt as business needs change.

- AI Expertise in UX Design: We use AI to create scalable, adaptable, and modern UI/UX.

Conclusion

Creating an AI system in 2026 requires the right goals, high-quality data, and a scalable, secure architecture. By integrating strategic planning, AI, and custom solutions, organizations can develop intelligent systems that deliver tangible results and long-term value.

FAQs on How to Build an AI System

What Is An AI System?

An AI system is a software solution that uses data, algorithms, and models to learn patterns, make decisions, and automate tasks with minimal human intervention.

How To Create An AI From Scratch?

Creating AI from scratch involves defining objectives, collecting relevant data, training models, validating results, deploying the system, and continuously improving accuracy and performance.

What Programming Languages Are Best For Building AI?

Python is widely used for AI, while Java, R, C++, and JavaScript support scalable systems, statistical modeling, performance optimization, and AI-driven web applications.

What Tools And Frameworks Are Used To Develop AI Systems?

AI development relies on frameworks like TensorFlow, PyTorch, Scikit-learn, OpenCV, Hugging Face, and cloud AI services for training, deployment, and lifecycle management.

How Much Does It Cost To Develop An AI System?

AI development costs depend on scope, data complexity, infrastructure, integrations, and maintenance, ranging from low-cost prototypes to high-investment enterprise AI platforms.

What Are Common Challenges In AI System Development?

Challenges include acquiring quality data, reducing bias, ensuring scalability, managing security risks, integrating systems, controlling costs, and maintaining long-term model performance.