Quick Summary: This guide explores how to build AI apps with Node.js, highlighting its speed, scalability, and event-driven architecture. We will cover key benefits, top AI libraries like TensorFlow.js, Brain.js, and LangChain.js, and practical implementations such as chatbots, predictive analytics, recommendation engines, and intelligent automation.

Node.js is popular for its speed, scalability, and event-driven architecture, and it has emerged as a common choice for AI application development. While Python has led the AI landscape, Node.js provides a smooth way to integrate AI into an app, which makes it a strong platform for developers building real-time, AI-powered applications. According to Stack Overflow’s 2025 Developer Survey, Node.js (a JavaScript runtime rather than a programming language) ranks among the most widely used web technologies.

Why Build AI Apps with Node.js?

Node.js has emerged as a runtime for building intelligent, high-performing applications that demand real-time interactions and scalability. Its asynchronous, event-driven design lets developers process large data streams and AI model responses efficiently. These features make it a popular platform for AI application development.

Benefits of Node.js AI App Development

- High Performance and Scalability: Node.js runs on the V8 JavaScript engine, which just-in-time compiles JavaScript to machine code. This speeds up execution and helps applications scale.

- Non-Blocking, Asynchronous Architecture: Node.js follows a non-blocking I/O model that lets developers handle many AI-driven requests concurrently. CPU-heavy inference still occupies the thread it runs on, so it is usually moved to worker threads or separate services.

- Rich Ecosystem of AI Libraries and Packages: The npm registry is one of the largest software registries, holding more than 2 million packages. It includes many dedicated AI and ML libraries such as Brain.js, Synaptic, and TensorFlow.js.

- Smooth Integration with AI APIs and Services: One of the primary benefits that makes Node.js well suited to AI software development is its ability to integrate with third-party AI APIs such as OpenAI, Google Cloud AI, and AWS Machine Learning.

- Cross-Platform Compatibility: Node.js supports various platforms, allowing teams to build cross-platform AI-driven applications that can smoothly run on web, desktop, and mobile environments.

- Strongest Community and Enterprise Support: Node.js has one of the most active developer communities. It is a treasure trove for entry-level Node.js developers as well as senior developers who need new tools to streamline their routine tasks.

- Unified Development Experience: One of the biggest benefits of building AI apps with Node.js also comes down to the uniformity of backend and frontend running on JavaScript. Businesses can hire Node.js developers to work on the entire application with a consistent codebase.
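To make the non-blocking model from the list above concrete, here is a minimal sketch that starts three I/O-bound tasks at once. The `fakeAiCall` helper, its task names, and its delays are hypothetical stand-ins for real requests to a hosted model; only waiting on I/O overlaps this way, not CPU-bound work.

```javascript
// Hypothetical stand-in for an I/O-bound AI call (for example, an HTTP request
// to a hosted model). It resolves after `ms` milliseconds.
const fakeAiCall = (name, ms) =>
  new Promise((resolve) => setTimeout(() => resolve(`${name} done`), ms));

// Start all three calls at once; total time is close to the slowest call,
// not the sum of all three.
const runConcurrently = async () => {
  const started = Date.now();
  const results = await Promise.all([
    fakeAiCall('sentiment', 30),
    fakeAiCall('summary', 50),
    fakeAiCall('embedding', 40),
  ]);
  return { results, elapsedMs: Date.now() - started };
};

runConcurrently().then(({ results, elapsedMs }) => {
  console.log(results, `${elapsedMs} ms`);
});
```

`Promise.all` preserves the order of its inputs, so results can be matched to tasks by position even though the calls finish at different times.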

Getting Started with AI in Node.js Using TensorFlow.js

Node.js is becoming increasingly popular for integrating AI into web and server applications. With TensorFlow.js, you can make use of JavaScript to build AI applications, whether for object detection, image classification, or other machine learning tasks. This guide walks through using TensorFlow.js in Node.js, covering both pre-packaged models and direct TensorFlow.js models.

Prerequisites

Before starting, make sure the following are ready:

- Basic understanding of Node.js

- Familiarity with Node.js AI machine learning integration concepts

- A development machine running Linux®, macOS, or Windows® with:

  - Node.js installed

  - Visual Studio Code (or another editor)

  - Python environment

  - Xcode installed (macOS only)

Step 1: Initialize a TensorFlow.js Project

Start by creating a new Node.js project:

mkdir tfjs-demo

cd tfjs-demo

npm init -y

npm install @tensorflow/tfjs-node

If you have a CUDA-enabled GPU, you can install the GPU version:

npm install @tensorflow/tfjs-node-gpu

Note: CUDA Toolkit and cuDNN must also be installed for GPU usage.

Step 2: Running a Pre-Packaged Model (COCO-SSD)

TensorFlow.js provides several pre-trained models that simplify development. In this example, we’ll use the COCO-SSD object detection model.

Install the COCO-SSD Model

npm install @tensorflow-models/coco-ssd

Create an Object Detection Script

Create a file called index.js and add the following:

const cocoSsd = require('@tensorflow-models/coco-ssd');

const tf = require('@tensorflow/tfjs-node');

const fs = require('fs').promises;

// Load the model and image

Promise.all([cocoSsd.load(), fs.readFile('image1.jpg')])
  .then(([model, imageBuffer]) => {
    const imageTensor = tf.node.decodeImage(new Uint8Array(imageBuffer), 3);
    return model.detect(imageTensor);
  })
  .then(predictions => {
    console.log(JSON.stringify(predictions, null, 2));
  });

Run the Script

node .

You should see predictions showing detected objects, their bounding boxes, and confidence scores.

How the Code Works

- Import Modules: coco-ssd for object detection, tfjs-node for Tensor support, and fs to read image files.

- Load Model and Image: Use Promise.all() to load both asynchronously.

- Decode Image: Convert image buffer into a 3D Tensor.

- Run Detection: Call detect() to get predictions.

- Output: Predictions are displayed as JSON.
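Once predictions come back, a common next step is to drop low-confidence detections. The sketch below works on plain objects in the shape `detect()` returns (`bbox`, `class`, `score`); the sample values and the `filterPredictions` helper are illustrative assumptions, not part of the COCO-SSD package.

```javascript
// Sample data in the shape returned by COCO-SSD's detect():
// bbox is [x, y, width, height] in pixels. Values here are made up.
const samplePredictions = [
  { bbox: [12, 30, 200, 340], class: 'person', score: 0.92 },
  { bbox: [250, 80, 90, 60], class: 'dog', score: 0.41 },
  { bbox: [5, 5, 40, 40], class: 'cup', score: 0.77 },
];

// Keep detections at or above minScore, strongest first.
const filterPredictions = (predictions, minScore = 0.5) =>
  predictions
    .filter((p) => p.score >= minScore)
    .sort((a, b) => b.score - a.score)
    .map((p) => ({ label: p.class, score: p.score, bbox: p.bbox }));

console.log(filterPredictions(samplePredictions));
```

The threshold of 0.5 is only a starting point; tune it against your own images.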

Step 3: Running a TensorFlow.js Model Directly

You can also load models from TensorFlow Hub or local JSON without using pre-packaged APIs. Here, we load an SSD object detection model (SSDLite MobileNet V2) as a GraphModel from TensorFlow Hub.

Load the Model from TensorFlow Hub

Create a file called run-tfjs-model.js:

const tf = require('@tensorflow/tfjs-node');

const fs = require('fs');

const modelUrl = 'https://tfhub.dev/tensorflow/tfjs-model/ssdlite_mobilenet_v2/1/default/1';

let detectionModel;

const loadDetectionModel = async () => {
  console.log(`Loading model from ${modelUrl}`);
  detectionModel = await tf.loadGraphModel(modelUrl, { fromTFHub: true });
  return detectionModel;
};

Preprocess Input Images

const preprocessImage = (imagePath) => {
  console.log(`Processing image: ${imagePath}`);
  const imgBuffer = fs.readFileSync(imagePath);
  const uint8array = new Uint8Array(imgBuffer);
  return tf.node.decodeImage(uint8array, 3).expandDims();
};

Run Inference

const runInference = (inputTensor) => {
  console.log('Running inference...');
  return detectionModel.executeAsync(inputTensor);
};

Process Model Output

We extract classes and scores, apply Non-Maximum Suppression (NMS), and format predictions:

const labels = require('./labels.js');

let imgHeight = 1, imgWidth = 1;

// For each box, find the highest class score and the index of that class.
const extractMaxScores = (scoreTensor) => {
  const scores = scoreTensor.dataSync();
  const maxScores = [];
  const classes = [];
  const [numBoxes, numClasses] = [scoreTensor.shape[1], scoreTensor.shape[2]];
  for (let i = 0; i < numBoxes; i++) {
    let maxScore = -1, classIndex = -1;
    for (let j = 0; j < numClasses; j++) {
      const idx = i * numClasses + j;
      if (scores[idx] > maxScore) {
        maxScore = scores[idx];
        classIndex = j;
      }
    }
    maxScores[i] = maxScore;
    classes[i] = classIndex;
  }
  return [maxScores, classes];
};

// Keep at most 5 boxes, using 0.5 for both the IoU and score thresholds.
const performNMS = (boxes, maxScores) => {
  const boxesTensor = tf.tensor2d(boxes.dataSync(), [boxes.shape[1], boxes.shape[3]]);
  const indexTensor = tf.image.nonMaxSuppression(boxesTensor, maxScores, 5, 0.5, 0.5);
  return indexTensor.dataSync();
};

// Scale the normalized box corners to pixels and attach label and score.
const buildJSONResponse = (boxes, scores, indexes, classes) => {
  const result = [];
  for (let i = 0; i < indexes.length; i++) {
    const bbox = [];
    for (let j = 0; j < 4; j++) {
      bbox[j] = boxes[indexes[i] * 4 + j];
    }
    result.push({
      bbox: [
        bbox[1] * imgWidth,
        bbox[0] * imgHeight,
        bbox[3] * imgWidth,
        bbox[2] * imgHeight
      ],
      label: labels[classes[indexes[i]]],
      score: scores[indexes[i]]
    });
  }
  return result;
};

const processModelOutput = (prediction) => {
  const [maxScores, classes] = extractMaxScores(prediction[0]);
  const indexes = performNMS(prediction[1], maxScores);
  return buildJSONResponse(prediction[1].dataSync(), maxScores, indexes, classes);
};

Full Run Script

if (process.argv.length < 3) {
  console.log('Please provide an image path. Example:');
  console.log(' node run-tfjs-model.js /path/to/image.jpg');
} else {
  const imagePath = process.argv[2];
  loadDetectionModel()
    .then(model => {
      const inputTensor = preprocessImage(imagePath);
      imgHeight = inputTensor.shape[1];
      imgWidth = inputTensor.shape[2];
      return runInference(inputTensor);
    })
    .then(prediction => {
      const output = processModelOutput(prediction);
      console.log(output);
    });
}

Run the script:

node run-tfjs-model.js image1.jpg

The result is a JSON array showing detected objects, their scores, and bounding boxes.
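The Non-Maximum Suppression step used in Step 3 can be easier to follow in plain JavaScript. The sketch below is a simplified greedy NMS over `[y1, x1, y2, x2]` boxes, written for illustration; it is not the TensorFlow.js implementation, and the `iou` and `greedyNms` helpers and the sample boxes are assumptions.

```javascript
// Intersection-over-Union of two boxes given as [y1, x1, y2, x2].
const iou = (a, b) => {
  const interH = Math.max(0, Math.min(a[2], b[2]) - Math.max(a[0], b[0]));
  const interW = Math.max(0, Math.min(a[3], b[3]) - Math.max(a[1], b[1]));
  const inter = interH * interW;
  const areaA = (a[2] - a[0]) * (a[3] - a[1]);
  const areaB = (b[2] - b[0]) * (b[3] - b[1]);
  const union = areaA + areaB - inter;
  return union > 0 ? inter / union : 0;
};

// Greedy NMS: visit boxes from highest to lowest score and keep a box only
// if it does not overlap an already-kept box by more than iouThreshold.
const greedyNms = (boxes, scores, maxOutput, iouThreshold, scoreThreshold) => {
  const order = scores
    .map((score, index) => ({ score, index }))
    .filter((s) => s.score > scoreThreshold)
    .sort((a, b) => b.score - a.score);
  const kept = [];
  for (const { index } of order) {
    if (kept.length >= maxOutput) break;
    const overlaps = kept.some((k) => iou(boxes[k], boxes[index]) > iouThreshold);
    if (!overlaps) kept.push(index);
  }
  return kept;
};

// Made-up boxes: the first two overlap heavily, the third stands alone.
const boxes = [
  [0.1, 0.1, 0.5, 0.5],
  [0.12, 0.12, 0.52, 0.52],
  [0.6, 0.6, 0.9, 0.9],
];
const scores = [0.9, 0.8, 0.7];
console.log(greedyNms(boxes, scores, 5, 0.5, 0.5)); // indexes of surviving boxes
```

With these sample values the second box is suppressed because it overlaps the higher-scoring first box, which is why duplicate detections of one object collapse into a single result.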

Optional Enhancement: Annotate Images

To visualize predictions on images:

npm install @codait/max-vis

Add the following:

const maxvis = require('@codait/max-vis');

const path = require('path');

const annotateImage = (predictions, imagePath) => {
  maxvis.annotate(predictions, imagePath)
    .then(buffer => {
      const fileName = path.join(path.parse(imagePath).dir, `${path.parse(imagePath).name}-annotated.png`);
      fs.writeFile(fileName, buffer, (err) => {
        if (err) console.error(err);
        else console.log(`Annotated image saved as ${fileName}`);
      });
    });
};

Call annotateImage(output, imagePath); after processing predictions to generate an image with bounding boxes.

Best Node.js Libraries for AI Development

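| Library | Best For / Key Uses |
| --- | --- |
| TensorFlow.js | Production services, CV, deep transfer-learning, teams that already know TensorFlow |
| Brain.js | Quick prototypes, embedded rules, teaching demos |
| Synaptic | Custom architectures, algorithm experiments, educational projects |
| Natural | Node.js AI chatbots, search, spam filters, lightweight NLP micro-services |
| LangChain.js | Q&A bots, autonomous agents, RAG pipelines, rapid LLM MVPs |
| Transformers.js | Private ML, edge functions, browser extensions, no-GPU servers |
| ml5.js | Interactive art, education, hack-days, quick CV demos |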

TensorFlow.js (tfjs-node)

TensorFlow.js brings Google’s ML stack to Node with native C++ bindings and optional CUDA acceleration, letting you train or fine-tune models in JavaScript without running a Python service. Its model converter imports existing SavedModels or Keras files, and many pre-trained vision, NLP, and audio models are published in TensorFlow.js format. For small-batch inference, tfjs-node can be competitive with Python because both call into the same native TensorFlow library, but benchmark your own workload before relying on that.

Key features

- Keras-style Layers API plus a lower-level ops API; runs converted graph models

- GPU via @tensorflow/tfjs-node-gpu (CUDA 11+)

- Pre-trained hub, converter, quantization, tensorboard integration

- Best for: production services, CV, deep transfer-learning, teams that already know TensorFlow

Brain.js

Brain.js is a low-configuration neural-network library: one require() and you’re building feed-forward, LSTM, or GRU nets in plain JavaScript. It can use the GPU through GPU.js where one is available and otherwise falls back to plain JavaScript on the CPU, so it suits lightweight bots, prototypes, or edge devices where GPUs aren’t available. Despite its simplicity, it still offers streams, JSON serialization, and cross-validation.

Key features

- LSTM/GRU, feed-forward, RNNTimeStep, XOR demo in 5 lines

- Optional GPU acceleration via GPU.js, with a plain JavaScript CPU fallback

- Built-in k-fold, serialization, and incremental training

- Best for: quick prototypes, embedded rules, teaching demos

Synaptic

Synaptic is a research-oriented “neural-network laboratory” that exposes low-level components (neurons, layers, connections, trainers, and architectures), so you can experiment with new algorithms without leaving JavaScript. It ships with classics like Hopfield, LSTM, and Liquid State Machines, plus a built-in Trainer for backpropagation-based training. It is plain JavaScript with no dependencies, so it runs in Node or the browser.

Key features

- Architect.* presets + DIY Network.fromJSON/toJSON

- Trainer: backpropagation with configurable learning rate, cost function, and iteration count

- No dependencies, MIT, great for academic tinkering

- Best for: custom architectures, algorithm experiments, educational projects

Natural

Natural gives you the classic NLP toolbox (tokenizers, stemmers, TF-IDF, Naïve Bayes, sentiment, WordNet, phonetics, n-grams) in one npm install. It’s pure JavaScript, so you can bundle it into serverless functions or Electron apps without native headaches. While it won’t train BERT, it’s a solid choice for rule-based or classical machine learning in Node.js text pipelines.

Key features

- 40+ languages, Porter/Lancaster stemmers, double-metaphone

- Classifiers: Naïve Bayes, Logistic Regression, MaxEnt

- Sentiment analyzer, WordNet interface, tokenizer streaming

- Best for: Node.js AI chatbots, search, spam filters, lightweight NLP micro-services

LangChain.js

LangChain.js is the JavaScript port of the orchestration framework that turned “LLM glue code” into composable chains. Load a local GGUF model or a remote OpenAI model, then add memory, retrieval, tools, and agents through a composable API. It ships with 100+ integrations (Supabase, Pinecone, Cheerio, CSV, SQL, Zapier, and more), so you can build RAG bots or autonomous agents quickly.

Key features

- Chains, Agents, Retrieval, Memory, Output Parsers

- Works with local models (llama-node, transformers.js) or SaaS

- Tracing via LangSmith, streaming, structured output

- Best for: Q&A bots, autonomous agents, RAG pipelines, rapid LLM MVPs

Transformers.js

Transformers.js ports Hugging Face’s transformers to ONNX, giving you state-of-the-art BERT, Whisper, ViT, T5, Stable-Diffusion, etc., running entirely client-side or in Node with zero Python. Models are pre-converted and chunked so you lazy-load only the shards you need; inference uses ONNX-Runtime for CPU or WebGPU for near-native speed.

Key features

- 100+ ready pipelines: fill-mask, summarization, ASR, image-to-text

- Quantized int8/int4 models, half-size, 2-3× faster

- Works offline, no CUDA install, perfect for serverless

- Best for: private ML, edge functions, browser extensions, no-GPU servers

ml5.js

ml5.js wraps TensorFlow.js in an opinionated, beginner-friendly API that reduces pose detection to ml5.poseNet(video, modelLoaded). It bundles curated models for body, face, hand, sound, style-transfer and sentiment, plus utilities for data collection and transfer learning. It targets the browser, and running its examples under Node generally requires canvas and DOM polyfills, so treat it mainly as a teaching tool or rapid prototype kit.

Key features

- One-liners for PoseNet, BodyPix, FaceMesh, SoundClassifier, StyleTransfer

- Built-in transfer-learning helper (featureExtractor)

- Friendly docs, creative-coding community, p5.js integration

- Best for: interactive art, education, hack-days, quick CV demos

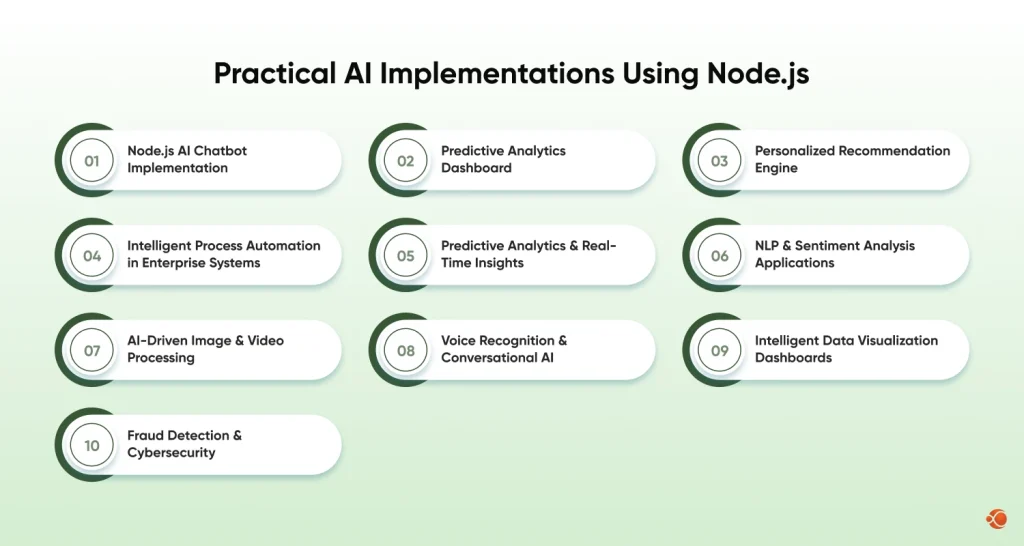

Practical AI Implementations Using Node.js

1. Node.js AI Chatbot Implementation

LangChain.js turns your Express server into an LLM-powered conversational endpoint: one chain handles context, memory, and tool-calling while Socket.IO streams answers back to any client. Because the whole flow is JavaScript, you can retrain embeddings, swap models, or add Redis-backed conversation history without leaving the Node ecosystem.

2. Predictive Analytics Dashboard

With tfjs-node you can train or refit linear-regression or deep time-series models on CSV streams and immediately expose predictions through a WebSocket that ECharts consumes live. Danfo.js supplies Pandas-like wrangling in memory, so the same language that serves the HTTP routes also builds the feature pipeline, with no Python microservice required.

3. Personalized Recommendation Engine

Matrix-factorization running in TensorFlow.js scores user-item vectors in under 20 ms, while RedisJSON keeps click vectors hot in RAM for real-time updates. If a new item appears, the service falls back to TF-IDF cosine similarity from the natural package, ensuring cold-start coverage without spinning up a separate Spark cluster.
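The cold-start fallback described above comes down to comparing feature vectors with cosine similarity. Here is a minimal sketch in plain JavaScript; the catalog, the vectors, and the `mostSimilar` helper are made up for illustration, and in practice the vectors would come from a TF-IDF step such as the one in the natural package.

```javascript
// Cosine similarity between two equal-length numeric vectors.
const cosineSimilarity = (a, b) => {
  let dot = 0, normA = 0, normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
};

// Rank catalog items by similarity to a new item's feature vector.
const mostSimilar = (newItemVector, catalog, topK = 2) =>
  catalog
    .map(({ id, vector }) => ({ id, similarity: cosineSimilarity(newItemVector, vector) }))
    .sort((x, y) => y.similarity - x.similarity)
    .slice(0, topK);

// Made-up term-weight vectors over a shared four-term vocabulary.
const catalog = [
  { id: 'running-shoes', vector: [1, 1, 0, 0] },
  { id: 'trail-shoes', vector: [1, 0, 1, 0] },
  { id: 'coffee-maker', vector: [0, 0, 0, 1] },
];
console.log(mostSimilar([1, 1, 0.5, 0], catalog));
```

Because cosine similarity ignores vector length, a short product description and a long one can still match when they share the same terms.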

4. Intelligent Process Automation in Enterprise Systems

By embedding Transformers.js TrOCR inside an n8n flow, Node can read invoices, apply JSON-logic decision tables, and drive Puppeteer through legacy SAP screens, all in one event loop. BullMQ retries and records each RPA job, so the same JavaScript codebase handles cognition, rules, and robotic clicks without external runtimes.

5. Predictive Analytics & Real-Time Insights

A KafkaJS consumer feeds micro-batches into a TensorFlow auto-encoder that flags anomalies in < 50 ms, then pushes scores back to Kafka and a live dashboard via WebSocket. Because the model is loaded natively in Node, you can hot-reload newer weights and update the threshold on the fly without dropping a single event.

6. NLP & Sentiment Analysis Applications

A compact, quantized DistilBERT sentiment model loaded through @xenova/transformers classifies text straight from your Express route, while the natural package adds stemmers and TF-IDF fallbacks for domain slang. Language coverage depends on the checkpoint you choose, so pick a multilingual model if you need more than English. Rate-limiting and helmet middleware protect the endpoint, letting you embed sentiment scoring into any form, chat, or ticketing system.

7. AI-Driven Image & Video Processing

Sharp decodes frames, Transformers.js runs quantized YOLOX or CLIP, and ffmpeg-static re-encodes the annotated video, no OpenCV compilation needed. Whether you choose GPU-backed tfjs-node or CPU-only int8, the whole pipeline stays inside JavaScript, so you can deploy it as a Lambda layer or long-running container with identical code.

8. Voice Recognition & Conversational AI

Socket.IO pipes 16 kHz PCM from the browser to a Whisper tiny model (roughly 39 million parameters) that streams partial transcripts every two seconds; node-vad strips silence to save compute. The resulting text lands back in LangChain for intent routing, giving you a full conversational stack without leaving the Node ecosystem.

9. Intelligent Data Visualization Dashboards

Node auto-generates Vega-Lite specs from Danfo dataframes and pushes incremental cubes through WebSocket, letting the front-end render 60 fps pan-and-zoom with zero hand-written D3. If tfjs-node spots hidden clusters, their centroids are added as a draggable layer for interactive exploration.

10. Fraud Detection & Cybersecurity

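An anomaly-detection model served from Node, such as an isolation forest or a tfjs-node autoencoder, scores each transaction in under 10 ms, while a Redis Bloom filter blocks known bad hashes at ingress. Graph features built with graphlib detect ring fraud. If latency spikes above 200 ms, the system fails closed, holding or rejecting the transaction rather than approving it unscored.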

Best Practices for Implementing AI in Node.js

Use the Latest LTS Node

New long-term support releases bundle fresher V8 optimisations and updated native AI bindings that can shave 10 to 30 percent off inference time for free. Pin the exact version in an .nvmrc file and rebuild any native modules after each upgrade to keep those gains.

Offload Heavy Work from the Event Loop

Model forward passes can hog the CPU for tens of milliseconds. Move them to worker threads or a pooled micro service so the main thread stays free to handle HTTP and WebSocket traffic without jitter.
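As a minimal sketch of moving CPU-bound work off the main thread, the example below uses Node's built-in worker_threads module. The dot product inside the worker is a hypothetical stand-in for a real model call, and the worker source is inlined with `eval: true` only to keep the example in one file.

```javascript
const { Worker } = require('node:worker_threads');

// Source of the worker, inlined so the sketch fits in one file. In a real
// service this would live in its own module and load the model once.
const workerSource = `
  const { parentPort, workerData } = require('node:worker_threads');
  // Hypothetical CPU-heavy "inference": a dot product standing in for a model call.
  const score = workerData.features.reduce(
    (sum, x, i) => sum + x * workerData.weights[i], 0);
  parentPort.postMessage(score);
`;

// Run one job in a worker thread and resolve with its result.
const runInWorker = (features, weights) =>
  new Promise((resolve, reject) => {
    const worker = new Worker(workerSource, {
      eval: true,
      workerData: { features, weights },
    });
    worker.once('message', resolve);
    worker.once('error', reject);
  });

runInWorker([1, 2, 3], [0.5, 0.25, 2]).then((score) => {
  console.log('score from worker:', score);
});
```

Spawning a worker per request is expensive; a production setup would more likely keep a small pool of long-lived workers and queue jobs to them.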

Cache Predictions Aggressively

Store vectorised inputs, frequent scores, or even whole model outputs in Redis or an in-process LRU, but give each key a short TTL. This slashes latency and keeps you compliant when data retention rules change.
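For the in-process option, a small LRU cache with a per-entry TTL can be built on a Map, which iterates keys in insertion order. This is a sketch under that assumption; the `TtlLruCache` class and its keys are hypothetical, and the `now` parameter exists only so the example is deterministic.

```javascript
// Minimal in-process LRU cache with a per-entry TTL, built on Map's
// insertion-order guarantee. A sketch, not a replacement for Redis or a
// maintained LRU package.
class TtlLruCache {
  constructor(maxEntries, ttlMs) {
    this.maxEntries = maxEntries;
    this.ttlMs = ttlMs;
    this.entries = new Map();
  }

  get(key, now = Date.now()) {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (now >= entry.expiresAt) {
      this.entries.delete(key); // expired
      return undefined;
    }
    // Re-insert to mark the key as most recently used.
    this.entries.delete(key);
    this.entries.set(key, entry);
    return entry.value;
  }

  set(key, value, now = Date.now()) {
    this.entries.delete(key);
    if (this.entries.size >= this.maxEntries) {
      // The first key in a Map is the least recently used one.
      this.entries.delete(this.entries.keys().next().value);
    }
    this.entries.set(key, { value, expiresAt: now + this.ttlMs });
  }
}

const cache = new TtlLruCache(2, 1000);
cache.set('input-a', { label: 'cat', score: 0.9 }, 0);
cache.set('input-b', { label: 'dog', score: 0.8 }, 0);
cache.get('input-a', 10); // touch a, so b becomes the oldest entry
cache.set('input-c', { label: 'cup', score: 0.7 }, 20); // evicts b
console.log(cache.get('input-b', 30)); // undefined (evicted)
console.log(cache.get('input-a', 2000)); // undefined (expired)
```

In a real service the cache key would typically be a hash of the normalized input, so equivalent requests share one entry.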

Keep Models Lean

Quantise weights to int8, prune unused layers, and stream model.json from disk or a CDN so cold start stays under a second. Your serverless instances will spin up fast and cost less.
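To show what 8-bit quantization does to weights, here is a simplified affine (min/max) scheme in plain JavaScript. It stores each weight in one unsigned byte instead of a 4-byte float; the `quantize` and `dequantize` helpers and the sample weights are illustrative assumptions, not the scheme any particular converter uses.

```javascript
// Affine (min/max) quantization of float weights to unsigned 8-bit integers.
// A sketch of the idea only; real toolchains quantize per tensor or per channel.
const quantize = (weights) => {
  let min = Infinity;
  let max = -Infinity;
  for (const w of weights) {
    if (w < min) min = w;
    if (w > max) max = w;
  }
  const scale = (max - min) / 255 || 1; // fall back to 1 when all weights are equal
  const q = Uint8Array.from(weights, (w) => Math.round((w - min) / scale));
  return { q, scale, min };
};

// Recover approximate float weights from the quantized form.
const dequantize = ({ q, scale, min }) => Array.from(q, (v) => v * scale + min);

const weights = [-1, -0.5, 0, 0.5, 1]; // made-up values
const packed = quantize(weights);
const restored = dequantize(packed);
const maxError = Math.max(...weights.map((w, i) => Math.abs(w - restored[i])));
console.log(packed.q, maxError <= packed.scale / 2 + 1e-9);
```

The round-trip error is bounded by half the scale step, which is the accuracy cost traded for the smaller download.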

Secure Model Artefacts

Treat .bin weight files like secrets. Host them outside your repo, sign download URLs, and gate AI routes with JWT scopes so a leaked API key cannot dump months of fine-tuning work.

Version Everything Together

Tag the dataset, docker image, and model checksum under one semantic version. When accuracy drifts you can roll back with a single deploy instead of scrambling to retrain.
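One way to tie these pieces together is a small manifest that records the model checksum next to the dataset and image tags. The sketch below uses Node's built-in crypto module; the manifest fields, tag values, and helper names are hypothetical.

```javascript
const crypto = require('node:crypto');

// Hex SHA-256 digest of a Buffer (for example, the bytes of a weights file).
const sha256 = (buffer) => crypto.createHash('sha256').update(buffer).digest('hex');

// One manifest ties the dataset tag, image tag, and model checksum to a version.
const buildManifest = ({ version, datasetTag, imageTag, modelBytes }) => ({
  version,
  datasetTag,
  imageTag,
  modelSha256: sha256(modelBytes),
});

// At startup, refuse to serve if the loaded weights do not match the manifest.
const verifyModel = (manifest, modelBytes) => sha256(modelBytes) === manifest.modelSha256;

// Made-up values; in practice modelBytes would come from fs.readFile().
const modelBytes = Buffer.from('pretend these are model weights');
const manifest = buildManifest({
  version: '1.4.0',
  datasetTag: 'reviews-2024-06',
  imageTag: 'ai-service:1.4.0',
  modelBytes,
});
console.log(manifest, verifyModel(manifest, modelBytes));
```

Checking the digest at startup catches the case where a deploy rolls back the code but not the weights.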

Stream Audio and Video Chunks

Pipe media frame by frame through WebSocket or WebRTC and run Whisper or YOLOX on partial data. Latency drops and you stay inside tight Lambda timeouts without buffering giant clips.

Monitor What Matters

Instrument P99 inference time, GPU memory, and prediction confidence. Set alerts so degradation is fixed before customers feel it, not after Twitter complains.
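As a rough illustration of tracking P99 inference time, the sketch below records call durations and computes a nearest-rank percentile. The `timed` wrapper, the `latencies` array, and the sample numbers are assumptions; a production service would more likely export a histogram to its metrics system.

```javascript
// Nearest-rank percentile over latency samples in milliseconds.
const percentile = (samples, p) => {
  if (samples.length === 0) return undefined;
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.max(1, Math.ceil((p * sorted.length) / 100));
  return sorted[rank - 1];
};

// Time an async call and record its duration, whether it succeeds or throws.
const latencies = [];
const timed = async (fn, ...args) => {
  const start = process.hrtime.bigint();
  try {
    return await fn(...args);
  } finally {
    latencies.push(Number(process.hrtime.bigint() - start) / 1e6);
  }
};

// Made-up samples: 98 fast calls and two slow outliers.
const samples = [...Array(98).fill(12), 300, 480];
console.log('p50:', percentile(samples, 50), 'p99:', percentile(samples, 99));
```

The median hides the outliers entirely here, which is why the tail percentile is the one worth alerting on.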

Separate Logic from Routes

Keep controllers thin. Put model orchestration in testable service classes that Jest can hit with mocked tensors. Your future self will appreciate the clarity when the codebase grows.
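A minimal sketch of that separation follows: the service class owns the model call, and the handler only parses input and shapes the response. The `SentimentService` class, the `predict(text)` model interface, and the stub model are hypothetical; the same stub approach works in Jest.

```javascript
// Service class that owns model orchestration. The model is injected, so tests
// can pass a stub instead of loading real weights.
class SentimentService {
  constructor(model, threshold = 0.5) {
    this.model = model;
    this.threshold = threshold;
  }

  async classify(text) {
    if (typeof text !== 'string' || text.trim() === '') {
      throw new TypeError('text must be a non-empty string');
    }
    const score = await this.model.predict(text.trim());
    return { label: score >= this.threshold ? 'positive' : 'negative', score };
  }
}

// A thin, framework-agnostic handler: parse input, call the service, shape output.
const makeHandler = (service) => async (body) => {
  try {
    return { status: 200, json: await service.classify(body.text) };
  } catch (err) {
    return { status: 400, json: { error: err.message } };
  }
};

// Stub model used in place of a real one (hypothetical interface: predict(text) -> number).
const stubModel = { predict: async (text) => (text.includes('great') ? 0.9 : 0.2) };
const handler = makeHandler(new SentimentService(stubModel));
handler({ text: 'great product' }).then((res) => console.log(res));
```

Because the handler takes a plain object and returns a plain object, it can be wired into Express, a Lambda, or a test without changes to the service.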

Fail Gracefully

Wrap every model.predict call in try/catch and fall back to a simple rule or cached answer if latency spikes or RAM fills. Log the event and replay it when the service is calm, so users still get a response.
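One way to implement this fallback is a wrapper that races the model call against a timeout. The sketch below is a minimal version under that assumption; `withFallback`, the slow model, and the rule-based fallback are all hypothetical names for illustration.

```javascript
// Wrap any async prediction function with a timeout and a fallback value.
// `predictFn` is whatever calls your model; the names here are illustrative.
const withFallback = (predictFn, { timeoutMs, fallback }) => async (input) => {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error('prediction timed out')), timeoutMs);
  });
  try {
    return await Promise.race([predictFn(input), timeout]);
  } catch (err) {
    console.error(`Falling back: ${err.message}`);
    return fallback(input);
  } finally {
    clearTimeout(timer);
  }
};

// Hypothetical slow model and a trivial rule-based fallback.
const slowModel = (text) =>
  new Promise((resolve) =>
    setTimeout(() => resolve({ label: 'positive', source: 'model' }), 200));
const ruleBased = (text) => ({
  label: text.includes('bad') ? 'negative' : 'neutral',
  source: 'rule',
});

const safePredict = withFallback(slowModel, { timeoutMs: 50, fallback: ruleBased });
safePredict('this is bad').then((result) => console.log(result));
```

The wrapper does not cancel the slow call; it only stops waiting for it, so a real service should also cap concurrency to avoid piling up abandoned work.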

Why CMARIX for NodeJS Development Services?

CMARIX is one of the leading AI-driven software development companies in the UK, US, Germany, and other countries. Our Node.js development services include transforming complex AI models into real-time solutions that deliver measurable results. From chatbots to recommendation systems and other AI-driven products and services, we design all applications to be scalable, reliable, and tailored to specific needs.

Here are the key advantages of choosing CMARIX to hire AI developers:

- Connect with skilled Node.js developers for AI application development.

- Build software solutions in machine learning, NLP, computer vision, and recommendation engines.

- Get AI applications that are built to match specific industries and business needs.

- Make use of the best AI libraries and frameworks for scalable development.

Final Words

Node.js developers can create high-performance, real-time AI applications. It has a rich ecosystem, event-driven architecture, and smooth JavaScript integration. This makes it ideal for developing chatbots, analytics, and AI-powered software solutions. With CMARIX’s expertise, businesses can transform complex AI models into scalable, secure, and industry-focused applications that deliver measurable results.

FAQs to Build AI Apps with Node.js

Why use Node.js for AI development?

Node.js offers speed, scalability, and an event-driven architecture ideal for real-time AI apps. It allows handling multiple AI tasks simultaneously with non-blocking I/O. JavaScript compatibility enables unified frontend and backend development for smoother workflows.

Which Node.js libraries are best for AI?

Top AI libraries include TensorFlow.js for deep learning, Brain.js for lightweight neural networks, and Natural for classical natural language processing (NLP). LangChain.js and Transformers.js enable advanced LLM and multi-modal AI implementations. ml5.js is great for rapid prototyping and educational AI projects.

How do you integrate an AI model into a Node.js app?

You can import pre-trained models via libraries like TensorFlow.js or Transformers.js. Node.js APIs can connect with AI services like OpenAI, AWS ML, or Google Cloud AI. Use asynchronous calls and microservices to handle AI inference efficiently within the app.

Can I train a deep learning model with Node.js?

Yes, libraries like TensorFlow.js allow training and fine-tuning deep learning models entirely in Node.js. GPU acceleration via tfjs-node-gpu speeds up model training for large datasets. Node.js is suitable for lightweight models or rapid prototyping but Python may still be preferred for extremely large-scale training.

What deployment options are best for Node.js AI apps?

Node.js AI apps can be deployed on cloud platforms like AWS, Google Cloud, or Azure. Serverless functions, Docker containers, or traditional VM setups work for scalable deployment. WebSocket or API-based endpoints enable real-time AI inference across web and mobile clients.

Can I build multi-modal AI apps (text + images) with Node.js?

Yes, libraries like Transformers.js and TensorFlow.js support multi-modal AI workflows. Such apps can process text, images, and audio within the same Node.js environment. The event-driven architecture of Node.js helps keep multi-modal applications responsive in real time.