Quick Summary: This blog dives into how Whisper AI Call Transcription for SaaS Apps helps teams capture every customer conversation, no matter the language or background noise. From setup to integration, it shows how to turn support calls into real-time insights, product feedback, and smarter decisions, without the manual grunt work.

Any customer service team handles hundreds of calls every week, but most of the valuable insights from those conversations disappear the moment they end. What if every customer complaint, feature request, and feedback could be captured, analyzed, and turned into actionable intelligence?

That’s exactly where Whisper AI call transcription for SaaS businesses comes into play.

If you’re running a SaaS business, every customer conversation is a goldmine of insights waiting to be discovered. Whether you’re scaling mobile app development services or refining your customer success workflow, Whisper can help transcribe calls and transform your operations.

Understanding Automatic Speech Recognition (ASR)

Automatic Speech Recognition (ASR) turns spoken words into written text. It combines machine learning and natural language processing (NLP) to work out what people say and transcribe it. By analyzing audio signals and recognizing individual words, ASR systems convert speech into readable text, making it easier to interact with technology through voice.

At a high level, ASR systems work through several key processes: audio capture where microphones convert acoustic waves into electrical signals, followed by sophisticated processing that transforms these signals into transcribed text.

ASR technology, also known as speech-to-text or audio transcription, smooths communication between computers and human users by permitting natural voice-based interactions. For SaaS companies, this technology represents a fundamental shift from manual note-taking to automated, comprehensive conversation capture that can scale with business growth.

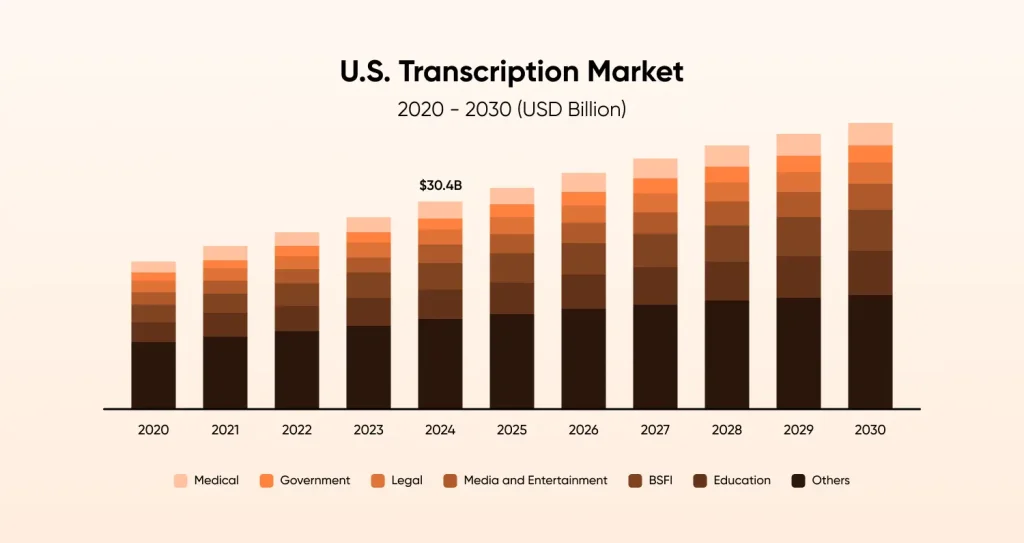

The Growing US Transcription Market

Demand for transcription services in the United States is growing steadily: the market was valued at USD 30.42 billion in 2024 and is estimated to grow at a CAGR of 5.2% from 2025 to 2030. Healthcare is the industry that uses these services most in the US.

Why SaaS Companies Are Turning to AI Call Transcription

The Hidden Challenge in Customer Support

Most SaaS companies track everything: user clicks, feature usage, subscription metrics, and conversion rates. But the richest source of customer intelligence often goes unanalyzed: what your customers actually say during support calls.

Traditional approaches to call analysis face problems such as:

- Manual note-taking during calls leads to missed details and inconsistent records

- Reviewing calls manually is time-consuming and covers only a small fraction of interactions

- Important feedback gets lost in email threads and scattered notes

- International customers may speak languages your support team doesn’t understand fluently

How Transcribing Customer Calls with Whisper Changes the Game

OpenAI’s Whisper represents a breakthrough in speech-to-text for SaaS apps. Unlike traditional transcription tools that struggle with real-world conditions, Whisper was trained on 680,000 hours of diverse audio from across the internet. This means it handles the messy realities of customer service calls: background noise, various accents, technical terminology, and multiple languages.

The result? Customer support transcription software that actually works in the real world, not just in controlled laboratory conditions.

How Does Whisper Work Behind the Scenes?

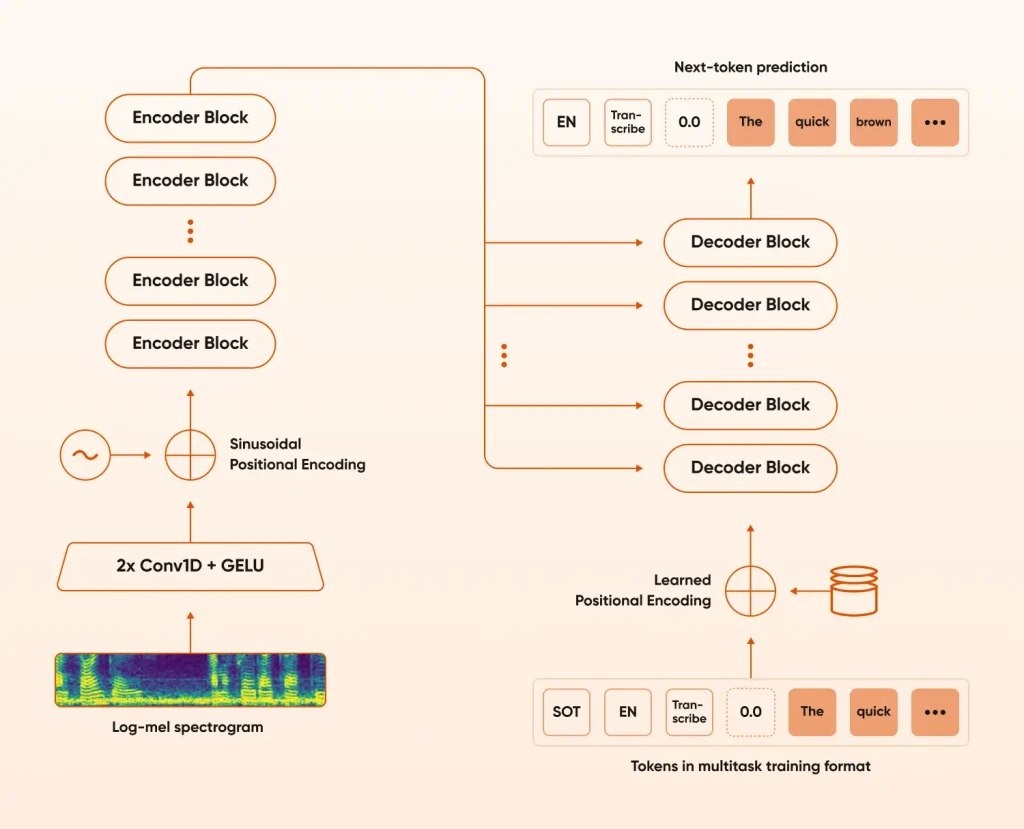

Understanding how Whisper processes audio helps explain why it copes so well with real-world call recordings. The system uses what’s called an encoder-decoder transformer architecture, which sounds complex but works in a surprisingly intuitive way.

Audio Preprocessing

The process begins with your audio input—this could be speech or any other sound. Instead of feeding raw audio directly into the neural network, Whisper first converts the waveform into a log-mel spectrogram. This spectrogram is a visual representation of the frequencies present in the audio over time, making it much easier for the model to extract meaningful features.
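To make the preprocessing step concrete, the short sketch below works out how many spectrogram frames a 30-second window produces. The constants (16 kHz sampling, a 10 ms hop, 80 mel bins, 30-second windows) are the values reported for the original Whisper models; the function and variable names are illustrative and are not part of the Whisper library.

```python
# Framing arithmetic for Whisper-style log-mel preprocessing.
# Constants follow the published Whisper setup; names are illustrative.
SAMPLE_RATE = 16_000   # audio is resampled to 16 kHz
HOP_LENGTH = 160       # 10 ms stride between frames, in samples
N_MELS = 80            # mel frequency bins per frame (original models)
CHUNK_SECONDS = 30     # audio is padded or trimmed to 30-second windows


def spectrogram_shape(seconds: float = CHUNK_SECONDS) -> tuple[int, int]:
    """Return (mel_bins, frames) for a clip of the given length."""
    n_samples = int(seconds * SAMPLE_RATE)
    n_frames = n_samples // HOP_LENGTH
    return N_MELS, n_frames


print(spectrogram_shape())  # (80, 3000)
```

In other words, each 30-second window reaches the network as an 80 x 3000 grid of log-mel values rather than 480,000 raw samples.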

Feature Extraction

Once the spectrogram is generated, it passes through a two-layer convolutional neural network (ConvNet) with GELU activation functions. These layers help the model learn local patterns and structures within the audio, such as phonemes and syllables. To ensure the model understands the order of the input features, positional encoding is added at this stage.
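As a rough illustration of what the two convolutional layers do to the sequence length, the sketch below applies the standard 1-D convolution length formula. The kernel, stride, and padding values match the open-source Whisper model definition as far as we know (kernel 3, padding 1, with stride 2 on the second layer); the helper name is our own.

```python
def conv1d_output_length(length: int, kernel: int, stride: int, padding: int) -> int:
    """Output length of a 1-D convolution with dilation 1."""
    return (length + 2 * padding - kernel) // stride + 1


frames = 3000  # spectrogram frames in one 30-second window
after_conv1 = conv1d_output_length(frames, kernel=3, stride=1, padding=1)
after_conv2 = conv1d_output_length(after_conv1, kernel=3, stride=2, padding=1)

print(after_conv1, after_conv2)  # 3000 1500
```

The second layer halves the sequence, so the encoder works on 1,500 positions per window, each covering roughly 20 ms of audio.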

Encoder

The output from the convolutional layers is then processed by a stack of transformer encoder blocks. These blocks are designed to capture both local and global context from the audio, allowing the model to understand not just individual sounds but also how they relate to each other across the entire audio sequence.

Decoder and Cross-Attention

After the encoder has distilled the audio into a rich set of features, these are passed to the decoder through a mechanism called cross-attention. The decoder can then “attend” to different parts of the encoded audio as it generates the transcription.

The decoder itself is another stack of transformer layers. It takes in a sequence of tokens (which represent words or subwords) and predicts the next token in the sequence. The decoder uses both the previously generated tokens and the encoder’s audio features to make its predictions. Positional encoding is also applied to these tokens to maintain their order.
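The idea of the decoder "attending" to the encoded audio can be sketched with plain Python. This is a toy, single-head version of scaled dot-product attention with made-up two-dimensional vectors; it is meant to show the mechanism only and is not how Whisper is implemented internally (the real model uses multi-head attention over learned projections).

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(query, encoder_keys, encoder_values):
    """Single-head scaled dot-product attention for one decoder position.

    query: vector for the token currently being generated.
    encoder_keys / encoder_values: one vector per encoded audio position.
    Returns (context_vector, attention_weights).
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in encoder_keys]
    weights = softmax(scores)
    dim = len(encoder_values[0])
    context = [sum(w * v[i] for w, v in zip(weights, encoder_values)) for i in range(dim)]
    return context, weights


# Toy example: three audio positions with two-dimensional features.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
context, weights = cross_attention([2.0, 0.0], keys, values)
print([round(w, 3) for w in weights])
```

The weights always sum to 1, and audio positions whose keys align with the query receive the largest share of attention.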

Multilingual and Multitask Capabilities

One of Whisper’s standout features is its flexibility. The decoder can be prompted with special tokens that specify the language (such as English or Spanish) and the task (transcription or translation). This means the same model can transcribe speech in multiple languages or even translate spoken audio into English—all depending on the input tokens provided.
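The special tokens mentioned above can be pictured as a short prefix placed in front of the text the decoder generates. The token spellings below follow the ones used by the open-source Whisper tokenizer; the `decoder_prompt` helper itself is hypothetical and only builds the list for illustration.

```python
def decoder_prompt(language: str, task: str = "transcribe", timestamps: bool = True) -> list[str]:
    """Build the special-token prefix that steers the decoder (illustrative helper)."""
    if task not in ("transcribe", "translate"):
        raise ValueError("task must be 'transcribe' or 'translate'")
    tokens = ["<|startoftranscript|>", f"<|{language}|>", f"<|{task}|>"]
    if not timestamps:
        tokens.append("<|notimestamps|>")
    return tokens


# Spanish audio, transcribed in Spanish:
print(decoder_prompt("es"))
# Spanish audio, translated into English text:
print(decoder_prompt("es", task="translate"))
```

Only the task token differs between the two calls, which is why a single model can cover both transcription and translation.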

Text Output

The decoder continues generating tokens one by one until it outputs an end-of-sequence token, at which point the transcription (or translation) is complete.
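The token-by-token loop can be sketched as follows. Here `next_token` is a stand-in for the real model (an assumption for illustration), and the loop simply picks one token at a time until the end-of-text token appears; Whisper's actual decoding also supports beam search and other heuristics that are not shown.

```python
from typing import Callable, List

END_OF_TEXT = "<|endoftext|>"


def greedy_decode(next_token: Callable[[List[str]], str],
                  prompt: List[str],
                  max_tokens: int = 100) -> List[str]:
    """Generate tokens one by one until END_OF_TEXT or the max_tokens cap."""
    tokens = list(prompt)
    generated = []
    for _ in range(max_tokens):
        token = next_token(tokens)  # the model sees everything generated so far
        if token == END_OF_TEXT:
            break
        tokens.append(token)
        generated.append(token)
    return generated


# A scripted "model" that replays a fixed answer, for demonstration only.
script = iter(["Hello", ",", " world", END_OF_TEXT, "never reached"])
print("".join(greedy_decode(lambda toks: next(script), ["<|startoftranscript|>"])))
```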

Performance That Scales with Your Business

When evaluating Whisper API integration for SaaS, processing speed matters for operational efficiency:

- GPU deployment: 8-30 minutes to process one hour of audio

- CPU-only deployment: 15-60 minutes per hour of audio

For most SaaS operations, this means you can process a full day’s worth of customer calls overnight, with transcripts ready for analysis the next morning.

This makes Whisper ideal not just for customer support but also for companies that need to hire SaaS developers to analyze technical user feedback, bug reports, and API-related conversations during product onboarding.
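To check whether an overnight batch is realistic for your own volume, a quick back-of-the-envelope calculation helps. The sketch below uses the processing rates quoted above; the daily call volume is a hypothetical figure you would replace with your own.

```python
def processing_hours(audio_hours: float, minutes_per_audio_hour: float, workers: int = 1) -> float:
    """Wall-clock hours needed to transcribe a batch, split evenly across workers."""
    return audio_hours * minutes_per_audio_hour / 60 / workers


daily_audio_hours = 20  # hypothetical: total recorded call time per day

for label, rate in [("GPU, best case", 8), ("GPU, worst case", 30), ("CPU, worst case", 60)]:
    hours = processing_hours(daily_audio_hours, rate)
    print(f"{label}: {hours:.1f} hours on one machine")
```

If the worst-case figure does not fit your overnight window, adding workers divides the time roughly proportionally.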

Benefits of Using Whisper Automatic Speech Recognition for SaaS Applications

Let’s look at practical ways AI call transcription for customer service creates immediate value:

Automated Documentation

Instead of agents frantically typing notes during calls, Whisper captures every detail automatically. This means agents can focus entirely on solving customer problems, while ensuring nothing important gets lost in translation.

Quality Assurance at Scale

Rather than randomly sampling 5% of calls for quality review, you can analyze 100% of interactions. Search for specific keywords, track sentiment patterns, and identify coaching opportunities across your entire support operation.

Multilingual Customer Support

With Whisper’s support for 50+ languages and its built-in speech-to-English translation, your English-speaking support team can understand customers worldwide. A customer calling in Spanish can receive the same quality of service as an English-speaking customer, which is a major reason teams choose Whisper to transcribe calls.

CRM Integration That Actually Adds Value

Traditional CRM updates rely on agents remembering to input information correctly. With Whisper speech-to-text SaaS integration, customer records update automatically with:

- Detailed conversation summaries

- Identified action items and follow-ups

- Customer sentiment indicators

- Product feedback and feature requests

- Technical issues and resolution steps

This creates a complete customer journey map that actually reflects reality, not just what agents remembered to document. This kind of automation empowers teams that hire dedicated developers to focus on building better customer-centric features rather than sifting through scattered feedback.
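One way to picture the record that gets pushed to a CRM is a small data structure that mirrors the list above. Every field name here is hypothetical; a real integration would map them onto whatever schema your CRM exposes.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class CallRecord:
    """Illustrative container for what a transcript-driven CRM update might hold."""
    call_id: str
    customer_id: str
    summary: str
    action_items: list[str] = field(default_factory=list)
    sentiment: str = "neutral"
    feature_requests: list[str] = field(default_factory=list)
    technical_issues: list[str] = field(default_factory=list)

    def to_crm_payload(self) -> str:
        """Serialize the record as JSON, ready to send to a CRM endpoint."""
        return json.dumps(asdict(self), indent=2)


record = CallRecord(
    call_id="call-001",
    customer_id="cust-42",
    summary="Customer could not export reports; workaround provided.",
    action_items=["Follow up once the export fix ships"],
    sentiment="frustrated",
    technical_issues=["Report export times out"],
)
print(record.to_crm_payload())
```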

Product Intelligence from Customer Conversations

Your customers tell you exactly what they need during support calls. Whisper makes this feedback actionable by automatically extracting:

- Feature requests and their frequency

- Pain points with current functionality

- Workarounds customers have developed

- Integration needs and use case variations

- Competitive comparisons and switching considerations

This intelligence feeds directly into product roadmap decisions, ensuring development efforts align with actual customer needs rather than assumptions.
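A very simple starting point for measuring feature-request frequency is keyword matching over transcripts. The keyword list below is hypothetical, and plain keyword matching will miss paraphrases and catch false positives; treat it as a baseline sketch rather than a finished extraction method.

```python
import re
from collections import Counter

# Hypothetical mapping from keywords to the feature they indicate.
FEATURE_KEYWORDS = {
    "sso": "Single sign-on",
    "dark mode": "Dark mode",
    "csv export": "CSV export",
}


def count_feature_requests(transcripts: list[str]) -> Counter:
    """Count how many calls mention each feature (at most once per call)."""
    counts = Counter()
    for text in transcripts:
        lowered = text.lower()
        for keyword, label in FEATURE_KEYWORDS.items():
            # Word boundaries avoid matching "sso" inside words such as "lesson".
            if re.search(rf"\b{re.escape(keyword)}\b", lowered):
                counts[label] += 1
    return counts


calls = [
    "We really need SSO for our team.",
    "Is there a dark mode? Also SSO please, SSO is a blocker.",
    "The onboarding lesson was helpful.",
]
print(count_feature_requests(calls).most_common())
```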

Step-by-Step Whisper ASR Implementation Guide

This guide provides an overview of how SaaS companies can use OpenAI’s Whisper Automatic Speech Recognition (ASR) for transcribing audio to text, with clear explanations for each step.

1. Install Required Libraries

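First, install the Whisper library. You can do this using pip, either directly from the GitHub repository:

bash

pip install git+https://github.com/openai/whisper.git

Or from PyPI, where the package is published as openai-whisper:

bash

pip install -U openai-whisper

Either command downloads and installs Whisper and its Python dependencies so you can use it in your scripts. Whisper also relies on the ffmpeg command-line tool being installed on your system to read audio files.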

2. Load the Whisper Model

OpenAI Whisper comes in several model sizes (tiny, base, small, medium, large). Larger models are more accurate but need more resources.

python

import whisper

model = whisper.load_model("base")  # You can use "tiny", "small", "medium", or "large"

This code imports the Whisper library and loads a pre-trained model into memory.

3. Transcribe an Audio File

Now use the loaded model to transcribe an audio file (for example, “audio.mp3”):

python

result = model.transcribe("audio.mp3")

print(result["text"])

This reads your audio file, processes it, and prints the recognized text.
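Besides the full text, the dictionary returned by `model.transcribe` also carries a `segments` list with start and end times, which is handy for call review. The sketch below formats such segments as timestamped lines; `sample_result` is a hand-written stand-in so the example runs without a model, and the helper names are our own.

```python
def format_timestamp(seconds: float) -> str:
    """Render seconds as HH:MM:SS (fractions of a second are dropped)."""
    minutes, secs = divmod(int(seconds), 60)
    hours, minutes = divmod(minutes, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"


def segments_to_lines(result: dict) -> list[str]:
    """Turn a transcription result into '[start - end] text' lines."""
    lines = []
    for seg in result.get("segments", []):
        start, end = format_timestamp(seg["start"]), format_timestamp(seg["end"])
        lines.append(f"[{start} - {end}] {seg['text'].strip()}")
    return lines


# Stand-in for the dictionary returned by model.transcribe("audio.mp3").
sample_result = {
    "text": " Hi, thanks for calling. My export keeps failing.",
    "segments": [
        {"start": 0.0, "end": 3.2, "text": " Hi, thanks for calling."},
        {"start": 3.2, "end": 75.5, "text": " My export keeps failing."},
    ],
}

for line in segments_to_lines(sample_result):
    print(line)
```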

4. Use the Command Line (Optional)

If you prefer not to write code, you can also use Whisper directly from the terminal:

bash

whisper audio.mp3 --model base

This command transcribes “audio.mp3” using the base model, prints the result in your terminal, and saves transcript files (such as .txt and .srt) to the current directory.

5. Use Hugging Face Transformers Pipeline (Optional)

You can also use Whisper via the Hugging Face Transformers pipeline for a more streamlined approach:

python

from transformers import pipeline

transcriber = pipeline("automatic-speech-recognition", model="openai/whisper-base")

result = transcriber("audio.mp3")

print(result["text"])

This provides an alternative way to run Whisper with minimal setup, and is especially useful if you’re already using Transformers for other tasks. For recordings longer than 30 seconds, you may need to pass chunk_length_s=30 when creating the pipeline.

6. Real-Time or Batch Transcription (Optional)

- Batch: Transcribe multiple files in one run by passing several filenames to the whisper command or by looping over them in Python.

- Real-Time: Advanced users can use libraries like pyaudio to stream audio and process it in real time.
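For the batch case, a minimal sketch is to load the model once and loop over a folder of recordings. The folder layout, the extension list, and the helper names are assumptions for illustration; `whisper.load_model` and `model.transcribe` are the same calls used in the steps above.

```python
from pathlib import Path

AUDIO_EXTENSIONS = {".mp3", ".wav", ".m4a", ".mp4"}


def is_audio_file(path: Path) -> bool:
    """True if the file extension looks like a supported audio format."""
    return path.suffix.lower() in AUDIO_EXTENSIONS


def transcribe_folder(folder: str, model_name: str = "base") -> dict[str, str]:
    """Transcribe every audio file in a folder and return {filename: text}."""
    import whisper  # imported here so the helper above works without Whisper installed

    model = whisper.load_model(model_name)  # load once, reuse for every file
    transcripts = {}
    for path in sorted(Path(folder).iterdir()):
        if path.is_file() and is_audio_file(path):
            transcripts[path.name] = model.transcribe(str(path))["text"]
    return transcripts
```

Calling `transcribe_folder("recordings/")` would then return one transcript per file. Loading the model once matters because model loading is usually much slower than switching files.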

Key Points to Remember

- Supported Formats: Common formats like mp3, wav, m4a, etc. are supported.

- Model Choice: Larger models are more accurate but slower and require more memory.

- No API Key Needed: For open-source Whisper, you don’t need an API key—everything runs locally.

- Translation: Whisper can also translate non-English audio to English with the right settings.
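The "right settings" for translation are keyword arguments to `transcribe`. The sketch below builds them with a small hypothetical helper; `task="translate"` and `language` are options of the open-source Whisper package, while the function names and the default model choice are our own.

```python
from typing import Optional


def transcribe_options(source_language: Optional[str] = None, to_english: bool = False) -> dict:
    """Build keyword arguments for model.transcribe (illustrative helper)."""
    options = {"task": "translate" if to_english else "transcribe"}
    if source_language is not None:
        options["language"] = source_language  # omit to let Whisper detect the language
    return options


def translate_call(path: str, model_name: str = "small") -> str:
    """Translate a Spanish recording into English text."""
    import whisper  # lazy import: only needed when actually transcribing

    model = whisper.load_model(model_name)
    return model.transcribe(path, **transcribe_options("es", to_english=True))["text"]


print(transcribe_options("es", to_english=True))
```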

Summary Table

| Step | What You Do | Example Code/Command |
| --- | --- | --- |
| Install | Install Whisper | pip install -U openai-whisper |
| Load Model | Load a model | model = whisper.load_model("base") |
| Transcribe | Transcribe audio | model.transcribe("audio.mp3") |
| CLI | Use command line | whisper audio.mp3 --model base |
| HF Pipeline | Use Hugging Face pipeline | pipeline("automatic-speech-recognition", model="openai/whisper-base") |
| Real-time | Real-time or batch transcription | Use pyaudio or loop over files |

How to Plan Out Your Whisper ASR Rollout Strategy

Phase 1: Getting Started (Weeks 1-2)

Setting Up Your Whisper Integration

The technical setup for Whisper API integration is straightforward, but planning makes the difference between a successful implementation and a frustrating experience.

Technical Requirements:

- Audio files in MP3, MP4, WAV, or M4A format

- Maximum file size of 25 MB per upload when using OpenAI’s hosted API (longer calls need automatic chunking)

- API key from OpenAI (not required for self-hosted, open-source Whisper)

- Storage system for audio files and transcripts

- Integration endpoints for your existing business systems
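The 25 MB upload limit is the requirement that most often needs code. The sketch below plans equal time ranges that should each stay under the limit, assuming a roughly constant bitrate, and shows a minimal call to the hosted API. `plan_chunks` and its safety margin are hypothetical; the actual cutting would be done with an audio tool such as ffmpeg (not shown). The API call assumes the official `openai` Python package and an `OPENAI_API_KEY` environment variable.

```python
import math

MAX_UPLOAD_BYTES = 25 * 1024 * 1024  # 25 MB limit from the requirements above


def plan_chunks(file_bytes: int, duration_seconds: float, safety: float = 0.9) -> list[tuple[float, float]]:
    """Return (start, end) time ranges, in seconds, that should each fit the limit.

    Assumes the file size grows roughly linearly with duration.
    """
    budget = MAX_UPLOAD_BYTES * safety
    n_chunks = max(1, math.ceil(file_bytes / budget))
    chunk_len = duration_seconds / n_chunks
    return [(i * chunk_len, min(duration_seconds, (i + 1) * chunk_len)) for i in range(n_chunks)]


def transcribe_with_api(path: str) -> str:
    """Send one audio file (under 25 MB) to the hosted Whisper API."""
    from openai import OpenAI  # lazy import; requires the `openai` package

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    with open(path, "rb") as audio_file:
        response = client.audio.transcriptions.create(model="whisper-1", file=audio_file)
    return response.text


# A 60 MB, one-hour recording needs three uploads of about 20 minutes each.
print(plan_chunks(60 * 1024 * 1024, 3600))
```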

Initial Testing Strategy: Start with 50-100 representative calls that reflect your typical audio quality and customer demographics. This baseline helps you understand accuracy rates and identify any domain-specific terminology that needs attention.
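A common way to put a number on "accuracy" during this baseline test is word error rate (WER): the word-level edit distance between a human reference transcript and Whisper's output, divided by the number of reference words. The sketch below is a plain-Python version with simple lowercasing and whitespace splitting; production evaluations usually normalize punctuation and numbers as well.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference word count."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # Dynamic-programming edit distance, keeping only the previous row.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # match or substitution
        previous = current
    return previous[-1] / len(ref)


print(word_error_rate("please reset my password", "please reset the password"))  # 0.25
```

Averaging this score over your 50-100 test calls gives a baseline you can compare against after each change to the pipeline.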

Phase 2: Integration Development (Weeks 3-4)

Building Your Processing Pipeline

The key to a successful automated call transcription implementation lies in robust post-processing. Raw Whisper output is excellent, but business-ready transcripts require additional intelligence.

Essential Post-Processing Steps:

- Sentence segmentation and punctuation correction

- Speaker identification (using tools like Pyannote-audio)

- Custom terminology correction for your industry

- Sentiment scoring and keyword extraction

- Confidence scoring for quality assurance
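Two of these steps, terminology correction and confidence scoring, can be sketched with the standard library alone. The correction table is hypothetical. The `avg_logprob` and `no_speech_prob` fields are part of each segment in the open-source Whisper output, and the thresholds below mirror that package's default settings, but you should tune them on your own calls.

```python
import re

# Hypothetical corrections for terms the model tends to mishear.
TERM_FIXES = {"web hook": "webhook", "sas": "SaaS"}


def fix_terminology(text: str, fixes: dict = TERM_FIXES) -> str:
    """Replace known mis-transcriptions, matching whole words case-insensitively."""
    for wrong, right in fixes.items():
        text = re.sub(rf"\b{re.escape(wrong)}\b", right, text, flags=re.IGNORECASE)
    return text


def needs_review(segment: dict, min_avg_logprob: float = -1.0, max_no_speech_prob: float = 0.6) -> bool:
    """Flag segments the model was unsure about for human review."""
    return (segment["avg_logprob"] < min_avg_logprob
            or segment["no_speech_prob"] > max_no_speech_prob)


segments = [
    {"text": " Our sas web hook fails.", "avg_logprob": -0.3, "no_speech_prob": 0.02},
    {"text": " (inaudible)", "avg_logprob": -1.4, "no_speech_prob": 0.10},
]
for seg in segments:
    flag = "REVIEW" if needs_review(seg) else "ok"
    print(flag, fix_terminology(seg["text"].strip()))
```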

Business System Integration: Connect your transcription pipeline to existing tools:

- CRM platforms for automatic record updates

- Help desk systems for ticket creation

- Analytics dashboards for management insights

- Compliance systems for regulatory requirements

Phase 3: Team Training and Rollout (Weeks 5-6)

Preparing Your Team

The most sophisticated technology fails without proper change management. Your support team needs to understand how transcription enhances their work rather than replacing their judgment.

Training Focus Areas:

- How to use transcripts for better customer service

- Quality assurance processes using transcription data

- Privacy and compliance considerations

- Feedback mechanisms for improving accuracy

Gradual Rollout Strategy:

- Start with internal calls and team meetings

- Expand to non-critical customer interactions

- Include more sensitive calls as confidence builds

- Implement human review processes for high-stakes conversations

Hire AI Developers from CMARIX To Handle Whisper’s Limitations

Just like any other tool or technology, Whisper is not without its limitations. It is a general-purpose speech transcription and translation model that may need tuning and supporting tools for your use case. If you are looking for professional AI development solutions to ensure accurate and smooth integration of Whisper in your business processes, consider partnering with CMARIX. Here are the main challenges of Whisper AI integration and their possible solutions:

Real-Time Processing Challenges

Whisper doesn’t support native real-time streaming, which limits some use cases. However, creative SaaS implementations work around this limitation:

Hybrid Approaches:

- Use faster ASR systems for real-time agent assistance

- Apply Whisper for post-call analysis and comprehensive documentation

- Implement near-real-time processing for calls under 30 seconds

Third-Party Solutions: Several services now offer real-time Whisper implementations, making live transcription increasingly viable for customer service applications.
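One common way to approximate live transcription with a batch model is to cut the incoming audio into short overlapping windows and transcribe each window as soon as it is complete. The sketch below only computes the window boundaries; the 30-second window and 5-second overlap are illustrative defaults, and stitching the overlapping text back together is left out.

```python
def chunk_windows(total_seconds: float, window: float = 30.0, overlap: float = 5.0) -> list[tuple[float, float]]:
    """Return overlapping (start, end) windows covering the audio."""
    if overlap >= window:
        raise ValueError("overlap must be smaller than the window")
    step = window - overlap
    windows = []
    start = 0.0
    while start < total_seconds:
        end = min(start + window, total_seconds)
        windows.append((start, end))
        if end >= total_seconds:
            break
        start += step
    return windows


print(chunk_windows(70))  # [(0.0, 30.0), (25.0, 55.0), (50.0, 70)]
```

The overlap gives each window some context from the previous one, which reduces the chance of cutting a word in half at a boundary. Shorter windows lower latency at the cost of less context per transcription.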

Speaker Identification Solutions

Whisper doesn’t distinguish between multiple speakers natively, but integration with specialized tools solves this challenge:

Recommended Diarization Tools:

| Speaker Diarization Tool | Description | Best For |
| --- | --- | --- |
| Pyannote-audio | An open-source Python library for speaker diarization and voice activity detection. It offers full customization and control for advanced AI workflows. | AI researchers, developers, and startups needing custom, flexible solutions. |
| AssemblyAI | A commercial API with built-in speaker diarization, sentiment analysis, and topic detection. Easy to integrate with minimal setup. | SaaS platforms and dev teams seeking fast, reliable, and scalable integration. |
| Rev AI | An enterprise-grade speech recognition service with strong accuracy, built-in diarization, and compliance features. | Enterprises in regulated industries that need high accuracy and auditability. |

The combination of Whisper’s transcription accuracy with dedicated diarization creates a complete solution for multi-party customer calls.
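Combining the two outputs usually comes down to matching time ranges. The sketch below labels each Whisper segment with the speaker whose diarization turn overlaps it the most. The `(start, end, speaker)` tuple format for turns is an assumption for illustration; each diarization tool has its own output format that you would convert first.

```python
def overlap(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Length in seconds of the intersection of two time ranges."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def assign_speakers(segments: list[dict], turns: list[tuple[float, float, str]]) -> list[dict]:
    """Attach a 'speaker' label to each segment based on maximum overlap."""
    labeled = []
    for seg in segments:
        best_speaker, best_overlap = "unknown", 0.0
        for turn_start, turn_end, speaker in turns:
            shared = overlap(seg["start"], seg["end"], turn_start, turn_end)
            if shared > best_overlap:
                best_speaker, best_overlap = speaker, shared
        labeled.append({**seg, "speaker": best_speaker})
    return labeled


whisper_segments = [
    {"start": 0.0, "end": 4.0, "text": "Thanks for calling, how can I help?"},
    {"start": 4.0, "end": 9.0, "text": "Hi, my login keeps failing."},
]
speaker_turns = [(0.0, 4.5, "agent"), (4.5, 9.0, "customer")]

for seg in assign_speakers(whisper_segments, speaker_turns):
    print(f"{seg['speaker']}: {seg['text']}")
```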

Managing Accuracy Expectations

While Whisper achieves 92% accuracy across general scenarios, certain conditions can affect performance:

Accuracy Optimization Tips:

- Normalize audio levels before processing

- Use noise reduction filters when possible

- Maintain custom vocabulary lists for industry terms

- Implement confidence scoring for quality control

- Plan human review for critical interactions
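Two of these tips are easy to prototype. The sketch below shows peak normalization on a list of float samples and a helper that turns a vocabulary list into a text hint. With the open-source package, such a hint can be passed to `model.transcribe` through its `initial_prompt` argument to nudge the model toward your spellings. The helper names and the "Glossary:" wording are our own choices, not a required format.

```python
def peak_normalize(samples: list[float], target_peak: float = 0.9) -> list[float]:
    """Scale samples so the loudest one reaches target_peak (range -1.0 to 1.0)."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # silence or empty input: nothing to scale
    gain = target_peak / peak
    return [s * gain for s in samples]


def vocabulary_prompt(terms: list[str]) -> str:
    """Build a short text hint listing domain terms."""
    return "Glossary: " + ", ".join(terms)


quiet_call = [0.05, -0.30, 0.12]
print(peak_normalize(quiet_call))
print(vocabulary_prompt(["webhook", "SSO", "CMARIX"]))
```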

How Whisper Compares to Other Enterprise ASR Tools

| Feature / Provider | OpenAI Whisper | Google Speech-to-Text | Microsoft Azure Speech | AWS Transcribe |
| --- | --- | --- | --- | --- |
| Real-Time Transcription | Not natively supported | Supported | Supported | Supported |
| Multilingual Support | 50+ languages with translation | 125+ languages | 90+ languages | Limited to major global languages |
| Speaker Diarization | Not built-in (requires external tools) | Built-in | Built-in | Built-in |
| Accuracy in Noisy Audio | High (trained on diverse audio) | Variable, depends on environment | Customizable models available | Lower in noisy environments |
| Custom Vocabulary/Model | No built-in custom vocabulary (prompt hints or fine-tuning when self-hosted) | Supported | Extensive customization | Custom vocabulary support |
| Deployment Model | API (batch, async) or self-hosted | API (real-time and batch) | API (real-time and batch) | API (real-time and batch) |
| Best Use Case | SaaS teams needing multilingual, high-accuracy transcription | Real-time transcription at scale | Enterprise workflows on Microsoft stack | AWS-native apps with English support |

Whisper vs. Google Cloud Speech-to-Text vs. Microsoft Azure Speech vs. AWS Transcribe

When evaluating customer support transcription software options, consider these factors:

Google Cloud Speech-to-Text

- Strengths: Real-time streaming, speaker diarization, custom models

- Weaknesses: Higher cost, complex setup, requires ML expertise

- Best for: Large enterprises with dedicated AI teams

Microsoft Azure Speech

- Strengths: PII redaction, extensive customization, enterprise integration

- Weaknesses: Steep learning curve, premium pricing

- Best for: Microsoft-centric technology stacks

AWS Transcribe

- Strengths: Contact center analytics, call categorization, medical specialization

- Weaknesses: Limited multilingual support, AWS ecosystem dependency

- Best for: AWS-native applications with English-only requirements

OpenAI Whisper

- Strengths: Cost-effective, multilingual excellence, easy integration, high accuracy

- Weaknesses: Limited real-time support, requires additional tools for speaker separation

- Best for: SaaS companies prioritizing accuracy, cost efficiency, and multilingual support

ROI Analysis: Quantifying Business Impact

The financial benefits of implementing AI call transcription extend beyond obvious cost savings:

Direct Cost Reduction

- Eliminate 8-15 minutes of post-call administrative work per interaction

- Reduce quality assurance labor costs by 60-80%

- Decrease training time for new agents through better conversation examples

- Lower compliance risk through comprehensive documentation
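The first bullet translates directly into a simple estimate. The sketch below converts minutes saved per call into agent hours per month; the call volume is a hypothetical input, and the 8 and 15 minute figures are the range quoted above.

```python
def admin_hours_saved(calls_per_month: int, minutes_saved_per_call: float) -> float:
    """Agent hours freed up per month by removing post-call admin work."""
    return calls_per_month * minutes_saved_per_call / 60


calls = 2_000  # hypothetical monthly call volume
low = admin_hours_saved(calls, 8)
high = admin_hours_saved(calls, 15)
print(f"Estimated savings: {low:.0f} to {high:.0f} agent hours per month")
```

Multiplying the result by your loaded hourly cost per agent gives a first-order figure to compare against transcription and infrastructure costs.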

Revenue Impact

- Improve customer retention through better issue tracking and resolution

- Accelerate product development with direct customer feedback analysis

- Enhance sales processes through conversation intelligence

- Increase upsell opportunities by identifying usage patterns and needs

Competitive Advantages

- Faster response times through better context understanding

- Proactive customer outreach based on conversation analysis

- Superior service quality through comprehensive interaction tracking

- Global customer support without language barriers

Security Best Practices for Whisper AI Call Transcription in SaaS

Data Protection Best Practices

Implementing SaaS customer call transcription tools requires careful attention to privacy and security:

Technical Safeguards:

- Encrypt audio files in transit and at rest

- Implement automatic PII detection and redaction

- Maintain audit logs for all transcription activities

- Use secure API connections with proper authentication
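As a starting point for PII redaction, simple patterns can mask the most obvious identifiers before transcripts are stored. The two regular expressions below are rough, illustrative patterns for email addresses and phone numbers; they will miss some formats and occasionally over-match, so regulated workloads typically need a dedicated PII detection tool and human spot checks.

```python
import re

# Rough, illustrative patterns; not exhaustive.
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
PHONE = re.compile(r"(?<!\w)\+?\d[\d\s().-]{7,}\d(?!\w)")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


print(redact_pii("Reach me at jane.doe@example.com or +1 (555) 010-9999."))
```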

Regulatory Compliance:

- GDPR compliance for European customers (data minimization, right to deletion)

- HIPAA considerations for healthcare SaaS applications

- Industry-specific requirements (financial services, education, legal)

- State and local privacy regulations

Customer Trust:

- Clear call recording notifications and consent processes

- Transparent data usage policies

- Customer access to their own conversation transcripts

- Option to opt out of transcription services

Advanced Whisper AI Use Cases and Future Opportunities

Predictive Customer Intelligence

As your transcription database grows, advanced analytics become possible:

- Churn Prediction: Analyze conversation patterns to identify at-risk customers before they cancel. Specific language patterns, sentiment changes, and topic combinations often predict churn weeks in advance.

- Product-Market Fit Analysis: Track how customers describe your product, what alternatives they consider, and which features drive the most satisfaction or frustration.

- Competitive Intelligence: Understand how customers compare your solution to competitors, what drives switching decisions, and where you have sustainable advantages.

Integration with AI Development Solutions

Forward-thinking SaaS companies integrate call transcription with broader artificial intelligence software development initiatives to turn conversations into automated intelligence workflows and predictive tools.

- Custom Model Training: Use transcription data to train customer service chatbots and virtual assistants that understand your specific customer language and common issues.

- Automated Response Systems: Build AI tools that suggest responses to agents based on similar historical conversations and successful resolution patterns.

- Business Intelligence Automation: Create tools that automatically generate executive reports, trend analysis, and operational insights from customer conversation data.

How to Get Started with Whisper for SaaS Transcription

Week 1: Foundation and Assessment

Days 1-2: Current State Analysis

- Audit your existing call recording infrastructure

- Identify integration points with current systems

- Document current manual processes for call analysis

- Calculate baseline metrics for comparison

Days 3-4: Technical Preparation

- Set up OpenAI API access and test basic functionality

- Configure audio storage and processing infrastructure

- Test Whisper accuracy with sample calls from your environment

- Identify post-processing requirements

Days 5-7: Planning and Goal Setting

- Define success metrics and KPIs

- Select initial call types for pilot program

- Plan integration timeline and resource requirements

- Prepare change management strategy for your team

Week 2: Implementation and Testing

- Technical Integration: Build your processing pipeline with proper error handling, retry logic, and monitoring. Focus on reliability over speed during initial implementation.

- Quality Assurance: Test extensively with real customer calls, not just clean audio samples. Identify accuracy issues early and plan mitigation strategies.

- Team Preparation: Begin training key team members on new workflows and capabilities. Address concerns about AI replacing human judgment by emphasizing augmentation over replacement.
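The retry logic mentioned under Technical Integration can start as small as the wrapper below, which retries a failing operation with exponential backoff. The attempt count and delays are illustrative defaults, and `operation` stands for whatever call your pipeline makes (for example, a transcription request); in production you would usually catch only the error types you know to be transient.

```python
import time


def with_retries(operation, attempts: int = 3, base_delay: float = 1.0, sleep=time.sleep):
    """Run operation(), retrying on failure with delays of 1x, 2x, 4x... base_delay."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(attempts):
        try:
            return operation()
        except Exception as error:  # narrow this to transient errors in real code
            last_error = error
            if attempt < attempts - 1:
                sleep(base_delay * 2 ** attempt)
    raise last_error


# Demonstration with an operation that fails twice, then succeeds.
state = {"calls": 0}

def flaky_transcription():
    state["calls"] += 1
    if state["calls"] < 3:
        raise RuntimeError("temporary failure")
    return "transcript text"

print(with_retries(flaky_transcription, base_delay=0.01))
```

Passing `sleep` as a parameter keeps the wrapper easy to test, since a test can record the delays instead of actually waiting.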

Weeks 3-4: Pilot Launch and Optimization

- Controlled Rollout: Start with less critical call types and gradually expand scope based on results and team confidence.

- Continuous Improvement: Gather feedback from agents and managers, measure against baseline metrics, and refine processes based on real-world usage.

- Scale Planning: Based on pilot results, develop plans for full production deployment and advanced feature implementation.

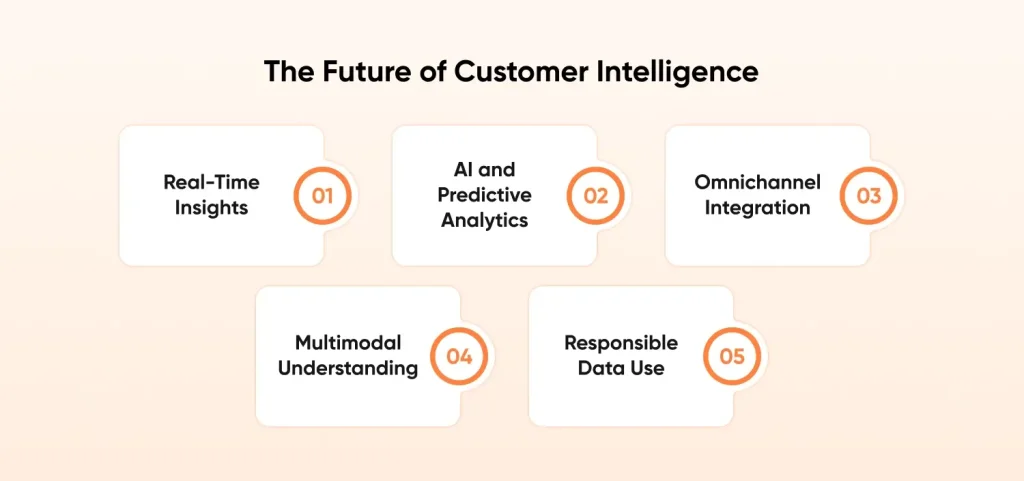

The Future of Customer Intelligence

Real-Time Insights

Businesses can now understand what customers are saying and feeling as interactions happen. This lets them respond right away, solve problems faster, and offer more personalized support.

AI and Predictive Analytics

With the help of AI, businesses can sift through huge amounts of data to find trends and predict what customers might need next. This allows them to be proactive—solving issues before they arise or spotting new opportunities early.

Omnichannel Integration

Today’s tools pull together data from phone calls, emails, social media, and other platforms to give a complete picture of the customer journey. This means customers get a consistent, seamless experience no matter how they reach out.

Multimodal Understanding

AI is getting better at picking up on more than just words—it’s learning to understand tone, visuals, and even video. This deeper level of understanding helps businesses better read emotions and fine-tune their responses.

Responsible Data Use

As companies collect more detailed customer insights, it’s more important than ever to handle that data responsibly. Being transparent and ethical with data builds trust—and that’s the foundation of strong, lasting customer relationships.

Companies implementing robust transcription capabilities today position themselves to leverage these advanced features as they become available.

Making the Decision: Is Whisper Right for Your SaaS?

Transcribe customer calls with Whisper if you:

- Handle customer calls in multiple languages

- Need cost-effective transcription for high call volumes

- Want high accuracy without extensive technical complexity

- Operate in environments with varying audio quality

- Seek integration flexibility with existing systems

- Prioritize customer privacy and data control

Whisper may not be the best fit if you:

- Require real-time transcription for live agent assistance

- Need built-in speaker diarization without additional tools

- Operate exclusively in controlled, high-quality audio environments

- Have regulatory requirements that prevent cloud-based processing and cannot self-host the open-source model

- Need extensive customization for highly specialized terminology

Final Words

Every time a customer picks up the phone, there is a chance to learn something valuable that could help your business grow. With tools like Whisper AI, SaaS companies can easily and affordably turn those conversations into written text. This makes it much easier to identify what customers enjoy, what they need, and where they might be running into problems.

Are you ready to transform your customer conversations into competitive intelligence? Start your Whisper AI implementation today and discover what your customers have been trying to tell you all along.

FAQs on Whisper AI Call Transcription for SaaS

Can Whisper be used for real-time streaming ASR?

Whisper wasn’t originally designed for real-time streaming but can be adapted with chunking techniques and optimized implementations. Projects like faster-whisper and WhisperLive enable near real-time performance, though with some latency trade-offs.

What Is the Difference between Streaming and Non-streaming ASR?

Streaming ASR processes audio continuously as it’s received, providing immediate partial results and low latency. Non-streaming ASR waits for complete audio input before processing, offering higher accuracy but requiring the full audio file upfront.

Does Whisper Work In Real Time?

Standard Whisper has significant latency (several seconds), which makes true real-time transcription difficult. However, optimized versions like faster-whisper with GPU acceleration and audio chunking can achieve near real-time performance for many applications.

What Are the Limitations of Whisper for Real-Time Applications?

Main limitations include processing latency (2-5 seconds), memory usage with larger models, and lack of native streaming support. GPU requirements for faster inference and potential accuracy drops when using smaller models for speed also pose challenges.

What Languages Does Whisper ASR Support?

Whisper supports dozens of languages, including English, Spanish, French, German, Chinese, Japanese, Arabic, and Hindi. It can automatically detect the input language, or you can specify it explicitly for better performance and accuracy.