The integration of modern web applications with machine learning has transformed how users experience digital assets. Building a browser-based AI application is a great way to achieve universal accessibility, eliminate installation barriers, and enable seamless updates. Modern browsers support libraries like TensorFlow.js and ONNX.js, which make it possible to run custom AI models directly in client environments, often with little cost to performance or user experience.

Understanding Browser-Based Applications

Browser-based applications operate entirely within web browsers using standard technologies like HTML, CSS, and JavaScript. Unlike desktop applications requiring local installation, these applications run through web browsers and access device capabilities through modern web APIs. This approach enables cross-platform compatibility and immediate accessibility from any internet-connected device.

What Defines a Browser-Based App?

The main difference between browser-based apps and native applications comes down to where and how they run. Browser-based apps operate inside a web browser, which adds a layer of security by isolating them from your device’s core system, but it can also mean some performance trade-offs.

That said, newer technologies like WebAssembly and WebGPU are rapidly shrinking that gap, bringing near-native speed and performance to web apps.

With the rise of PWAs, browser-based applications can now offer features that feel a lot like native apps. You can install them on your home screen, use them offline, and enjoy smooth, app-like experiences, all from the browser. Together, these advances make web apps more powerful and user-friendly than ever before.

Key Characteristics of Browser-Based Applications:

- Written using HTML, CSS, and JavaScript

- Hosted on the web and accessible through browsers like Chrome, Firefox, and Safari

- Often responsive and mobile-friendly

Key Differences Between Browser-Based Apps and Desktop or Native Apps

Browser-based applications offer immediate deployment and updates without user intervention, while native apps provide deeper system integration. Browser apps face memory constraints but benefit from universal compatibility. The trade-off between performance and accessibility defines most architectural decisions in browser AI development.

Examples of Browser-Based AI-Powered Apps

Popular examples demonstrate the practical viability of running complex AI operations in browsers. Here are a few real-world browser-based AI implementations from popular companies:

| Application | AI Features | Browser-Based Value |
| --- | --- | --- |
| Canva | Design suggestions, background removal, image enhancement | Handles millions of design tasks directly in the browser |
| Photopea | Object detection, auto retouching, style transfer | Full-featured photo editor without installation |
| Photoshop Express | Auto-enhancement, noise reduction, background blur | Brings Photoshop-like tools to browser users |
| Figma | AI-assisted design, layout suggestions, content generation | Real-time collaboration and AI design tools in the browser |
| Remove.bg | Instant background removal using computer vision | One-click background editing in the browser |
| KREA | AI-powered generative design for visual content | Diffusion-model image generation accessed through the browser |
| Uizard | Converts sketches to UI designs using computer vision and NLP | Rapid prototyping using AI from inside a browser |
| Runway ML (Web) | Text-to-video, background replacement, generative fill | Advanced media editing with web-first AI tools |
| Designify | Automated image enhancement, lighting, shadows | AI-driven photo enhancement without software downloads |

How to Plan the Development of Your AI-Powered Applications?

Successful AI application development begins with clearly identifying the specific problem your application will solve. Whether automating image recognition, providing natural language processing, or enabling predictive analytics, defining your core use case shapes every subsequent development decision.

Identifying the Problem Your App Will Solve

Consider user pain points that AI can address more effectively than traditional approaches. Define clear success metrics for your AI features to measure effectiveness post-launch. Research existing solutions to identify gaps your custom AI model integration can fill uniquely.

You can ask questions such as:

- What manual or repetitive tasks could be automated to save users time?

- Where do users often struggle or drop off during their workflow?

- Are there patterns or predictions users need that are hard to detect without AI?

- What types of decisions do users make that could be improved with intelligent recommendations?

For instance, if you’re building a real-time face mask detector, the goal is to identify whether someone is wearing a mask using webcam input.

Defining User Flows and Data Inputs for AI

Map out the user flows and data inputs for your AI system. Define how users will input data, what preprocessing steps are required, and how AI outputs will be presented. This planning phase prevents costly architectural changes during development and ensures smooth integration between human interaction and machine intelligence.

You can ask questions such as:

- Will users upload images?

- Will the model run in real time (e.g., webcam, voice input)?

- What output do you need to show (text, image, action)?

Pro Tip: You can make use of flowchart tools such as Draw.io and Whimsical to visualize your app logic.

Choosing Between Client-Side and Server-Side Processing

| Aspect | Client-Side Processing | Server-Side Processing |
| --- | --- | --- |
| Performance | Limited by device/browser capabilities | High computational power available |
| Latency | Low (instant response, no network delay) | Higher (due to network round-trips) |
| Privacy | Data stays on user’s device | Data must be sent to server |
| Cost | Lower operational costs (no server inference load) | Higher server and infrastructure costs |
| Model Size Limits | Constrained by browser memory | Can handle larger, more complex models |
| Best Use Cases | Lightweight, real-time interactions | Heavy processing, large-scale inference |

The choice between client-side and server-side AI processing significantly impacts user experience and costs. Client-side processing offers privacy benefits and reduced server costs but faces browser memory limitations. Server-side processing offers greater computational power, but it can introduce latency and raise privacy concerns.

Many successful apps use a hybrid approach, combining local processing for quick, real-time responses with server-side computing for more complex tasks. When deciding on the right balance, it’s important to consider the capabilities of your users’ devices and the nature of your application.
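As a rough illustration of the hybrid approach, the sketch below decides per task whether to run inference locally or on a server. The interfaces, the function name, and the memory threshold are assumptions made for this example; only the 10 MB model-size rule of thumb comes from this article.

```typescript
// Hypothetical descriptions of a task and a device; these names are illustrative only.
interface InferenceTask {
  modelSizeMB: number; // size of the converted model files
}

interface DeviceInfo {
  memoryGB: number; // e.g. read from navigator.deviceMemory where the browser exposes it
}

type InferenceTarget = "client" | "server";

// The 10 MB figure follows the rule of thumb used in this article's hosting section.
// The memory floor is an assumption; tune both for your own app.
const MAX_CLIENT_MODEL_MB = 10;
const MIN_CLIENT_MEMORY_GB = 4;

function chooseInferenceTarget(task: InferenceTask, device: DeviceInfo): InferenceTarget {
  const modelFits = task.modelSizeMB <= MAX_CLIENT_MODEL_MB;
  const deviceIsCapable = device.memoryGB >= MIN_CLIENT_MEMORY_GB;
  // Run locally only when both conditions hold; otherwise fall back to the server.
  return modelFits && deviceIsCapable ? "client" : "server";
}
```

A small real-time model on a capable laptop would be routed to the client, while the same model on a low-memory phone, or a much larger model on any device, would go to the server.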

Pro Tip: Start with server-side processing for complex models, then optimize critical features for client-side execution as your application matures and browser capabilities improve. Professional AI software development services can advise you on this path.

Choosing the Right Technology Stack to Build an AI-Powered Web App

Selecting the appropriate technology stack is the foundation for developing a web app with machine learning capabilities. Frontend frameworks should align with AI integration requirements and team expertise. Backend technologies must support AI model serving efficiently, while model integration tools determine deployment flexibility and performance characteristics.

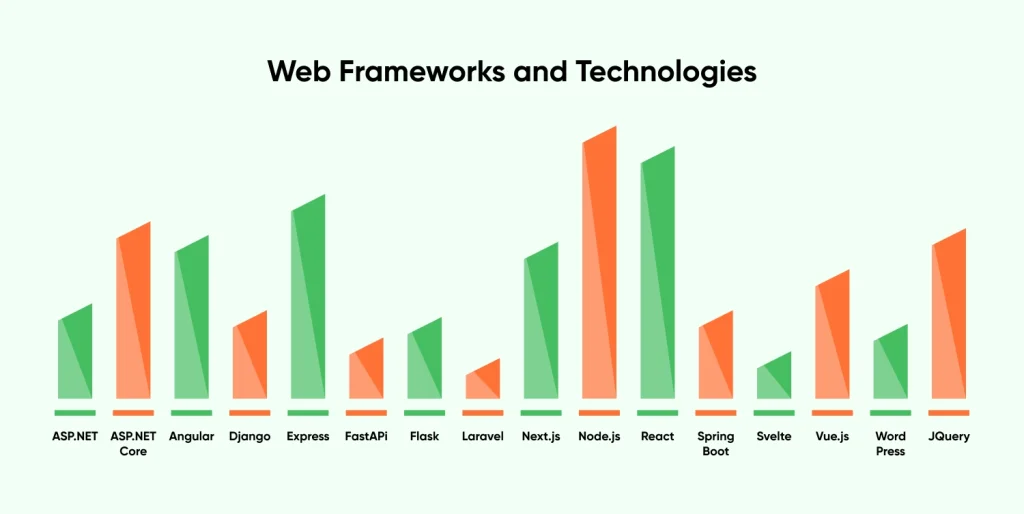

React dominates with 45% market share in web frameworks and technologies, offering extensive AI-focused libraries. Python remains preferred for AI development, while TensorFlow.js enables direct browser model deployment.

Here are some of the best technologies for integrating AI in web development:

Frontend Technologies

Your frontend is the user-facing layer that runs in the browser. Here are the most common choices:

- HTML5 & CSS3 – For structure and styling

- JavaScript – The core language of web interactivity

- Frameworks:

  - React.js – Powerful for building scalable UIs

  - Vue.js – Lightweight and easy to adopt

  - Svelte – Great for building high-performance apps with less boilerplate

These frameworks are commonly used alongside AI libraries like TensorFlow.js and ONNX.js to integrate AI into an app.

Backend Technologies

Even if you deploy the AI model in the browser, you may still need backend support for data logging, user authentication, or dynamic content.

- Node.js – JavaScript-based runtime for building fast and scalable server-side logic

- Python (Flask or Django) – Ideal for serving AI models to the browser through APIs

  - Flask: Lightweight and modular

  - Django: Full-featured and secure

AI and Model Integration Tools

Here are some browser-compatible AI libraries:

- TensorFlow.js – Load, train, and run models entirely in the browser using JavaScript

- ONNX.js – Run models exported to the ONNX format from PyTorch or TensorFlow (the project has since been succeeded by ONNX Runtime Web)

- WebAssembly (WASM) – Runs ML code compiled from languages such as C++ or Rust directly in the browser

Training or Importing a Custom AI Model

AI model development requires careful consideration of training approaches and deployment formats. Teams can either train models from scratch using frameworks like TensorFlow and PyTorch, or leverage pre-trained models through fine-tuning approaches.

TensorFlow offers comprehensive tools for building custom models with excellent web deployment support. PyTorch provides intuitive development experiences but requires conversion for browser deployment. Pre-trained models from sources like Hugging Face significantly reduce development time while maintaining high accuracy for common use cases.

Model export to web-compatible formats involves conversion tools like tensorflowjs_converter for TensorFlow models or ONNX format for cross-platform compatibility. These tools optimize models for browser execution through weight quantization and graph optimization techniques.

Pro Tip: Hire AI developers from a trusted AI software development company whose developers cover technologies such as Python, Java, and Node.js, so you have options when choosing the base tech stack for your browser-based AI application.

1. Training a Model

If you need a custom model, you can train it using frameworks like:

- TensorFlow – Ideal for image, voice, or text-based models

- PyTorch – Great for flexibility in model design

You’ll collect labeled data, define a model architecture, train the model, and evaluate it.

2. Fine-Tuning Pretrained Models

If you’re limited in data or compute, start with models like:

- MobileNet (for image classification)

- BERT (for natural language tasks)

These can be fine-tuned on your custom dataset and exported to browser-compatible formats.

3. Exporting the Model

Once trained, you must convert the model to a format suitable for web use:

- TensorFlow.js format (.json + binary weight files)

  - Use tensorflowjs_converter to convert .h5 or .pb to browser-ready format

- ONNX format (.onnx) for compatibility with ONNX.js

- Optimize using quantization to reduce file size and load time
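To see why quantization shrinks a model, the sketch below estimates file size from the parameter count and shows one simple way to map 32-bit weights to 8-bit values and back. This is a generic min/max (affine) scheme written for illustration; it is not the exact algorithm used by tensorflowjs_converter or any other tool, and all function names are hypothetical.

```typescript
type BitsPerWeight = 8 | 16 | 32;

// Approximate weight storage in MiB: parameters * bits / 8 bits per byte.
function estimateModelSizeMB(parameterCount: number, bits: BitsPerWeight): number {
  return (parameterCount * bits) / 8 / (1024 * 1024);
}

interface QuantizedWeights {
  values: Uint8Array; // one byte per weight
  min: number;        // smallest original weight
  scale: number;      // size of one quantization step
}

// Map each weight onto 256 evenly spaced levels between min and max.
function quantizeToUint8(weights: number[]): QuantizedWeights {
  const min = Math.min(...weights);
  const max = Math.max(...weights);
  const scale = max === min ? 1 : (max - min) / 255;
  const values = Uint8Array.from(weights, (w) => Math.round((w - min) / scale));
  return { values, min, scale };
}

// Recover approximate weights; the error per weight is at most half a step.
function dequantize(q: QuantizedWeights): number[] {
  return Array.from(q.values, (v) => v * q.scale + q.min);
}
```

Going from 32-bit to 8-bit storage cuts the weight files to roughly a quarter of their size, at the cost of the small rounding error visible when you compare the original weights with the dequantized ones.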

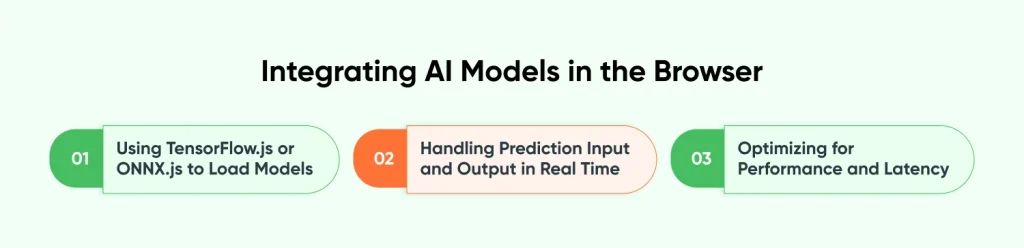

Integrating AI Models in the Browser

Browser AI integration involves loading models asynchronously to prevent main thread blocking. TensorFlow.js enables direct model loading with GPU acceleration support, while ONNX.js provides broad format compatibility with efficient WebGL backends for improved performance.

Using TensorFlow.js or ONNX.js to Load Models

Make sure to load models with proper error handling and clear loading indicators so users know what’s happening during initialization. This helps manage expectations and improves the overall experience.

Keep an eye on memory usage, especially if you’re loading multiple models. On devices with limited resources, this can quickly lead to crashes.

Also, be sure to implement model disposal methods to free up memory when models are no longer needed. Efficient resource management is key to keeping your app stable and responsive.
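One way to combine error handling, a loading indicator, and disposal is to wrap the model in a small holder that tracks its state. The sketch below is library-agnostic: `ModelSlot`, `LoadState`, and the injected `loader` function are hypothetical names, and the only thing assumed about the model is that it has a `dispose()` method, as TensorFlow.js models do.

```typescript
// Anything that can free its own memory.
interface DisposableModel {
  dispose(): void;
}

type LoadState = "idle" | "loading" | "ready" | "error";

class ModelSlot<M extends DisposableModel> {
  state: LoadState = "idle"; // drive a spinner or error message from this
  lastError: unknown = null;
  private model: M | null = null;
  private pending: Promise<M | null> | null = null;
  private readonly loader: () => Promise<M>;

  constructor(loader: () => Promise<M>) {
    this.loader = loader;
  }

  // Starts loading once; repeated calls share the same in-flight promise.
  load(): Promise<M | null> {
    if (this.pending) return this.pending;
    this.state = "loading";
    this.pending = Promise.resolve()
      .then(() => this.loader())
      .then(
        (model): M | null => {
          this.model = model;
          this.state = "ready";
          return model;
        },
        (err): M | null => {
          // Keep the error for display and allow a later retry.
          this.lastError = err;
          this.state = "error";
          this.pending = null;
          return null;
        }
      );
    return this.pending;
  }

  // Frees the model's memory when it is no longer needed.
  release(): void {
    if (this.model) {
      this.model.dispose();
      this.model = null;
    }
    this.pending = null;
    this.state = "idle";
  }
}
```

In a TensorFlow.js app the loader would typically wrap a model-loading call, and the UI would read `state` to show progress or a retry button. This sketch does not handle `release()` being called while a load is still in flight; a production version would need to.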

Handling Prediction Input and Output in Real Time

Implement robust input validation and preprocessing to ensure model inputs match training data formats. Design clear output presentation that communicates AI predictions effectively, including confidence scores when appropriate. Use debouncing for real-time predictions to avoid overwhelming models with rapid input changes.
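Debouncing can be sketched in a few lines. The helper below delays a call until the input has been quiet for a given time, so only the last value in a burst reaches the model. The 300 ms delay and the `runPrediction` stub are placeholders for illustration.

```typescript
// Returns a wrapper that runs fn only after waitMs have passed without a new call.
function debounce<A extends unknown[]>(
  fn: (...args: A) => void,
  waitMs: number
): (...args: A) => void {
  let timer: ReturnType<typeof setTimeout> | undefined;
  return (...args: A) => {
    // A new call cancels the previously scheduled one.
    if (timer !== undefined) clearTimeout(timer);
    timer = setTimeout(() => {
      timer = undefined;
      fn(...args);
    }, waitMs);
  };
}

// Hypothetical stand-in for the code that would call your model.
const predictionLog: string[] = [];
function runPrediction(text: string): void {
  predictionLog.push(text);
}

// Typing "h", "he", "hel" quickly triggers a single prediction for "hel".
const predictDebounced = debounce(runPrediction, 300);
```

You would call `predictDebounced` from an input event handler. For continuous sources such as a webcam, dropping frames while a prediction is still running is a common alternative to debouncing.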

Optimizing for Performance and Latency

Use Web Workers to run AI tasks on separate threads so the UI stays smooth and responsive. Cache predictions for repeated inputs to save time and reduce workload. For large models, load them in parts to speed up the initial load.
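Prediction caching can be as simple as a bounded map keyed by the input. The sketch below uses a least-recently-used eviction policy, which is one reasonable choice rather than a requirement; the class and function names are hypothetical, and it assumes inputs can be turned into a string key.

```typescript
// Small LRU cache: a Map keeps insertion order, so the first key is the oldest.
class PredictionCache<V> {
  private readonly entries = new Map<string, V>();
  private readonly maxEntries: number;

  constructor(maxEntries: number) {
    this.maxEntries = maxEntries;
  }

  has(key: string): boolean {
    return this.entries.has(key);
  }

  get(key: string): V | undefined {
    if (!this.entries.has(key)) return undefined;
    const value = this.entries.get(key) as V;
    // Re-insert to mark this entry as most recently used.
    this.entries.delete(key);
    this.entries.set(key, value);
    return value;
  }

  set(key: string, value: V): void {
    this.entries.delete(key);
    this.entries.set(key, value);
    if (this.entries.size > this.maxEntries) {
      const oldest = this.entries.keys().next().value;
      if (oldest !== undefined) this.entries.delete(oldest);
    }
  }
}

// Runs the (possibly expensive) predict function only on a cache miss.
function cachedPredict<V>(
  cache: PredictionCache<V>,
  input: string,
  predict: (input: string) => V
): V {
  if (cache.has(input)) return cache.get(input) as V;
  const result = predict(input);
  cache.set(input, result);
  return result;
}
```

For image inputs you would need to derive a key first, for example from a hash of the pixel data, which has its own cost and is worth measuring before adopting.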

Pro Tip: Add adaptive quality settings that adjust model complexity based on the user’s device and network conditions. This helps deliver optimal performance across a wide range of environments.
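A minimal sketch of adaptive quality selection follows. It takes a plain description of the device and returns a model tier. The tier names and every threshold are assumptions for illustration. In a browser, the fields could be filled from `navigator.deviceMemory`, `navigator.hardwareConcurrency`, and the Network Information API (`navigator.connection`), none of which is available in every browser, so each field is optional.

```typescript
interface DeviceProfile {
  memoryGB?: number;      // approximate RAM, if the browser reports it
  cpuCores?: number;      // logical cores, if reported
  effectiveType?: string; // "slow-2g" | "2g" | "3g" | "4g", if reported
  saveData?: boolean;     // user has asked for reduced data usage
}

type ModelTier = "lite" | "standard" | "full";

function pickModelTier(profile: DeviceProfile): ModelTier {
  // Assume a mid-range device when a value is unknown.
  const memoryGB = profile.memoryGB ?? 4;
  const cpuCores = profile.cpuCores ?? 4;
  const slowNetwork =
    profile.saveData === true ||
    profile.effectiveType === "slow-2g" ||
    profile.effectiveType === "2g";

  // Constrained devices or networks get the smallest model.
  if (slowNetwork || memoryGB < 4 || cpuCores < 4) return "lite";

  // Only clearly strong devices on a fast connection get the largest model.
  if (memoryGB >= 8 && cpuCores >= 8 && profile.effectiveType === "4g") return "full";

  return "standard";
}
```

Each tier would map to a different set of model files, for example a quantized variant for "lite" and the full-precision model for "full".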

Hosting and Serving Your Model

Where to host your model may seem like a minor decision, but it requires careful planning. The right choice depends mainly on the size of the model and on your primary goals: do you want an AI-powered browser application that runs as fast as possible, or do you prioritize privacy?

Smaller models under 10 MB can be deployed directly in the frontend, but for larger models with higher computation requirements, server-side deployment using edge computing or an API may prove to be a better choice.

Hosting Small Models in the Frontend with TensorFlow.js

Frontend model hosting eliminates server dependencies and reduces operational costs. Distribute model files through CDNs to minimize global loading times and configure proper caching headers. This approach works best for lightweight models that fit within browser memory constraints.

Serving Large Models via APIs Using Flask or FastAPI

Create RESTful APIs using Flask or FastAPI for models exceeding browser limitations. Implement auto-scaling solutions to handle varying inference loads. Container orchestration platforms like Kubernetes excel at managing AI model serving infrastructure with proper load balancing.

Using CDN or Edge Computing for Performance

Edge computing reduces latency by processing AI requests closer to users. Services like Cloudflare Workers and AWS Lambda@Edge enable global AI model deployment with minimal infrastructure management. This approach particularly benefits browser-based AI agent applications that require real-time responses.

Pro Tip: Use edge computing for lightweight operations while keeping complex models on centralized servers to balance performance and cost efficiency effectively.

User Interface Considerations

The user interface is crucial to the success of an AI-powered application. The UI should guide users to the most important sections and help them understand the AI features and what happens during processing.

Input Handling

- Use webcam, drag-and-drop, or form inputs depending on the model type

- Validate user inputs before sending to the model

- Use progress indicators during prediction to enhance UX

Example: MediaPipe offers real-time input handling for webcam-based AI models.

Displaying Predictions

- Show results in real-time with clear visuals (e.g., image overlays, graphs, text boxes)

- Display prediction confidence using percentages or color codes

- Offer users an explanation of why a prediction was made, when possible
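Many classification models return raw scores rather than percentages. The sketch below converts scores to probabilities with softmax and formats the top results for display. The function names and the mask labels in the usage note are illustrative; whether your model outputs raw scores or already-normalized probabilities depends on how it was built, so check before applying softmax.

```typescript
// Converts raw scores into probabilities that sum to 1.
function softmax(scores: number[]): number[] {
  const max = Math.max(...scores); // subtract the max for numerical stability
  const exps = scores.map((s) => Math.exp(s - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

interface LabeledPrediction {
  label: string;
  confidence: number; // 0..1
  display: string;    // e.g. "mask: 88.1%"
}

// Returns the k most likely labels, highest confidence first.
function topPredictions(labels: string[], scores: number[], k: number): LabeledPrediction[] {
  if (labels.length !== scores.length) {
    throw new Error("labels and scores must have the same length");
  }
  return softmax(scores)
    .map((confidence, i) => ({
      label: labels[i],
      confidence,
      display: `${labels[i]}: ${(confidence * 100).toFixed(1)}%`,
    }))
    .sort((a, b) => b.confidence - a.confidence)
    .slice(0, k);
}
```

For the face mask detector mentioned earlier, `topPredictions(["mask", "no mask"], scores, 1)` would give a single label and percentage to overlay on the webcam image.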

Accessibility and Responsiveness

- Make sure the UI works smoothly on desktop and mobile browsers.

- Add screen reader support with proper ARIA labels.

- Avoid heavy animations or effects that could interfere with AI inference timing.

Browser-Based AI Applications: Security and Privacy Best Practices

AI application security requires comprehensive approaches covering data privacy, endpoint protection, and regulatory compliance. Process sensitive data locally when possible to maintain user privacy and eliminate data transmission concerns.

Keeping User Data Local for Privacy

Client-side AI processing helps you comply with strict privacy regulations and reduces server costs, because personal data never has to leave the user’s device. Collect only the data your AI operations need, and implement retention policies that automatically delete processed data after a specified period. This approach particularly benefits applications handling sensitive personal information. You can find more security best practices in the OWASP AI Security and Privacy Guide.

Securing API Endpoints and Model Files

Implement robust authentication for AI API endpoints using API keys, JSON Web Tokens (JWT), or OAuth2, depending on your security requirements; an experienced web app development company can help with this. Protect proprietary AI models through obfuscation, access controls, and monitoring. Because any model file shipped to the browser can be downloaded by its users, consider server-side deployment for highly sensitive or valuable models.

Complying with Data Protection Regulations (GDPR, CCPA)

It is important to make sure your AI-powered application follows data protection laws, with proper consent management, data deletion policies, and transparency in privacy policies.

Pro Tip: Undertake privacy impact assessments before deploying AI features, especially when processing personal or sensitive data, to ensure comprehensive compliance coverage.

Testing and Debugging the Application

AI application testing should cover various aspects such as model performance, integration reliability, and user experience validation. Implement automated testing for model accuracy, precision, and recall metrics against known datasets.
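The metrics named above are straightforward to compute for a binary classifier. The sketch below takes predicted and true labels from a known dataset and returns accuracy, precision, and recall. The function name is hypothetical, and returning 0 when a denominator is zero is a convention chosen for this example.

```typescript
interface BinaryMetrics {
  accuracy: number;  // share of all predictions that were correct
  precision: number; // share of predicted positives that were truly positive
  recall: number;    // share of true positives that the model found
}

function evaluateBinary(predicted: boolean[], actual: boolean[]): BinaryMetrics {
  if (predicted.length !== actual.length || predicted.length === 0) {
    throw new Error("predicted and actual must be non-empty and the same length");
  }
  let tp = 0, fp = 0, fn = 0, tn = 0;
  for (let i = 0; i < predicted.length; i++) {
    if (predicted[i] && actual[i]) tp++;
    else if (predicted[i] && !actual[i]) fp++;
    else if (!predicted[i] && actual[i]) fn++;
    else tn++;
  }
  return {
    accuracy: (tp + tn) / predicted.length,
    precision: tp + fp === 0 ? 0 : tp / (tp + fp),
    recall: tp + fn === 0 ? 0 : tp / (tp + fn),
  };
}
```

In an automated test you would run the model over a fixed evaluation set and fail the build if any metric drops below a threshold you have agreed on.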

Test AI behavior with unusual, corrupted, or edge-case inputs to ensure robust error handling that prevents application crashes. Test API communication between frontend and backend components, verifying proper error handling and data serialization.

Here are the different kinds of testing you should conduct to securely build browser-based applications:

- Unit Testing for AI Logic

  - Test the behavior of your model in isolation

  - Verify inputs and outputs using test datasets

  - Use tools like Jest or Mocha for JavaScript-based testing

- Integration Testing

  - Validate the complete flow from user input to AI prediction output

  - Test across different browsers (Chrome, Firefox, Safari, Edge)

  - Simulate user interaction using tools like Cypress

- Real User Testing

  - Observe users interacting with your AI tool

  - Identify usability issues or unexpected behavior

  - Use analytics tools like Hotjar or Google Analytics to understand usage patterns

- Debugging Tips

  - Use browser dev tools (console, network tab) for error inspection

  - Log key steps of the AI prediction pipeline

  - Monitor model loading and prediction times to catch performance issues

Quick Performance Optimization Tips

- Model Quantization: Reduce model size by converting 32-bit weights to 8-bit or 16-bit representations, improving loading times with minimal accuracy loss

- Progressive Loading: Load model components on-demand rather than all at once to improve initial application loading times and reduce memory consumption

- Prediction Caching: Cache model predictions for repeated inputs and implement browser storage for frequently used models

- Web Workers: Use separate threads for AI inference to prevent UI blocking during computation

- Adaptive Model Selection: Serve lightweight models to resource-constrained devices automatically based on device capabilities

- Memory Management: Monitor browser memory usage and implement model disposal methods to prevent crashes

- Browser Compatibility: Detect AI capabilities before model loading and provide fallback functionality for unsupported browsers

- CDN Distribution: Use content delivery networks for global model file distribution to minimize loading times

- Lazy Loading: Implement progressive model loading strategies that prioritize essential components first

- Performance Monitoring: Track inference times and optimize based on real user performance data
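The browser compatibility tip above can be sketched as a simple fallback chain. The function below picks the fastest available execution backend from a set of capability flags. The backend names match the ones TensorFlow.js uses, but the interface, the function, and the priority order are assumptions for this example.

```typescript
type Backend = "webgpu" | "webgl" | "wasm" | "cpu";

// Capability flags gathered once at startup. In a browser these could come from:
//   hasWebGPU: "gpu" in navigator
//   hasWebGL:  a canvas whose getContext("webgl2") or getContext("webgl") is non-null
//   hasWasm:   typeof WebAssembly === "object"
interface BrowserCapabilities {
  hasWebGPU: boolean;
  hasWebGL: boolean;
  hasWasm: boolean;
}

// Prefer GPU backends, then WebAssembly, and fall back to plain JavaScript.
function pickBackend(caps: BrowserCapabilities): Backend {
  if (caps.hasWebGPU) return "webgpu";
  if (caps.hasWebGL) return "webgl";
  if (caps.hasWasm) return "wasm";
  return "cpu";
}
```

Because "cpu" is always available, the app can still run on older browsers, although you may want to pair that fallback with a lighter model or a server-side path.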

Deployment and CI/CD for AI Applications

Automate deployment using GitHub Actions workflows that handle model conversion, testing, and deployment with automated model validation. Leverage platform-specific features of Netlify and Vercel for AI application deployment, as both platforms offer excellent support for static sites and API functions.

Implement version control for AI models alongside code versioning, tracking performance metrics across versions to facilitate rollback decisions. Deploy new models using blue-green strategies to minimize downtime and enable quick rollbacks.

Implement pipelines that retrain models based on new data or performance degradation. Integrate model performance monitoring with deployment pipelines to trigger retraining or rollbacks based on performance thresholds automatically.

Pro Tip: Implement canary deployments for AI model updates, gradually increasing traffic to new models while monitoring performance metrics to ensure stability.
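A canary rollout needs a stable way to decide which users see the new model. One common approach, sketched below, hashes a user identifier into a bucket from 0 to 99 and compares it with the current rollout percentage. The function names are hypothetical, and the FNV-1a style hash is just one convenient choice.

```typescript
type ModelVersion = "stable" | "canary";

// Deterministically maps a user ID to a bucket in the range 0..99.
function hashToBucket(userId: string): number {
  let hash = 0x811c9dc5; // FNV-1a 32-bit offset basis
  for (let i = 0; i < userId.length; i++) {
    hash ^= userId.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193); // FNV prime, 32-bit multiply
  }
  return (hash >>> 0) % 100;
}

// Users whose bucket falls below the rollout percentage get the new model.
function pickModelVersion(userId: string, canaryPercent: number): ModelVersion {
  return hashToBucket(userId) < canaryPercent ? "canary" : "stable";
}
```

Because the bucket depends only on the user ID, a user who gets the canary at 10% keeps it as the percentage rises, and setting the percentage back to 0 rolls everyone back to the stable model.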

Real-World Use Cases and Examples

AI-Based Image Editing Tools

Browser-based image editors like Photopea and Canva demonstrate successful AI integration for automatic background removal, style transfer, and image enhancement. These applications process millions of images monthly while maintaining responsive user experiences through optimized model deployment and efficient resource management.

Advanced features include real-time filters, intelligent cropping suggestions, and automated photo corrections that run entirely in browsers. These tools prove that sophisticated computer vision models can operate effectively in web environments without compromising quality or performance.

Popular AI-Based Image Editing Tools Include:

- Remove.bg – removes image backgrounds using deep learning, with the whole workflow available in the browser.

- Fotor – provides intelligent enhancements with facial retouching and background blur.

Natural Language Chatbots in the Browser

Modern web applications use AI agents to offer customer support, generate content, and provide interactive help right within the browser. Companies like Intercom and Drift have built chatbots that can handle complex conversations smoothly, with no plugins or downloads needed. This shows how effective custom NLP models can be in web environments.

These implementations showcase real-time language processing, contextual understanding, and multi-turn conversations that rival native applications. Browser-based agent applications integrate seamlessly with existing web workflows while maintaining high performance standards.

Real-Time Object Detection from Webcam Feed

Applications using webcam feeds for real-time object detection showcase browser AI capabilities through accessibility tools, security systems, and augmented reality experiences that run entirely in web browsers without requiring additional software installation.

Examples include:

- Pose detection for fitness applications

- Gesture recognition for interactive presentations

- Safety monitoring systems that process video streams in real-time.

These applications demonstrate the practical implementation of computer vision models in browser environments.

Future Trends in Browser-Based AI Apps

WebGPU and Acceleration APIs

WebGPU promises significant performance improvements for AI workloads in browsers, with early implementations reportedly showing 3-5x performance gains for compatible models. This technology expands the possibilities for complex AI applications and enables more sophisticated browser-based agent applications with desktop-class performance.

Federated Learning and On-Device Training

Modern browser AI capabilities are enabling collaborative model training in which developers never need to centralize sensitive data: each device trains on its own data and shares only model updates. This approach addresses privacy concerns while improving model performance through diverse training data, making it particularly valuable for privacy-sensitive applications that require personalized AI experiences.
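The server-side step of federated learning is often a weighted average of the updates sent by clients, a technique usually called federated averaging. The article does not name a specific algorithm, so treat the sketch below as one common option; the types and function name are hypothetical, and weights are shown as flat number arrays for simplicity.

```typescript
// What one device sends back after training locally: weights only, no raw data.
interface ClientUpdate {
  weights: number[];   // locally trained model weights, flattened
  sampleCount: number; // how many local examples were used
}

// Combines client updates into one global model, weighting each client
// by the amount of data it trained on.
function federatedAverage(updates: ClientUpdate[]): number[] {
  if (updates.length === 0) throw new Error("at least one update is required");
  const size = updates[0].weights.length;
  const totalSamples = updates.reduce((sum, u) => sum + u.sampleCount, 0);
  if (totalSamples <= 0) throw new Error("total sample count must be positive");

  const summed = new Array<number>(size).fill(0);
  for (const update of updates) {
    if (update.weights.length !== size) {
      throw new Error("all updates must have the same number of weights");
    }
    for (let i = 0; i < size; i++) {
      summed[i] += update.weights[i] * update.sampleCount;
    }
  }
  return summed.map((value) => value / totalSamples);
}
```

A client that trained on three times as many examples pulls the average three times as hard. Production systems typically add protections such as secure aggregation on top of this basic step.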

Improvements in Client-Side AI Capabilities

Browser vendors continue to invest in AI-specific optimizations, and future browsers will likely expose dedicated AI hardware, such as neural processing units, along with optimized inference engines. These improvements will make browser-based agent applications more powerful and efficient while reducing dependence on server-side processing.

Advanced Web Standards Integration

Web standards are likely to add more integration capabilities for AI-powered applications, improving hardware acceleration, expanding storage options, and strengthening AI-specific security features. Hire web developers who are aware of these trends and prepared for them for your next project.

Final Words

Building a browser-based AI application requires thorough planning, appropriate tech stack selection, and thorough testing. Start with simple AI features and gradually increase complexity as you gain experience. The combination of modern web technologies and AI frameworks makes sophisticated applications possible while maintaining web accessibility advantages and cross-platform compatibility.

FAQs on Building Browser-Based AI Applications

What is a browser-based AI Application?

A browser-based AI application runs entirely within a web browser without requiring server-side processing or separate software installation. These apps leverage JavaScript frameworks and WebAssembly to execute AI models directly on the user’s device, performing tasks like image recognition or text analysis using the browser’s computational resources.

How to Run a Custom AI Model in the Browser?

You can run custom AI models using TensorFlow.js, ONNX.js, or WebAssembly-based runtimes after converting the trained models into browser-compatible formats. The library fetches the model files and executes them using the browser’s CPU or WebGL for GPU acceleration, enabling real-time inference without external servers.

Which Technologies Can I Use to Build a Browser-Based AI App?

Key technologies include TensorFlow.js for machine learning, WebAssembly for high-performance computing, and WebGL for GPU acceleration. Frontend frameworks like React or Vue handle the UI, while Web Workers manage heavy computations without blocking the main thread.

What Are Some Examples of Browser-Based AI Applications?

Examples include Google’s Teachable Machine for training custom models, RunwayML’s browser editor for creative AI, and social media face filter applications. Photo editing tools use AI for background removal, while coding assistants provide real-time code suggestions entirely in the browser.

Can You Build a Web App With AI?

It is possible to build web applications with integrated AI capabilities using modern web technologies. These apps handle complex tasks like natural language processing, computer vision, and predictive analytics entirely client-side, combining JavaScript AI libraries with responsive design frameworks for cross-platform compatibility.