AI is no longer a concept of the future. In today's data-driven era, we use countless AI-powered applications every day. With AI services deeply integrated into social media apps, generative AI tools like ChatGPT and Claude.ai, and many other use cases, we need to stay vigilant about the significant privacy concerns these promising technologies bring.

Data breaches at major platforms such as Meta's Facebook, along with other incidents, have exposed millions of personal records in recent years. AI systems are also trained on our sensitive information, which helps them model our behavior and identity. This calls for a new school of thought: privacy-first AI.

Deploying AI also requires companies to comply with data privacy regulations such as HIPAA and GDPR, depending on their industry and country of operation. Serious GDPR violations can draw fines of up to €20 million or 4% of worldwide annual turnover, whichever is higher, which underscores the importance of building privacy-first AI systems.

What is Privacy-First AI?

To address modern-day privacy and security concerns, organizations need responsible AI best practices, and privacy-first AI provides that framework. It shifts the focus from adding security at the end of an AI project to building AI applications with privacy at the centre of every decision.

Unlike the traditional approach of collecting massive datasets in centralized data warehouses, privacy-first AI prioritizes user privacy through its architecture and implementation practices, and it applies this philosophy across the entire AI lifecycle, from data collection to deployment and monitoring.

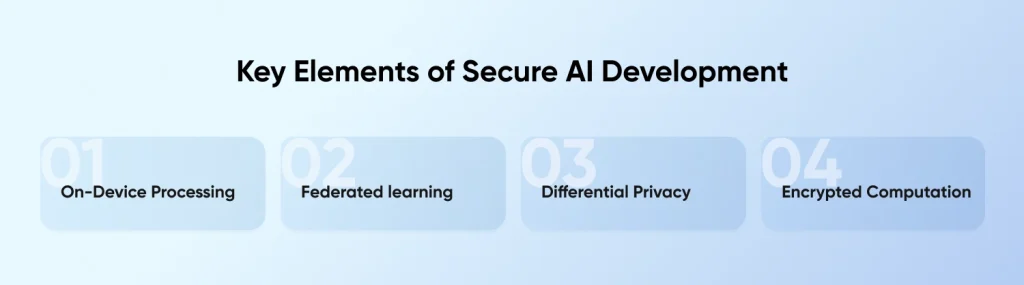

Key Elements of Secure AI Development

On-device Processing

On-device AI solutions, including generative AI, move computation from cloud servers to the user's device. While this increases the processing load on the device, it keeps sensitive information local and reduces exposure risks.

Federated learning

Federated learning is a secure AI development practice that trains a model across a distributed network of devices without centralizing raw data. Each device trains on its own local data and sends only model updates to a central server, which aggregates them into a shared model; the underlying data never leaves the device.
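To make the data flow concrete, here is a minimal sketch of federated averaging (FedAvg), one common federated learning algorithm, in plain Python. It rests on simplifying assumptions: a one-parameter linear model, clients simulated in a single process, and hypothetical function names (`local_update`, `federated_averaging`). Only an updated weight and a sample count leave each client; the `(x, y)` pairs never do.

```python
from typing import List, Tuple

# Hypothetical toy setup: each client holds (x, y) pairs for a 1-D model y = w * x.
Dataset = List[Tuple[float, float]]


def local_update(w: float, data: Dataset, lr: float = 0.05, epochs: int = 5) -> float:
    """Run a few epochs of gradient descent on mean squared error, on the client only."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_averaging(clients: List[Dataset], rounds: int = 20, w: float = 0.0) -> float:
    """FedAvg: broadcast the global weight, train locally, average the returned weights."""
    for _ in range(rounds):
        # Each client sends back only its updated weight and its sample count.
        updates = [(local_update(w, data), len(data)) for data in clients]
        total = sum(n for _, n in updates)
        # The server sees no raw data, only the sample-weighted average of the updates.
        w = sum(w_k * n for w_k, n in updates) / total
    return w


clients = [
    [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)],  # client A's private data (true slope 2)
    [(0.5, 1.0), (1.5, 3.0)],              # client B's private data
]
print(round(federated_averaging(clients), 3))  # → 2.0
```

Weighting by sample count lets clients with more data influence the global model proportionally. Frameworks such as Flower and TensorFlow Federated handle the client orchestration, communication, and aggregation strategies that this sketch leaves out.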

Differential Privacy

Differential privacy adds calibrated noise to data or model outputs so that no individual can be identified from the results, while preserving most of the dataset's statistical utility.
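One standard way to add calibrated noise is the Laplace mechanism. The sketch below assumes a simple counting query, whose sensitivity is 1 because adding or removing one person changes the count by at most 1. Noise is therefore drawn from a Laplace distribution with scale 1/ε, where ε (epsilon) is the privacy parameter: smaller values mean more noise and stronger privacy. The function names are hypothetical. Python's `random` module is neither a cryptographically secure nor a floating-point-safe noise source, so production code should rely on a vetted library such as PyDP or OpenDP.

```python
import random
from typing import Callable, Iterable, Optional


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records: Iterable, predicate: Callable[[object], bool],
                  epsilon: float, rng: Optional[random.Random] = None) -> float:
    """Release a count under epsilon-differential privacy via the Laplace mechanism.

    A counting query has sensitivity 1 (adding or removing one person changes the
    count by at most 1), so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()  # toy noise source, not cryptographically secure
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


ages = [23, 35, 41, 52, 67, 29, 58]
print(private_count(ages, lambda a: a >= 50, epsilon=0.5))  # true count is 3, plus noise
```

Any single release is noisy, but the noise is unbiased, so aggregate statistics stay useful. Every additional release of the same data spends more of the privacy budget.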

Encrypted Computation

Encrypted computation, such as homomorphic encryption, lets developers process data while it remains encrypted, without decrypting it first. Because the data is never exposed in plaintext during processing, privacy is maintained throughout the analysis.
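To show what computing on encrypted data means, here is a toy implementation of the Paillier cryptosystem. It is an additively homomorphic scheme, chosen here for brevity: multiplying two ciphertexts yields an encryption of the sum of their plaintexts. Treat it as a teaching sketch under stated assumptions, not production cryptography. The hard-coded primes are far too small to be secure, and libraries such as Microsoft SEAL implement different, lattice-based schemes (BFV, BGV, CKKS).

```python
import math
import secrets
from typing import Tuple

PublicKey = Tuple[int, int]   # (n, g)
PrivateKey = Tuple[int, int]  # (lambda, mu)


def keygen(p: int, q: int) -> Tuple[PublicKey, PrivateKey]:
    """Toy Paillier key generation from two primes (far too small to be secure here)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    g = n + 1  # common simplification; mu is then simply lam^-1 mod n
    mu = pow(lam, -1, n)
    return (n, g), (lam, mu)


def encrypt(pub: PublicKey, m: int) -> int:
    n, g = pub
    n2 = n * n
    while True:  # fresh randomness r, coprime to n, makes encryption probabilistic
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n2) * pow(r, n, n2)) % n2


def decrypt(pub: PublicKey, priv: PrivateKey, c: int) -> int:
    n, _ = pub
    lam, mu = priv
    x = pow(c, lam, n * n)
    return ((x - 1) // n * mu) % n  # L(x) = (x - 1) / n, then multiply by mu


def add_encrypted(pub: PublicKey, c1: int, c2: int) -> int:
    """Homomorphic addition: needs only the public key, never the plaintexts."""
    n, _ = pub
    return (c1 * c2) % (n * n)


pub, priv = keygen(1019, 1031)
total = add_encrypted(pub, encrypt(pub, 120), encrypt(pub, 35))
print(decrypt(pub, priv, total))  # → 155
```

The party running `add_encrypted` never holds the private key, which is the property that lets untrusted infrastructure compute on sensitive values.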

What is the Difference Between Traditional AI and Privacy-First AI?

| Aspect | Traditional AI | Privacy-First AI |
| --- | --- | --- |
| Data Storage | Centralized repositories | Distributed or on-device |
| Training Location | Cloud servers | Edge devices or federated |
| Data Access | Full access to raw data | Limited or anonymized access |
| Privacy Controls | Often added later | Built-in by design |
| Regulatory Risk | Higher compliance burden | Lower, with compliance designed in |
| Deployment Model | Cloud-dependent | Works offline or with minimal connectivity |
| User Trust | Variable, often lower | Typically higher |

Why Does Privacy Matter in AI Development?

Privacy concerns in AI development go beyond theoretical risks. Major tech companies have faced billions of dollars in fines for failing to protect user data. According to IBM's Cost of a Data Breach Report 2024, the average data breach costs organizations USD 4.88 million.

AI systems present unique privacy challenges due to their ability to:

- Infer sensitive attributes not explicitly collected

- Memorize training data that might contain personal information

- Generate outputs that inadvertently reveal private details

- Create detailed profiles through seemingly innocent data points

Regulatory Environment

A complex web of regulations governs AI and data privacy globally:

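- GDPR establishes strict requirements for processing personal data in the EU, including rights related to automated decision-making, such as access to meaningful information about the logic involved (often described as a “right to explanation”).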

- CCPA/CPRA gives California residents control over their personal information and places obligations on businesses handling this data.

- HIPAA regulates protected health information (PHI) in the US, and its Privacy and Security Rules apply to AI systems that process medical data.

- AI-specific regulations are emerging worldwide, including the EU AI Act, which categorizes AI systems by risk level and imposes stricter requirements on high-risk applications.

Trust and User Perception

Privacy directly affects user trust and the adoption of AI systems. According to Pew Research Center, 81% of Americans say they have little or no control over the data companies collect about them.

Organizations championing privacy-first AI often gain competitive advantages:

- Higher user engagement and retention

- Reduced abandonment rates during onboarding

- Ability to operate in privacy-sensitive industries

- Differentiation in crowded markets

Core Principles of Privacy-First AI Architecture

Implementing privacy-first AI requires adherence to several key architectural principles that guide development decisions from inception through deployment.

Data Minimization and Purpose Limitation

Data minimization is one of the most effective secure AI development practices. If you collect only the data you need and filter out sensitive fields from the start, that data can never be leaked, because it was never stored.

How to set up effective data minimization best practices:

- Collect only the data needed for the specific AI function

- Establish clear retention policies with automatic deletion

- Conduct regular data store audits to remove unnecessary information

- Use synthetic data for development and testing whenever possible

Purpose limitation extends this concept by restricting data use to purposes defined and disclosed in advance. This prevents function creep, where collected data is later processed for undisclosed purposes. The goal is to collect the right data rather than accumulate excessive data.
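A minimal Python sketch of these ideas follows. It assumes a hypothetical purpose registry (`ALLOWED_FIELDS`) that maps each disclosed purpose to the fields it may use, plus a hypothetical 30-day retention period; all field names are invented for illustration. Fields outside the allowlist are dropped, undeclared purposes are rejected outright, and expired records are filtered out.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Set

# Hypothetical purpose registry: each disclosed purpose lists the only fields it may use.
ALLOWED_FIELDS: Dict[str, Set[str]] = {
    "fraud_scoring": {"user_id", "amount", "merchant_category", "collected_at"},
    "recommendations": {"user_id", "product_views", "collected_at"},
}
RETENTION = timedelta(days=30)  # hypothetical retention period


def minimize(record: dict, purpose: str) -> dict:
    """Keep only the fields the declared purpose is allowed to use."""
    allowed = ALLOWED_FIELDS.get(purpose)
    if allowed is None:
        # Purpose limitation: refuse processing for purposes that were never disclosed.
        raise PermissionError(f"undeclared purpose: {purpose!r}")
    return {key: value for key, value in record.items() if key in allowed}


def apply_retention(records: List[dict], now: datetime) -> List[dict]:
    """Automatic deletion: drop records collected before the retention cutoff."""
    cutoff = now - RETENTION
    return [r for r in records if r["collected_at"] >= cutoff]


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
raw = {"user_id": "u1", "email": "a@example.com", "amount": 42.0,
       "merchant_category": "books", "collected_at": now}
print(sorted(minimize(raw, "fraud_scoring")))
# → ['amount', 'collected_at', 'merchant_category', 'user_id']
```

Applying `minimize` at ingestion time, before anything is written to storage, is what keeps the dropped fields from ever existing in a data store that could leak.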

On-Device AI Applications and Edge AI Security

On-device inference runs AI models directly on users' devices, which makes it one of the most effective ways to keep sensitive data local. This approach provides several benefits:

- Reduces the risk of transmitting sensitive data to servers

- Provides offline AI functionalities without depending on cloud servers

- Delivers low latency for time-sensitive applications

- Reduces cloud computing costs and digital carbon footprint

Edge AI extends these benefits to a wide range of devices through dedicated frameworks like TensorFlow Lite and Core ML. They optimize models for resource-constrained environments using model compression techniques such as quantization, pruning, and knowledge distillation, along with hardware-specific optimizations.
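Quantization is the easiest of these to illustrate. The sketch below shows a simplified form of symmetric, per-tensor int8 post-training quantization in plain Python. Each float weight is mapped to an integer in [-127, 127] using a single scale factor, which cuts storage from 32 bits to 8 bits per weight. This is an assumption-based illustration of the idea, not how TensorFlow Lite or Core ML implement it internally. Their converters apply quantization for you, and their exact schemes differ.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to int8 codes with one symmetric scale (zero point fixed at 0)."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_int8(codes: List[int], scale: float) -> List[float]:
    """Approximate reconstruction used at inference time."""
    return [c * scale for c in codes]


weights = [0.51, -1.27, 0.003, 0.9]
codes, scale = quantize_int8(weights)
print(codes)  # → [51, -127, 0, 90]
```

Each dequantized weight differs from the original by at most half the scale factor. Pruning and knowledge distillation shrink models in different ways, by removing weights and by training a smaller model, respectively.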

Google’s Gboard predictive text and Apple’s Face ID show how AI features can run on-device while keeping users’ personal data on the device.

Anonymization and Differential Privacy

Data anonymization helps prevent the identification of individuals within datasets, and it goes beyond removing direct identifiers like names or addresses. Adequate anonymization of AI training data must account for:

- Quasi-identifiers that could enable re-identification when combined

- Dataset context and external information that might allow deanonymization

- Statistical disclosure risk through outliers or unique combinations
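One common way to quantify the quasi-identifier risk listed above is k-anonymity: every combination of quasi-identifier values must be shared by at least k records. The sketch below uses invented records and a hypothetical generalization rule (10-year age bands and 3-digit ZIP prefixes) to measure k before and after generalization. k-anonymity is only one measure. On its own, it does not stop attacks where everyone in a group shares the same sensitive value.

```python
from collections import Counter
from typing import Any, Dict, List, Sequence

Record = Dict[str, Any]


def k_anonymity(records: List[Record], quasi_identifiers: Sequence[str]) -> int:
    """Size of the smallest group of records sharing the same quasi-identifier values."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values())


def generalize(record: Record) -> Record:
    """Hypothetical rule: 10-year age bands and 3-digit ZIP prefixes."""
    decade = (record["age"] // 10) * 10
    return {**record, "age": f"{decade}-{decade + 9}", "zip": record["zip"][:3] + "**"}


# Invented example records; "diagnosis" is the sensitive attribute.
patients = [
    {"age": 34, "zip": "94107", "diagnosis": "flu"},
    {"age": 36, "zip": "94110", "diagnosis": "asthma"},
    {"age": 52, "zip": "10001", "diagnosis": "flu"},
    {"age": 57, "zip": "10003", "diagnosis": "diabetes"},
]
QUASI_IDENTIFIERS = ("age", "zip")
print(k_anonymity(patients, QUASI_IDENTIFIERS))                            # → 1
print(k_anonymity([generalize(p) for p in patients], QUASI_IDENTIFIERS))  # → 2
```

Generalizing further raises k but blurs the data more, which is the same privacy-utility tradeoff that differential privacy makes explicit.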

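Differential privacy is a mathematical definition of privacy rather than a single algorithm. A mechanism is differentially private if adding or removing any one person’s record changes the probability of any output by at most a factor of e^ε, so the result reveals very little about any individual. Mechanisms meet this definition by adding noise calibrated to how much one record can change a query’s result. The parameter ε, known as the “privacy budget” or “epsilon value,” controls the privacy-utility tradeoff: smaller values mean more noise and stronger privacy.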
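The sketch below shows how a privacy budget can be enforced in code. It assumes basic sequential composition, under which the ε values of successive queries on the same data add up. It uses a hypothetical `PrivacyBudget` class together with a clipped, Laplace-noised sum query. Real deployments usually rely on tighter accounting methods and audited libraries, so treat this as an illustration of the bookkeeping only.

```python
import random
from typing import List


class PrivacyBudget:
    """Hypothetical tracker of total epsilon spent under basic sequential composition."""

    def __init__(self, total_epsilon: float):
        self.total = total_epsilon
        self.spent = 0.0

    def charge(self, epsilon: float) -> None:
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        if self.spent + epsilon > self.total + 1e-12:  # small tolerance for float rounding
            raise RuntimeError("privacy budget exhausted; query refused")
        self.spent += epsilon


def noisy_sum(values: List[float], lower: float, upper: float, epsilon: float,
              budget: PrivacyBudget, rng: random.Random) -> float:
    """Epsilon-DP sum: clip each value to [lower, upper], then add Laplace noise."""
    if lower >= upper:
        raise ValueError("lower must be below upper")
    budget.charge(epsilon)  # refuse before touching the data if over budget
    clipped = [min(max(v, lower), upper) for v in values]
    # Adding or removing one record changes the clipped sum by at most this much.
    sensitivity = max(abs(lower), abs(upper))
    scale = sensitivity / epsilon
    noise = rng.expovariate(1 / scale) - rng.expovariate(1 / scale)  # Laplace(0, scale)
    return sum(clipped) + noise


budget = PrivacyBudget(total_epsilon=1.0)
rng = random.Random(7)  # toy noise source, not cryptographically secure
salaries = [52_000, 61_000, 58_500, 300_000]
print(noisy_sum(salaries, 0, 100_000, epsilon=0.5, budget=budget, rng=rng))
# A second epsilon=0.5 query is still allowed; a third raises RuntimeError.
```

Clipping bounds how much any one person can move the result, which is what makes the noise scale well defined.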

How to Ensure Secure AI Development?

To develop truly privacy-first AI applications, you need access to skilled AI developers with experience providing secure AI software development services. They should follow a security-driven approach throughout the AI implementation lifecycle. Here are some promising strategies to do so:

Security-by-Design in AI Workflows

Integrating security into AI workflows from the start changes how security is handled in both AI MVP development and full-fledged AI projects. Instead of treating security protocols as a final step, this approach builds protective measures into every development phase.

From the initial architecture decisions to final deployment and monitoring, security-by-design provides a strong foundation for privacy-first AI. This preventive strategy reduces vulnerabilities compared to reactive measures.

Role of MLOps in Privacy Assurance

A privacy-focused MLOps pipeline starts with data classification systems that tag and flag sensitive information. Tools like MLflow or DVC (Data Version Control) track datasets, models, and experiments, giving you an auditable foundation for automated privacy checks during model training and deployment, such as differential privacy libraries and output filtering.
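As a rough illustration of the data classification step, the sketch below tags dataset columns that appear to contain personal data using a few regular expressions. The patterns and the `tag_dataset` helper are hypothetical and intentionally simple. Real classifiers need far broader coverage (names, addresses, free text, locale-specific formats) and are usually paired with manual review.

```python
import re
from typing import Dict, List, Set

# Hypothetical, deliberately simple detectors for a few common PII formats.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def classify_column(values: List[object]) -> Set[str]:
    """Return the PII tags whose pattern matches any value in the column."""
    tags = set()
    for value in values:
        for tag, pattern in PII_PATTERNS.items():
            if pattern.search(str(value)):
                tags.add(tag)
    return tags


def tag_dataset(columns: Dict[str, List[object]]) -> Dict[str, List[str]]:
    """Map each column name to a sorted list of detected PII tags."""
    return {name: sorted(classify_column(values)) for name, values in columns.items()}


dataset = {
    "contact": ["alice@example.com", "bob@example.org"],
    "ssn": ["123-45-6789"],
    "phone": ["415-555-0123"],
    "notes": ["prefers morning appointments"],
}
tags = tag_dataset(dataset)
flagged = sorted(col for col, found in tags.items() if found)
print(flagged)  # → ['contact', 'phone', 'ssn']
```

The resulting tags can be logged alongside a dataset version so that later pipeline stages can check them before training.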

Professional AI consulting services can help design and build compliance dashboards that provide real-time visibility into privacy metrics and potential violations. Make sure the team sets up automated data retention policies that systematically remove unnecessary information. Configure your CI/CD pipeline to block deployments that fail privacy requirements, treating privacy violations with the same priority as functional bugs.
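A deployment gate like this can be a small script that the CI/CD pipeline runs after training, failing the build on any privacy violation. The sketch below assumes a hypothetical privacy report (a dict with `epsilon_spent`, `pii_columns`, and `max_record_age_days` keys) produced earlier in the pipeline, along with hypothetical thresholds. It fails closed: a missing metric counts as a failure, not a pass.

```python
import sys
from typing import List

# Hypothetical thresholds; real values come from your privacy policy.
MAX_EPSILON = 3.0
MAX_RECORD_AGE_DAYS = 30


def privacy_gate(report: dict) -> List[str]:
    """Return a list of failure messages; an empty list means the gate passes."""
    failures = []
    epsilon = report.get("epsilon_spent")
    if epsilon is None or epsilon > MAX_EPSILON:
        failures.append(f"epsilon_spent={epsilon} is missing or exceeds {MAX_EPSILON}")
    pii = report.get("pii_columns")
    if pii is None or pii:
        failures.append(f"pii_columns={pii} is missing or lists untreated PII")
    age = report.get("max_record_age_days")
    if age is None or age > MAX_RECORD_AGE_DAYS:
        failures.append(f"max_record_age_days={age} is missing or exceeds {MAX_RECORD_AGE_DAYS}")
    return failures


def main(report: dict) -> int:
    failures = privacy_gate(report)
    for failure in failures:
        print(f"PRIVACY CHECK FAILED: {failure}", file=sys.stderr)
    return 1 if failures else 0  # a non-zero exit code blocks the deployment


# In CI (hypothetical file name):
#   sys.exit(main(json.load(open("privacy_report.json"))))
```

Failing closed matters here: if the step that produces a metric silently breaks, the deployment stops instead of shipping an unchecked model.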

Encryption and Secure Data Pipelines

Map out the entire data flow and identify privacy vulnerabilities at each stage. Use TLS 1.3 for all data in transit, with certificate pinning for sensitive connections. For stored data, use envelope encryption with regular key rotation.
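For the transport layer, Python's standard `ssl` module can enforce the TLS 1.3 minimum. A simple form of certificate pinning compares the SHA-256 fingerprint of the server's leaf certificate with a value shipped in advance. This sketch rests on several assumptions. The helper names and pinned value are hypothetical. It pins the whole certificate rather than its public key, so the pin must be updated whenever the certificate is renewed. Envelope encryption for stored data is not shown, because it needs a cryptography library or a cloud key management service.

```python
import hashlib
import hmac
import socket
import ssl


def make_tls13_client_context() -> ssl.SSLContext:
    """Client context that verifies certificates and hostnames and refuses anything below TLS 1.3."""
    ctx = ssl.create_default_context()  # CERT_REQUIRED and check_hostname=True by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx


def certificate_matches_pin(der_cert: bytes, pinned_sha256_hex: str) -> bool:
    """Constant-time comparison of the certificate's SHA-256 fingerprint with the pin."""
    fingerprint = hashlib.sha256(der_cert).hexdigest()
    return hmac.compare_digest(fingerprint, pinned_sha256_hex.lower())


def open_pinned_connection(host: str, pinned_sha256_hex: str, port: int = 443) -> ssl.SSLSocket:
    """Connect over TLS 1.3 and abort if the server certificate does not match the pin."""
    ctx = make_tls13_client_context()
    sock = socket.create_connection((host, port), timeout=10)
    try:
        tls = ctx.wrap_socket(sock, server_hostname=host)
    except Exception:
        sock.close()
        raise
    der_cert = tls.getpeercert(binary_form=True)
    if der_cert is None or not certificate_matches_pin(der_cert, pinned_sha256_hex):
        tls.close()
        raise ssl.SSLError(f"certificate pin mismatch for {host}")
    return tls
```

The pin check runs only after normal certificate and hostname validation succeeds, so pinning adds a constraint on top of standard TLS verification rather than replacing it.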

Challenges in Implementing Privacy-Preserving AI

- Accuracy vs. Privacy Tradeoff: Differential privacy may reduce model performance. Mitigate this with fine-tuning and privacy budget calibration.

- Resource Constraints on Devices: On-device compute and memory are limited. Use lightweight models (e.g., quantized models, TinyML).

- Complexity in Federated Workflows: Federated systems are harder to orchestrate. Mitigate this with frameworks like TensorFlow Federated (TFF) and Flower, combined with secure aggregation protocols.

- Explainability vs. Security: Making models interpretable can expose sensitive logic. Balance transparency with abstraction.
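The secure aggregation idea in the federated workflows item can be sketched with pairwise masking. Every pair of clients shares a random seed; one client adds the derived mask to its update and the other subtracts it. The server therefore sees only masked vectors, and the masks cancel in the sum. This simplified sketch makes several assumptions. Updates are integer-encoded, the pairwise key agreement is simulated with a shared seed table, Python's non-cryptographic `random` generates the masks, and clients dropping out are not handled. A real protocol must address all of these.

```python
import random
import secrets
from itertools import combinations
from typing import Dict, Iterable, List, Tuple

MODULUS = 2**32  # updates are assumed to be integer (fixed-point) encoded


def setup_pair_seeds(client_ids: Iterable[int]) -> Dict[Tuple[int, int], int]:
    """Stand-in for a pairwise key agreement: one shared random seed per client pair."""
    return {pair: secrets.randbits(64) for pair in combinations(sorted(client_ids), 2)}


def expand(seed: int, dim: int) -> List[int]:
    rng = random.Random(seed)  # toy mask generator; a real protocol uses a cryptographic PRG
    return [rng.randrange(MODULUS) for _ in range(dim)]


def mask_update(client_id: int, update: List[int], seeds: Dict[Tuple[int, int], int]) -> List[int]:
    """Add each pairwise mask if this client is the lower ID in the pair, subtract it otherwise."""
    masked = [u % MODULUS for u in update]
    for (a, b), seed in seeds.items():
        if client_id not in (a, b):
            continue
        sign = 1 if client_id == a else -1
        mask = expand(seed, len(update))
        masked = [(m + sign * r) % MODULUS for m, r in zip(masked, mask)]
    return masked


def aggregate(masked_updates: List[List[int]]) -> List[int]:
    """Server side: the pairwise masks cancel, leaving only the sum of the updates."""
    return [sum(column) % MODULUS for column in zip(*masked_updates)]


updates = {1: [10, 20, 30], 2: [1, 2, 3], 3: [100, 200, 300]}
seeds = setup_pair_seeds(updates)
masked = [mask_update(cid, upd, seeds) for cid, upd in updates.items()]
print(aggregate(masked))  # → [111, 222, 333]
```

Each masked vector on its own looks random to the server; only the total is meaningful.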

Tools and Frameworks for Privacy-First AI

Several specialized tools and frameworks support privacy-first AI development, making implementation more accessible without requiring deep cryptography or distributed systems expertise. Hire AI developers with professional expertise in using AI tools like:

| Framework / Library | Key Capabilities & Use Cases |
| --- | --- |
| PySyft (OpenMined) | Extends PyTorch/TensorFlow for federated learning, differential privacy, and encrypted computation. Works with PyGrid for multi-org orchestration and PyDP for DP APIs. |
| Flower | Framework-agnostic federated learning library with a client-centric architecture. Scales from research to production across diverse environments. |
| TensorFlow Federated | Offers secure aggregation and high- & low-level APIs for federated learning on TensorFlow. Suitable for both quick deployment and custom research algorithms. |
| TensorFlow Lite + Edge TPU | On-device AI using model optimization (quantization, pruning). Delivers low-latency, privacy-preserving inference with Edge TPU acceleration. |
| Microsoft SEAL | Enables computation directly on encrypted data using homomorphic encryption. Ideal for outsourcing secure data processing without compromising privacy. |

OpenMined/PySyft

OpenMined’s PySyft extends PyTorch and TensorFlow with privacy-preserving capabilities, enabling federated learning, differential privacy, and encrypted computation within familiar ML frameworks. The ecosystem includes PyGrid for orchestrating federated learning across organizational boundaries and PyDP for implementing Google’s differential privacy algorithms with simple Python APIs.

Flower

Flower provides a lightweight yet powerful federated learning framework for flexibility across diverse ML frameworks and deployment environments. Its client-centric architecture allows integration with existing ML pipelines regardless of framework choice, while its scalable server implementation supports everything from research prototypes to production deployments.

TensorFlow Federated

TensorFlow Federated (TFF) offers federated learning APIs with built-in secure aggregation protocols that protect individual contributions. TFF provides high-level APIs for common federated learning patterns and low-level APIs for custom algorithm development, making it suitable for both quick implementations and advanced research.

TensorFlow Lite/Edge TPU

TensorFlow Lite optimizes models for on-device deployment with tools for quantization, pruning, and compression while aiming to preserve accuracy. When paired with Edge TPU hardware accelerators, these optimized models deliver low-latency, privacy-preserving inference that can approach the performance of cloud-based alternatives for many workloads, enabling sophisticated AI capabilities without data leaving the device.

Microsoft SEAL

Microsoft SEAL is an open-source homomorphic encryption library that lets developers compute on encrypted data without decrypting it first. This enables organizations to outsource sensitive data processing to untrusted environments while keeping cryptographic privacy guarantees, opening new possibilities for secure multi-party computation.
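SEAL itself is a C++ library, and its API is not reproduced here. Secure multi-party computation, which the paragraph mentions, is a separate family of techniques. The sketch below shows one of its basic building blocks, additive secret sharing. Several hypothetical organizations compute their combined total without revealing their own values. Each one splits its number into random shares that sum to it modulo a prime. Each party then adds up the shares it received and publishes only that partial sum. The sketch assumes honest-but-curious parties that follow the protocol and do not collude, and the function names are hypothetical.

```python
import secrets
from typing import List

PRIME = 2**61 - 1  # a Mersenne prime used as the field modulus


def share(secret: int, n_parties: int) -> List[int]:
    """Split `secret` into n random shares that sum to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % PRIME)
    return shares


def reconstruct(shares: List[int]) -> int:
    return sum(shares) % PRIME


def secure_sum(private_values: List[int]) -> int:
    """Simulate n parties jointly computing the sum of their private inputs."""
    n = len(private_values)
    # Party i splits its value and sends share j to party j.
    all_shares = [share(v, n) for v in private_values]
    # Party j adds up the shares it received; each share alone looks uniformly random.
    partial_sums = [sum(all_shares[i][j] for i in range(n)) % PRIME for j in range(n)]
    # Publishing only the partial sums reveals the total, not the individual inputs.
    return reconstruct(partial_sums)


print(secure_sum([120, 340, 95]))  # → 555
```

Unlike homomorphic encryption, secret sharing requires no heavy cryptography, but it relies on multiple parties staying online and not colluding.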

Future of AI Security: Decentralized and Privacy-Centric

The future of AI development increasingly points toward decentralized, privacy-centric architectures.

Confidential Computing

One emerging trend in AI data protection is confidential computing, which runs workloads inside hardware-based trusted execution environments (TEEs) that isolate data and code even from the host operating system and cloud operator.

Zero-Knowledge Proofs

Zero-knowledge proofs let one party prove that a statement about some data is true without revealing the underlying data. This can make identity verification safer, since users can prove a claim (for example, that they are over 18) without sharing the actual documents.
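A classic example is the Schnorr identification protocol, sketched below. A prover convinces a verifier that it knows a secret exponent x behind a public value y = g^x mod p, without ever sending x. The tiny group parameters keep the numbers readable and are completely insecure. Real systems use standardized large groups or elliptic curves and usually make the protocol non-interactive. The function names are hypothetical.

```python
import secrets
from typing import Tuple

# Toy parameters: p = 2q + 1 with p and q prime; g = 4 generates the subgroup of order q.
P, Q, G = 2039, 1019, 4


def keygen() -> Tuple[int, int]:
    """Prover's secret x in [1, q-1] and public value y = g^x mod p."""
    x = secrets.randbelow(Q - 1) + 1
    return x, pow(G, x, P)


def commit() -> Tuple[int, int]:
    """Prover picks a fresh random r, keeps it private, and sends t = g^r mod p."""
    r = secrets.randbelow(Q)
    return r, pow(G, r, P)


def respond(x: int, r: int, c: int) -> int:
    """Prover's answer to challenge c; r masks x, so s alone reveals nothing about it."""
    return (r + c * x) % Q


def verify(y: int, t: int, c: int, s: int) -> bool:
    """Accept iff g^s == t * y^c (mod p), which holds when s = r + c*x (mod q)."""
    return pow(G, s, P) == (t * pow(y, c, P)) % P


x, y = keygen()           # y is published; x never leaves the prover
r, t = commit()           # 1. prover sends commitment t
c = secrets.randbelow(Q)  # 2. verifier sends a random challenge
s = respond(x, r, c)      # 3. prover sends response s
print(verify(y, t, c, s))  # → True
```

A prover that does not know x can pass a round only by guessing the challenge in advance, a 1-in-q chance, so repeating the protocol or using a large q makes cheating impractical.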

Privacy as a Competitive Advantage

Customers are increasingly careful about which companies they trust with their private and sensitive information. Companies that establish themselves as privacy-first can gain an edge over competitors through greater customer trust.

Final Words

Building privacy-first AI is both a technical challenge and an ethical necessity. By adopting secure AI principles such as data minimization and on-device processing, along with the other techniques covered here, businesses can deliver robust AI applications while respecting user privacy.

The approaches outlined in this guide offer practical pathways to AI systems that comply with regulations, build user trust, and meet the growing demand for secure AI application development.

FAQs on Privacy-First AI Software Development

What are some key techniques for building Privacy-First AI applications?

Key techniques include data minimization, differential privacy, federated learning, anonymization, and secure multi-party computation. These help limit data exposure while enabling practical AI model training.

How do data privacy regulations like GDPR and CCPA impact AI development?

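Regulations like GDPR and CCPA require AI systems to provide transparency, honor user rights such as consent or opt-out, and protect personal data. GDPR also requires meaningful information about the logic behind certain automated decisions, and both laws restrict how personal data is collected, processed, and stored.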

What are the challenges in building Privacy-First AI applications?

The challenges include limited access to rich datasets, maintaining model performance with anonymized or noise-added data, limited compute on edge devices, the complexity of orchestrating federated systems, and high upfront implementation costs.

What are the benefits of On-Device AI for privacy?

On-device AI processes data locally, reducing reliance on cloud storage and minimizing data leakage risks. It enhances privacy, speeds up response times, and supports offline functionality.