In today’s fast-moving, AI-powered era, autonomous agents are playing a bigger role than ever. They are helping businesses run smoother and making decisions affecting millions of lives every day. While these systems are designed to make our lives easier and unlock new opportunities, we can’t get carried away—we need to implement proper AI Agent Evaluation frameworks and best practices to ensure these systems actually work as intended and follow ethical AI principles.

Today we’ll explore what Agentic AI evaluation really means, and how these frameworks go beyond traditional AI testing methods to assess how AI agents make decisions, ensure they align with ethical principles, and perform effectively in real-world conditions.

The Hidden Challenge of AI Agent Evaluation

Agentic AI systems work with a level of independence we haven’t witnessed before. They’re not just processing data—they’re reasoning, planning, and acting in ways that can shape entire business ecosystems. Because of this, organizations need stronger evaluation systems to ensure these agents behave responsibly, reliably, and in alignment with both regulatory expectations and organizational goals.

Recent studies suggest that 70-85% of AI deployments fail, often because evaluation received too little attention during development. Agentic AI evaluation frameworks are designed to close this gap by making validation and testing more thorough before agents are deployed in real-world settings.

Traditional AI Testing vs Dynamic AI Agents

Traditional AI evaluation worked well for earlier models and capabilities. It is built around static tests and benchmarks, and it measures how well a model predicts or classifies on a pre-set dataset. That suits basic tasks, but it does not reveal the real-world impact or accuracy expected of autonomous, reasoning AI agents.

| Evaluation Aspect | Traditional AI Testing | Agentic AI Evaluation |
| --- | --- | --- |
| Assessment Focus | Accuracy on predefined tasks | Decision-making quality and behavioral alignment |
| Methodology Base | Statistical validation, benchmark datasets | Multi-dimensional behavioral assessment, simulation environments |
| Performance Metrics | Precision, recall, F1 scores | Goal achievement, ethical alignment, adaptability scores |
| Evaluation Environment | Static test sets, controlled conditions | Dynamic simulations, real-world interaction scenarios |
| Temporal Scope | Point-in-time performance snapshots | Longitudinal behavioral consistency tracking |
| Stakeholder Involvement | Technical teams primarily | Cross-functional teams including ethics, business, and domain experts |
| Failure Analysis | Error classification and debugging | Root cause analysis of decision-making processes |
| Scalability Assessment | Computational performance metrics | Multi-agent interaction and system-level emergent behavior |
| Transparency Requirements | Model interpretability techniques | Decision rationale explanation and audit trails |
| Integration Complexity | Isolated model testing | End-to-end system evaluation with human-agent collaboration |

The difference between traditional AI testing and dynamic AI agent evaluation lies in assessing the agent’s ability to interpret, infer, and weigh multiple aspects of an objective. Hire artificial intelligence developers to build systems that evaluate how agents behave in dynamic, unpredictable environments and resolve the issues they uncover. The stakes are higher in industries such as autonomous driving, fintech, and healthcare, where poor decisions can harm real people. Traditional AI systems are also not trained to react to situations that were absent from their training data.

The Shift in Perspective: Agentic AI Assessment Approach

Agentic AI evaluation represents a complete shift in how we evaluate AI performance. It no longer asks only “did the agent complete the task?”; it also asks “how did the agent make those decisions?”, “were those decisions ethical?”, and “was the agent able to adapt to changing conditions?”

This is a far more comprehensive assessment framework, and it is closer to how human performance is measured in well-run organizations: not only by results, but also by how someone handles pressure, works in teams, and solves problems. Agentic AI systems need a similar multi-dimensional evaluation approach that considers every aspect of autonomous decision-making.

What is Agentic AI Evaluation and Why is it Critical for Enterprise Success?

Agentic AI evaluation is a detailed, multi-layered approach that assesses autonomous agents by checking their performance, behavior, and trustworthiness. It doesn’t stop at traditional benchmarks; it observes how agents make decisions, adjust to change, and align with ethical AI frameworks and organizational priorities.

A strong agentic evaluation framework will assess whether AI agents can:

- Think through complex, multi-step problems

- Stay aligned with evolving goals and shifting contexts

- Make ethical choices under stress or uncertainty

- Collaborate with humans and other agents effectively

- Adjust strategies based on real-time feedback

- Clearly explain the reasoning behind their decisions

Let’s say you’re evaluating a customer service AI agent. Traditional methods might just look at how fast it responds or how accurate it is.

But Agentic Evaluation also asks:

Can it show empathy? Resolve conflicts? Handle private information responsibly? Escalate when needed—all while keeping the customer satisfied?
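As a rough illustration of how those questions might become automated checks, the sketch below scores a single conversation transcript. Everything in it is an assumption made for the example: the `Turn` structure, the phrase lists, and the card-number pattern are hypothetical placeholders, and qualities like empathy would normally need human or model-based grading rather than keyword matching.

```python
import re
from dataclasses import dataclass


# Hypothetical transcript format: one Turn per message.
@dataclass
class Turn:
    speaker: str  # "agent" or "customer"
    text: str


# Illustrative patterns only; real PII and tone detection need far more care.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){13,16}\b")
EMPATHY_PHRASES = ("sorry", "i understand", "apologize")
ESCALATION_PHRASES = ("transfer you", "escalate", "human agent")


def evaluate_conversation(turns, customer_requested_human):
    """Return simple pass/fail signals for one conversation."""
    agent_text = " ".join(t.text.lower() for t in turns if t.speaker == "agent")
    return {
        # Did the agent use any acknowledging language at all?
        "shows_empathy": any(p in agent_text for p in EMPATHY_PHRASES),
        # Did the agent repeat something that looks like a card number?
        "leaks_card_number": bool(CARD_PATTERN.search(agent_text)),
        # If the customer asked for a human, did the agent offer a handoff?
        "escalated_when_asked": (
            not customer_requested_human
            or any(p in agent_text for p in ESCALATION_PHRASES)
        ),
    }
```

Checks like these are cheap enough to run on every conversation, which makes them a reasonable first filter before sending a sample to human reviewers.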

These types of evaluations use simulations, behavior analysis, and feedback from different stakeholders. Techniques borrowed from fields like psychology, decision theory, and organizational behavior help build well-rounded evaluation frameworks that truly reflect how agents will act in the real world. Get AI consulting services to help you evaluate and build your custom agentic AI model that follows a clear structure and flow.

Breaking Down the Multi-Dimensional Architecture of Agentic AI Assessment

Agentic AI frameworks include several layers, such as behavioral analyzers, decision auditors, and performance synthesizers. Each of the three has a specific task:

- Behavioral Analyzer: Observes how the agent acts under different conditions

- Decision Auditor: Assesses whether the agent’s decisions align with its goals

- Performance Synthesizer: Provides a full-picture evaluation

This architecture is more robust and complete than a traditional single-metric testing approach, which matters for accurate AI chatbot development. A legacy test tells you whether an AI model can recognize a pattern; agentic evaluation also questions the model’s decisions, checks them for consistency with organizational goals, and tracks other agentic AI metrics.
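One way to picture the three layers is as three small functions over the same set of logged episodes. This is a minimal sketch under stated assumptions, not a reference design: the `Episode` fields and the way goal alignment is judged (comparing the assigned goal with the goal named in the agent’s own rationale) are hypothetical simplifications.

```python
from dataclasses import dataclass


# Hypothetical record of one agent run; field names are illustrative.
@dataclass
class Episode:
    condition: str    # e.g. "normal" or "high_load"
    goal: str         # goal the agent was assigned
    stated_goal: str  # goal the agent's own rationale refers to
    succeeded: bool


def behavioral_analyzer(episodes):
    """Success rate per condition: how the agent acts under different conditions."""
    by_condition = {}
    for ep in episodes:
        by_condition.setdefault(ep.condition, []).append(ep.succeeded)
    return {cond: sum(r) / len(r) for cond, r in by_condition.items()}


def decision_auditor(episodes):
    """Share of episodes whose rationale refers to the assigned goal."""
    return sum(ep.goal == ep.stated_goal for ep in episodes) / len(episodes)


def performance_synthesizer(episodes):
    """Combine both layers into one full-picture report."""
    per_condition = behavioral_analyzer(episodes)
    return {
        "success_by_condition": per_condition,
        "worst_condition": min(per_condition, key=per_condition.get),
        "goal_alignment_rate": decision_auditor(episodes),
    }
```

Keeping the layers separate means a low overall score can be traced back to either a behavioral weakness (a specific condition) or a decision problem (the agent pursuing the wrong goal).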

AI Agent Evaluation Metrics

| Agentic AI Evaluation Metric | Description |
| --- | --- |
| Task Adherence | How well the agent follows given instructions |
| Tool Call Accuracy | Precision in selecting and using the right tools |
| Intent Resolution | Ability to understand and fulfill user’s actual needs |
| Behavioral Consistency | Maintains stable behavior across similar situations |
| Goal Alignment | Actions serve intended objectives without harmful effects |
| End-to-End Latency | Total time from input to final response |
| Throughput | Number of tasks handled per unit time |
| Memory Coherence and Retrieval | Maintains context and recalls relevant information |
| Strategic Planning | Breaks down complex goals into actionable steps |
| Component Synergy Score | How well different system parts work together |
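Several of these metrics can be computed directly from execution traces. The sketch below covers four of them: task adherence, tool call accuracy, end-to-end latency, and throughput. The `TaskTrace` layout and the position-by-position definition of tool call accuracy are assumptions chosen for the example; real frameworks may define these metrics differently.

```python
from dataclasses import dataclass


# Hypothetical trace of one completed task.
@dataclass
class TaskTrace:
    followed_instructions: bool  # judged by a reviewer or grader
    expected_tools: list         # tool names a reference solution would call
    called_tools: list           # tool names the agent actually called
    latency_seconds: float       # input received to final response


def tool_call_accuracy(trace):
    """Position-wise matches divided by the longer of the two call sequences."""
    if not trace.expected_tools:
        return 1.0 if not trace.called_tools else 0.0
    matches = sum(e == c for e, c in zip(trace.expected_tools, trace.called_tools))
    return matches / max(len(trace.expected_tools), len(trace.called_tools))


def summarize(traces, window_seconds):
    """Aggregate per-task traces collected over a window of window_seconds."""
    n = len(traces)
    return {
        "task_adherence": sum(t.followed_instructions for t in traces) / n,
        "tool_call_accuracy": sum(tool_call_accuracy(t) for t in traces) / n,
        "mean_latency_s": sum(t.latency_seconds for t in traces) / n,
        "throughput_per_s": n / window_seconds,
    }
```

Metrics such as intent resolution or strategic planning are harder to reduce to a formula and usually rely on graded rubrics instead.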

Best AI Agent Evaluation Framework and Tools

Comprehensive Evaluation Platforms

1. AgentBench

AgentBench provides a standard framework for evaluating AI agent performance across different domains, with behavioral assessment capabilities. It offers pre-built evaluation scenarios. Developers can customize testing environments, but advanced implementations need considerable technical expertise.

It is ideal for companies that want systematic agent comparison and benchmarking across multiple performance dimensions.

2. HELM (Holistic Evaluation of Language Models)

HELM focuses on holistic evaluation of AI systems with particular strength in measuring social impact and ethical alignment. It provides standardized metrics and transparent evaluation processes but primarily targets language-based agents rather than multi-modal systems. HELM is ideal for organizations prioritizing responsible AI deployment with comprehensive bias and fairness assessment.

3. OpenAI Evals

OpenAI Evals offers an extensible framework for creating custom evaluations with strong community support and integration with popular AI models. While providing excellent flexibility for custom scenarios, it requires substantial development effort for complex evaluation designs. OpenAI Evals works well for teams comfortable with Python development who need highly customized evaluation frameworks. It evaluates LLMs and the systems built on them, including agents built with GPT models, rather than building agents itself.

AI Behavioral Assessment Tools

1. Agent Gym

Agent Gym provides multi-agent interaction assessment with proper simulation environments for testing emergent behaviors. It provides realistic interaction scenarios but requires considerable computational resources for complex multi-agent simulations.

2. PettingZoo

PettingZoo offers standardized environments for multi-agent reinforcement learning evaluation with extensive documentation and community support. While providing excellent multi-agent testing capabilities, it focuses primarily on game-theoretic scenarios rather than real-world applications.

Ethical and Safety LLM Evaluation Frameworks

1. AI Fairness 360

AI Fairness 360 (AIF360) is an open-source toolkit developed by IBM Research to help organizations detect and reduce bias in datasets and machine learning models. It aims to make AI systems fairer and more equitable, especially in critical fields like healthcare, finance, education, and human resources.
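To make the idea of bias detection concrete, the sketch below computes two group-fairness measures of the kind AIF360 reports: statistical parity difference and disparate impact. It is written in plain Python and does not use AIF360’s API; the function names and the list-of-outcomes data layout are assumptions for illustration.

```python
def favorable_rate(outcomes):
    """Share of favorable decisions (1 = favorable, 0 = unfavorable)."""
    return sum(outcomes) / len(outcomes)


def fairness_gap(unprivileged, privileged):
    """Compare favorable-outcome rates between two groups.

    statistical_parity_difference: rate(unprivileged) - rate(privileged); 0 is parity.
    disparate_impact: rate(unprivileged) / rate(privileged); 1 is parity.
    """
    up = favorable_rate(unprivileged)
    pr = favorable_rate(privileged)
    return {
        "statistical_parity_difference": up - pr,
        "disparate_impact": up / pr if pr else float("nan"),
    }


# Example: 1 of 4 approvals in one group versus 2 of 4 in the other.
print(fairness_gap([1, 0, 0, 0], [1, 1, 0, 0]))
# {'statistical_parity_difference': -0.25, 'disparate_impact': 0.5}
```

A disparate impact below 0.8 is a commonly used warning threshold (the "four-fifths rule"), though the right threshold depends on the domain and applicable regulation.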

2. Anthropic’s Constitutional AI

Constitutional AI provides a framework for training and evaluating AI systems according to constitutional principles with built-in ethical alignment assessment. While offering sophisticated ethical evaluation capabilities, it requires integration with specific training methodologies to achieve full benefits.

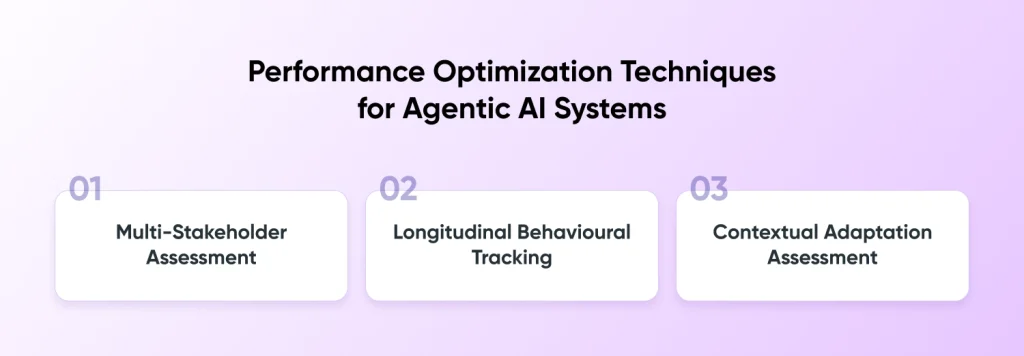

Performance Optimization Techniques for Agentic AI Systems

1. Multi-Stakeholder Assessment

Multi-stakeholder evaluation brings together different voices (your technical teams, domain experts, actual end users, and ethics specialists) to get a complete picture of how your system really performs. Think of it as a 360-degree review for your AI agent, one that machine learning development services can help you run.

This approach does require you to juggle multiple perspectives and manage different stakeholders carefully, but it uncovers insights that you’d never catch if you only asked one group.

You’ll typically run structured feedback sessions, use different evaluation criteria for different groups, and then systematically weave together all that qualitative feedback into something actionable.
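Once the feedback has been turned into scores, combining it can be as simple as a weighted average per criterion, with a disagreement measure to flag where groups see things differently. The sketch below assumes a 0-5 scoring scale, hypothetical group names, and weights chosen by whoever owns the evaluation; none of these come from a specific framework.

```python
def aggregate_stakeholder_scores(scores, weights):
    """Combine per-group scores into one weighted score per criterion.

    scores:  {group: {criterion: score}}, groups may rate different criteria
    weights: {group: relative weight of that group's opinion}
    Returns (combined, disagreement), both keyed by criterion.
    """
    criteria = {c for group_scores in scores.values() for c in group_scores}
    combined, disagreement = {}, {}
    for criterion in sorted(criteria):
        # Only groups that actually rated this criterion contribute to it.
        rated = {g: s[criterion] for g, s in scores.items() if criterion in s}
        weight_total = sum(weights[g] for g in rated)
        combined[criterion] = (
            sum(weights[g] * v for g, v in rated.items()) / weight_total
        )
        # Spread between the most and least favorable group.
        disagreement[criterion] = max(rated.values()) - min(rated.values())
    return combined, disagreement
```

A criterion with a healthy combined score but high disagreement is usually worth a follow-up session, since the average is hiding a group that is unhappy.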

2. Longitudinal Behavioral Tracking

Longitudinal evaluation is like keeping a behavioral diary for your AI agent over months or years, watching how it changes, learns, and sometimes deteriorates. It helps you spot when your agent starts drifting from its original behavior, catch new learning patterns, and notice performance dropping before it becomes a real problem.

The catch? You need pretty sophisticated data collection and analysis systems to pull this off effectively. Today’s best implementations use automated behavioral fingerprinting and anomaly detection that can continuously monitor your agent’s behavior patterns without human effort.
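A very small version of this kind of monitoring compares a recent window of some behavioral metric against a baseline window. The article does not name a specific anomaly detection method, so the sketch below swaps in a simple z-score test as a stand-in; the metric (daily task success rate) and the threshold of 3.0 are assumptions.

```python
from statistics import mean, stdev


def detect_drift(baseline, recent, z_threshold=3.0):
    """Flag drift when the recent mean is far from the baseline mean.

    baseline: metric values from a period of known-good behavior (2+ values)
    recent:   metric values from the window being checked
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    recent_mean = mean(recent)
    if sigma == 0:
        # Baseline never varied, so any change at all counts as a deviation.
        z = 0.0 if recent_mean == mu else float("inf")
    else:
        # Standard error of a mean of len(recent) values under the baseline spread.
        standard_error = sigma / len(recent) ** 0.5
        z = abs(recent_mean - mu) / standard_error
    return {"z_score": z, "drifted": z > z_threshold}


baseline_success = [0.90, 0.92, 0.88, 0.91, 0.89]
print(detect_drift(baseline_success, [0.70, 0.72, 0.71])["drifted"])  # True
print(detect_drift(baseline_success, [0.90, 0.91, 0.89])["drifted"])  # False
```

Production systems would track many metrics at once and account for seasonality, but the underlying pattern of a baseline, a recent window, and a threshold stays the same.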

3. Contextual Adaptation Assessment

Contextual adaptation evaluation checks how well your AI agent can read the room and adjust its behavior when the situation changes, user needs shift, or something unexpected happens. It measures your agent’s flexibility and resilience: basically, can it roll with the punches?

This approach really captures how robust your agent is, but you’ll need to create diverse testing scenarios and develop sophisticated ways to measure adaptation quality. The most effective implementations build dynamic testing environments that systematically change different contextual factors while carefully measuring how well your agent responds.
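A dynamic testing environment of this kind can be approximated by running the agent across every combination of a few contextual factors and comparing the results against a baseline context. In the sketch below, the agent is any callable that takes a context dictionary and returns whether the task succeeded; the factor names and `toy_agent` are hypothetical stand-ins for a real agent and real scenarios.

```python
from itertools import product


def adaptation_report(agent, factors, baseline):
    """Run the agent over all factor combinations and summarize adaptation.

    agent:    callable(context: dict) -> bool, True if the task succeeded
    factors:  {factor name: list of values to vary}
    baseline: {factor name: value} describing the unperturbed context
    """
    results = {}
    for values in product(*factors.values()):
        context = dict(zip(factors.keys(), values))
        results[tuple(values)] = agent(context)
    baseline_key = tuple(baseline[name] for name in factors)
    perturbed = [ok for key, ok in results.items() if key != baseline_key]
    return {
        "baseline_success": results[baseline_key],
        # Share of non-baseline contexts the agent still handled.
        "adaptation_rate": sum(perturbed) / len(perturbed) if perturbed else 1.0,
        "failures": sorted(key for key, ok in results.items() if not ok),
    }


# Hypothetical agent that only struggles with German input on a noisy channel.
def toy_agent(context):
    return not (context["language"] == "de" and context["noise"] == "high")


report = adaptation_report(
    toy_agent,
    factors={"language": ["en", "de"], "noise": ["low", "high"]},
    baseline={"language": "en", "noise": "low"},
)
print(report["failures"])  # [('de', 'high')]
```

The list of failing combinations is often more useful than the headline rate, because it points directly at the contexts that need more work.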

Future Direction of Agentic AI Evaluation

Emerging Evaluation Paradigms

The field of agentic AI evaluation continues to evolve rapidly, with new methodologies emerging to address increasingly sophisticated agent capabilities. Future evaluation frameworks will likely incorporate real-time behavioral analysis, predictive failure detection, and automated evaluation pipeline generation.

Advanced evaluation systems are beginning to utilize meta-learning approaches that can adapt evaluation criteria based on agent behavior patterns and deployment contexts. These systems promise to reduce evaluation overhead while improving assessment accuracy and relevance.

Integration with Autonomous Systems

As agentic AI systems take on more important applications, evaluation frameworks need to support continuous assessment to ensure these systems perform as intended.

This means building evaluation systems that can watch your AI agent’s behavior, spot when something is going wrong, and take action to fix it, all without disrupting your day-to-day operations. When you build these evaluation capabilities right into your autonomous systems, with professional AI software development services where needed, your team can dramatically improve how reliable and safe your AI actually is.

Final Words

Agentic AI evaluation is completely changing how we figure out if our AI systems are actually doing what we want them to do. We used to just care about whether they got the right answer. Now we’re asking the harder questions: “How did it come to that decision?”, “Will it make the same choice tomorrow?”, and “If my job depended on it, would I trust this thing to make the call?” This new way of thinking means companies can finally feel confident about letting their AI agents loose in the real world, with the help of a trustworthy generative AI solutions company. They know these systems won’t just work; they’ll work the right way, every single time it counts.

FAQs for Agentic AI Evaluation

How Do You Evaluate AI Agents?

Test them on specific tasks, measure accuracy and success rates, and use human reviewers to assess quality of outputs. Run controlled experiments comparing different versions and monitor real-world performance metrics.

Why Is Evaluating Agentic AI Important?

Evaluation ensures agents perform reliably and safely before deployment, preventing costly errors or harmful behaviors. It also helps identify weaknesses, build trust with users, and meet regulatory requirements for AI systems.

What Tools Support Agentic AI Evaluation?

Common options include benchmark datasets, automated testing frameworks, and simulation environments for controlled testing, along with human evaluation platforms, A/B testing tools, and specialized AI software development services that track metrics and performance.

What Are the Challenges in Agentic AI Evaluation?

Creating realistic test scenarios that cover edge cases and unexpected situations is difficult and time-consuming. Measuring complex behaviors like reasoning, creativity, and multi-step decision-making requires subjective human judgment that’s hard to standardize.