Quick Summary: Discover how to build AI apps with .NET using tools like ML.NET, Azure AI, and Semantic Kernel. This blog covers .NET’s cross-platform flexibility, smooth deployment, and AI agent orchestration, making it a practical guide to transforming enterprise solutions with AI.

The world of application development is undergoing a major transformation, and AI is at the center of it. For many years, .NET has been a reliable platform for enterprise applications; now it’s evolving into one of the most capable platforms for building next-generation AI solutions.

If you’re a developer looking to move beyond simple CRUD (Create, Read, Update, Delete) applications and begin integrating advanced models, intelligent agents, and sophisticated data pipelines into your work, the .NET ecosystem is ready for you. Whether you’re building solutions internally or engaging an experienced partner such as CMARIX, a firm specializing in AI and .NET development, the framework provides the necessary foundation for success.

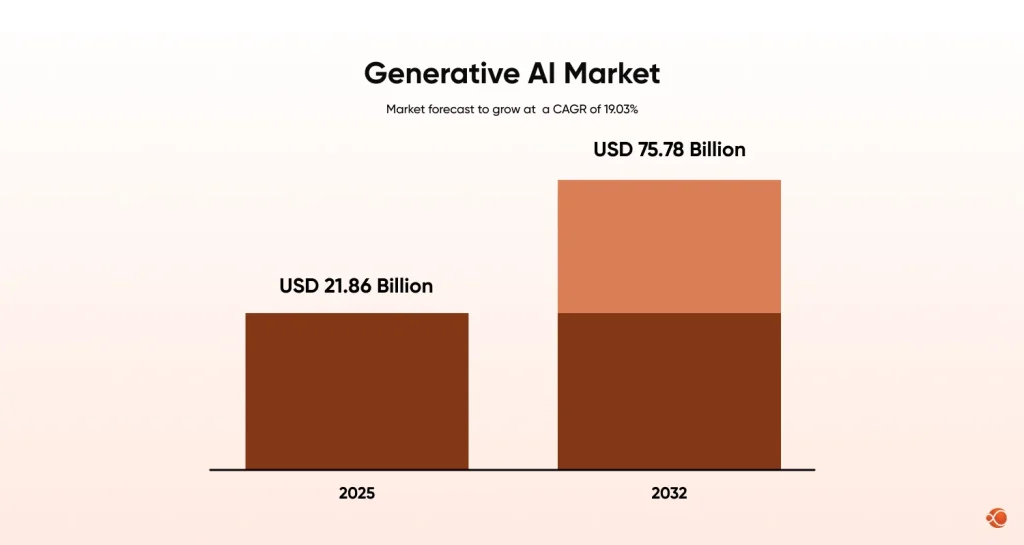

The global generative AI market is projected to grow from USD 18.43 billion in 2024 to USD 21.86 billion in 2025. That momentum reflects a compound annual growth rate (CAGR) of 19.32%, which would put the sector on course to reach USD 75.78 billion by 2032.

This blog will walk you through the whole landscape of .NET in AI development, from choosing the right frameworks to deploying complex, scalable agentic systems. We’ll explore what it takes to build AI apps with .NET that are ready for production.

Why Choose .NET for AI Application Development?

Evolution of .NET from Traditional Apps to AI-ready Frameworks

For a long time, there was a perception that AI and ML development belonged exclusively to Python. But that narrative has fundamentally changed. .NET has matured far beyond its origins in traditional, server-side enterprise apps. It now uses a modern, open-source, and cross-platform architecture that is perfectly suited for AI workloads.

The underlying design of the .NET AI experience is extensible, modeled after the proven ASP.NET Core middleware and dependency injection patterns. As a result, integrating complex AI logic feels familiar to any seasoned .NET developer.

The Current Landscape of AI Tooling in .NET

The current landscape for AI for .NET developers is rich with first-party Microsoft tools and seamless integrations with open-source favorites. We’re not just talking about wrapping existing APIs; we’re talking about deep, idiomatic C# integration.

Key to this is consistency. Abstractions like the new IChatClient interface can be registered through dependency injection and used in any .NET, Blazor, or ASP.NET Core application out of the box, providing a standardized way to interact with various large language models (LLMs). This consistency simplifies development dramatically when you need to switch model providers or integrate local models.
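The value of a shared abstraction is easiest to see in a small, dependency-free sketch. `IChatBackend`, `EchoCloudBackend`, `LocalStubBackend`, and `SupportService` are hypothetical names invented for illustration and are not part of Microsoft.Extensions.AI (whose real `IChatClient` exposes methods such as `GetResponseAsync`). The point is that the consuming class depends only on the interface, so switching providers changes one constructor argument or DI registration, not the business logic.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical stand-in for an abstraction like IChatClient (NOT the real API).
public interface IChatBackend
{
    Task<string> CompleteAsync(string prompt, CancellationToken ct = default);
}

// Hypothetical "cloud" backend; a real one would call a hosted model over HTTP.
public sealed class EchoCloudBackend : IChatBackend
{
    public Task<string> CompleteAsync(string prompt, CancellationToken ct = default) =>
        Task.FromResult($"[cloud] {prompt}");
}

// Hypothetical "local" backend; a real one might wrap an on-device model.
public sealed class LocalStubBackend : IChatBackend
{
    public Task<string> CompleteAsync(string prompt, CancellationToken ct = default) =>
        Task.FromResult($"[local] {prompt}");
}

// Consumer code depends only on the interface, so it never changes
// when the backend is swapped.
public sealed class SupportService
{
    private readonly IChatBackend _backend;

    public SupportService(IChatBackend backend) => _backend = backend;

    public Task<string> AnswerAsync(string question) =>
        _backend.CompleteAsync($"Answer briefly: {question}");
}
```

In a real app you would register the chosen implementation once in the DI container and let ASP.NET Core inject it wherever `SupportService`-style classes need it.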

For companies looking for .NET development services that include cutting-edge technology, embracing the AI-ready .NET framework is a straightforward choice that leverages existing talent and infrastructure.

Which AI Frameworks and Tools Are Essential in the .NET Stack?

To succeed when you build AI apps with .NET, whether that’s multi-agent systems or simple generative AI features, you need to understand the core toolkit. The .NET AI development ecosystem is deliberately designed to be flexible, supporting both traditional machine learning and the latest generative models.

Overview of ML.NET, Azure AI, and ONNX Runtime

- ML.NET: This is Microsoft’s dedicated, open-source machine learning framework for .NET. It simplifies machine learning development by allowing developers to integrate custom machine learning models into their applications without having to leave the .NET environment. It’s excellent for classic tasks like sentiment analysis, price prediction, and object detection.

- Azure AI: This suite of services provides powerful cloud-based AI capabilities, including pre-trained models for vision, speech, language, and the critical Azure OpenAI Service for deploying LLMs at scale.

- ONNX Runtime: The Open Neural Network Exchange (ONNX) Runtime lets developers run optimized machine learning models with high performance across platforms. This is key for model format interoperability and efficiency: you can train a model in PyTorch or TensorFlow, export it to ONNX, and run fast inference in your .NET app.

Cross-platform Support: Windows, Linux, macOS, and Mobile

A key strength of modern .NET is portability. Your AI applications can run anywhere, from a backend server on Linux to a mobile application on iOS or Android using .NET MAUI. This cross-platform support, combined with ONNX Runtime, is essential for next-generation .NET AI apps, where edge computing and local inference are becoming standard requirements.

Integrating Open and Local AI Models Efficiently

The ecosystem also supports open-source models. For instance, Llama models can run locally through LLamaSharp, and the IChatClient abstraction makes using them as simple as calling any commercial API. The same approach covers multiple model backends (GitHub Models, Azure OpenAI, local LLMs) and works with flexible orchestration tools (like Aspire), making local development, prototyping, and scaling to production seamless.

How to Implement Foundational AI Models in .NET

The quickest way to start your journey is by connecting your .NET app to a foundational LLM. The experience is highly abstracted, clean, and intuitive thanks to the unified APIs.

Working with LLM Providers (OpenAI, Google Gemini, Azure AI)

The core principle of integrating LLMs in .NET apps is abstraction. Libraries like Microsoft.Extensions.AI and Semantic Kernel provide a single set of interfaces, so whether you’re calling OpenAI, Google Gemini, or an Azure AI deployment, your C# code remains virtually identical. That freedom gives you real flexibility in your design.

Setting up Credentials and Configuration in appsettings.json

Configuration follows standard .NET best practices. Endpoint URLs and model names are typically managed in appsettings.json, while API keys belong in environment variables, user secrets, or a secret store such as Azure Key Vault. This keeps confidential information out of your source code and makes it easy to switch environments (development, staging, production).
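As a rough illustration of how layered configuration works, here is a dependency-free sketch that reads an `AI` section from appsettings-style JSON and lets environment variables override it. The section and key names (`AI`, `Endpoint`, `ModelId`, `ApiKey`) and the `AiSettingsLoader` class are hypothetical. In a real app, Microsoft.Extensions.Configuration does this for you, and it maps an environment variable such as `AI__ApiKey` to the key `AI:ApiKey` in the same way.

```csharp
using System;
using System.Text.Json;

// Hypothetical settings shape for a model provider.
public sealed record AiSettings(string Endpoint, string ModelId, string? ApiKey);

public static class AiSettingsLoader
{
    // Reads the "AI" section from appsettings-style JSON, then lets environment
    // variables override it using the "AI__Key" naming convention that .NET's
    // environment-variable configuration provider maps to "AI:Key".
    public static AiSettings Load(string appSettingsJson, Func<string, string?> getEnv)
    {
        using var doc = JsonDocument.Parse(appSettingsJson);
        var section = doc.RootElement.GetProperty("AI");

        string? Read(string key)
        {
            // Environment variables win over values from the JSON file.
            var fromEnv = getEnv($"AI__{key}");
            if (!string.IsNullOrEmpty(fromEnv)) return fromEnv;
            return section.TryGetProperty(key, out var value) ? value.GetString() : null;
        }

        return new AiSettings(
            Read("Endpoint") ?? throw new InvalidOperationException("AI:Endpoint is missing"),
            Read("ModelId") ?? throw new InvalidOperationException("AI:ModelId is missing"),
            Read("ApiKey")); // Secrets should come from the environment or a vault, not the JSON file.
    }
}
```

For example, `AiSettingsLoader.Load(File.ReadAllText("appsettings.json"), Environment.GetEnvironmentVariable)` would read the file and let any `AI__*` variables set in the deployment environment take precedence.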

Making the First API Call: Text Generation and Chat Completion

Once configured, the initial API call is straightforward. Using the injected IChatClient (or a similar service), you can send a prompt and stream or receive a full text response.

For example, a simple chat completion might look like:

```csharp
using System.Threading.Tasks;
using Microsoft.Extensions.AI;

public async Task<string> GenerateText(string prompt, IChatClient client)
{
    // The abstraction keeps this call identical regardless of the backend model.
    // GetResponseAsync and ChatResponse.Text come from Microsoft.Extensions.AI.
    ChatResponse response = await client.GetResponseAsync(prompt);
    return response.Text;
}
```

This clean structure is central to AI apps with .NET and significantly simplifies the maintenance of your AI features.

Semantic Kernel in .NET: How Does It Orchestrate Prompts and Logic?

If you want to move beyond simple, single-turn API calls, you need an orchestration layer. This is where Semantic Kernel (SK) steps in.

Why Semantic Kernel? The Bridge Between Code and Prompts

Semantic Kernel is an open-source SDK built around a central Kernel object that, much like an operating system kernel, coordinates the AI services and code your application uses. It provides a programming model that lets you seamlessly mix traditional C# code (known as Native Functions) with AI prompts (known as Semantic Functions, or prompt functions in current releases).

Why does this matter? LLMs are powerful reasoners, but they are poor at deterministic tasks like looking up a price in a database or making a secure API call. SK acts as the bridge between code and prompts, giving the LLM the tools it needs to complete complex, multi-step goals.

Defining Native and Semantic Functions

- Semantic Functions (Prompts): These are defined as parameterized prompts (e.g., “Summarize this text in the voice of a pirate”). They handle the creative and generative part of the work.

- Native Functions (Code): These are standard C# methods that the LLM can call. They handle deterministic, non-AI tasks, such as calling an external weather API or fetching a document from a database. Together, these functions are packaged into “Plugins” (called “Skills” in earlier versions of Semantic Kernel).
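To make the two kinds of function concrete, here is a simplified sketch. The `{{$input}}` placeholder syntax mirrors Semantic Kernel’s prompt template language, but `MiniPlugin`, its `Render` method, and the price table are hypothetical stand-ins, not Semantic Kernel APIs. In Semantic Kernel itself, the kernel renders prompt templates, and native functions are typically ordinary methods marked with the `[KernelFunction]` attribute.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

public static class MiniPlugin
{
    // A semantic (prompt) function is a parameterized template.
    // The {{$name}} syntax mirrors Semantic Kernel templates; this renderer is a hypothetical stand-in.
    public const string PirateSummary =
        "Summarize this text in the voice of a pirate:\n{{$input}}";

    // Replaces every {{$name}} placeholder with the matching argument, failing loudly if one is missing.
    public static string Render(string template, IReadOnlyDictionary<string, string> args) =>
        Regex.Replace(template, @"\{\{\$(\w+)\}\}", m =>
            args.TryGetValue(m.Groups[1].Value, out var value)
                ? value
                : throw new KeyNotFoundException($"Missing template argument '{m.Groups[1].Value}'"));

    // A native function is ordinary deterministic C# code the model can ask to run.
    // The price table is hypothetical sample data.
    private static readonly Dictionary<string, decimal> Prices = new()
    {
        ["widget"] = 9.99m,
        ["gadget"] = 24.50m,
    };

    public static decimal GetPrice(string sku) =>
        Prices.TryGetValue(sku.ToLowerInvariant(), out var price)
            ? price
            : throw new ArgumentException($"Unknown SKU '{sku}'", nameof(sku));
}
```

The rendered prompt would be sent to the LLM, while `GetPrice` runs locally and returns an exact answer the model could never reliably guess.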

The Kernel, Services, and Context in C#

The Kernel object is the central orchestrator.

- It is configured with various Services (the LLM providers, like OpenAI or Gemini).

- It loads the Plugins (native and semantic functions).

- When a user request comes in, it tracks a context containing the current state, memory, and any data retrieved so far.

- The Kernel then uses the LLM to decide which functions to call, and in what order, to fulfill the user’s intent.
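The orchestration flow can be sketched with a toy kernel. `MiniKernel` is hypothetical and far simpler than Semantic Kernel’s `Kernel` class: the plan is passed in as a list of function names rather than produced by an LLM, and the context is a plain dictionary. It only shows the shape of the flow: registered functions, a shared context, and sequential execution.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, heavily simplified kernel: a registry of named functions plus
// a shared context. In Semantic Kernel the model chooses which functions to call;
// here the plan is passed in explicitly so the flow is easy to follow.
public sealed class MiniKernel
{
    private readonly Dictionary<string, Func<Dictionary<string, string>, string>> _functions =
        new(StringComparer.OrdinalIgnoreCase);

    public MiniKernel AddFunction(string name, Func<Dictionary<string, string>, string> fn)
    {
        _functions[name] = fn;
        return this;
    }

    // Executes each planned step in order. Every step reads the shared context
    // and writes its result back under "input" so the next step can use it.
    public Dictionary<string, string> Run(IEnumerable<string> plan, string userRequest)
    {
        var context = new Dictionary<string, string> { ["input"] = userRequest };
        foreach (var step in plan)
        {
            if (!_functions.TryGetValue(step, out var fn))
                throw new InvalidOperationException($"Plan refers to unknown function '{step}'");
            context["input"] = fn(context);
            context[$"result:{step}"] = context["input"]; // Keep each step's output for inspection.
        }
        return context;
    }
}
```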

This agent paradigm is central: large language models (LLMs) are treated as agents, orchestrated for tasks, and enhanced with tools, memories, and workflows. This is the foundation for building advanced, conversational AI solutions.

Beyond LLMs: How to Use ML.NET and ONNX for Local AI Models

While generative AI is exciting, many enterprise tasks still rely on more traditional machine learning. The .NET stack ensures you don’t have to switch languages to handle these hybrid workloads.

Leveraging ML.NET and ONNX for Traditional and Hybrid ML Tasks

ML.NET allows you to train models directly in C# or consume pre-trained models.

This is well suited to scenarios where you need fast, local inference for tasks like:

- Anomaly detection in real-time streaming data.

- Predictive maintenance based on sensor readings.

- Recommendation engines embedded directly in an application’s backend.

When you integrate AI and ML into .NET applications, you can create hybrid solutions in which an LLM agent uses an ML.NET model for local classification before generating a human-readable response.

Deploying Local Models for Edge Computing and Efficiency

For applications that need to run offline or require ultra-low latency, .NET’s ability to deploy models locally via ONNX Runtime is a major advantage. This is critical for edge computing, where devices (like factory robots or mobile devices) need to make real-time decisions without constantly calling a cloud service.

Model Format Interoperability with the .NET runtime

The ONNX standard is the bridge that ensures model format interoperability. By converting models trained in major frameworks (PyTorch, TensorFlow) to the ONNX format, you can load and run them efficiently within the .NET runtime, getting high-speed inference without the overhead of framework-specific dependencies.

How to Manage Data for AI Workloads in .NET

AI applications, especially generative agents, are only as smart as the data they can access. Managing this data is often the most complex part of building AI apps with .NET.

Vector Databases and Embeddings

A key component of modern AI applications is the vector database. Retrieval works with embeddings: numerical vectors, produced by an embedding model, that capture the meaning of text. To give your AI knowledge beyond its training data, you store your private or domain-specific documents as embeddings in a vector database.

In the .NET ecosystem, vector stores and search services such as Qdrant and Azure AI Search are critical for contextual retrieval and embedding storage in real-world AI apps. Libraries make it straightforward to ingest documents, create embeddings, and store them efficiently.
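To show what contextual retrieval means mechanically, here is a brute-force, in-memory vector store that ranks documents by cosine similarity. `InMemoryVectorStore` is a hypothetical class; production stores such as Qdrant or Azure AI Search use approximate nearest-neighbor indexes, and the vectors would come from an embedding model rather than being typed by hand.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory vector store. Real systems use approximate
// nearest-neighbor indexes; this version scores every stored vector.
public sealed class InMemoryVectorStore
{
    private readonly List<(string Id, string Text, float[] Vector)> _items = new();

    // Inserts a document, replacing any existing entry with the same id.
    public void Upsert(string id, string text, float[] vector)
    {
        _items.RemoveAll(item => item.Id == id);
        _items.Add((id, text, vector));
    }

    // Returns the `top` most similar documents, best match first.
    public IReadOnlyList<(string Id, string Text, double Score)> Search(float[] query, int top)
    {
        return _items
            .Select(item => (item.Id, item.Text, Score: CosineSimilarity(query, item.Vector)))
            .OrderByDescending(hit => hit.Score)
            .Take(top)
            .ToList();
    }

    // Cosine similarity: dot(a, b) / (|a| * |b|), defined as 0 for zero vectors.
    public static double CosineSimilarity(float[] a, float[] b)
    {
        if (a.Length != b.Length)
            throw new ArgumentException("Vectors must have the same dimension.");
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.Length; i++)
        {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return normA == 0 || normB == 0 ? 0 : dot / (Math.Sqrt(normA) * Math.Sqrt(normB));
    }
}
```

With toy three-dimensional vectors, a query close to a "refunds" document ranks it above an unrelated "shipping" document, which is exactly the behavior a real embedding-based search relies on at much higher dimensions.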

RAG (Retrieval Augmented Generation) Implementation

RAG is the technique that makes AI practical for businesses. It works like this:

- The user asks a question.

- The system uses the question to perform a semantic search against the vector database.

- Relevant documents are retrieved.

- The original question and the retrieved documents are packaged into a prompt and sent to the LLM.

- The LLM is instructed to generate an answer based only on the provided documents.

In .NET, this RAG flow is often orchestrated by Semantic Kernel, which handles the retrieval (via Native Functions) and the generation (via Semantic Functions).
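The fourth step, packaging the question and retrieved documents into a prompt, is ordinary string building. The sketch below shows one possible approach; `RagPromptBuilder` and the exact instruction wording are hypothetical, and the passages would come from the semantic search in the second step.

```csharp
using System.Collections.Generic;
using System.Text;

public static class RagPromptBuilder
{
    // Packages the user's question and the retrieved passages into one grounded prompt.
    // The instruction wording and the [n] source-numbering format are hypothetical choices.
    public static string Build(string question, IReadOnlyList<string> retrievedPassages)
    {
        var sb = new StringBuilder();
        sb.AppendLine("Answer the question using ONLY the sources below.");
        sb.AppendLine("If the sources do not contain the answer, say you don't know.");
        sb.AppendLine();
        for (int i = 0; i < retrievedPassages.Count; i++)
        {
            // Numbered sources let the model (and your UI) cite where an answer came from.
            sb.AppendLine($"[{i + 1}] {retrievedPassages[i]}");
        }
        sb.AppendLine();
        sb.Append("Question: ").Append(question);
        return sb.ToString();
    }
}
```

Telling the model what to do when the sources are silent is a small design choice that reduces confident answers unsupported by the retrieved documents.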


Document Processing and Chunking Strategies

Before retrieval, documents must be processed. Large documents need to be broken down into smaller, meaningful segments, a process called chunking.

Effective .NET data pipelines for AI involve:

- Loading various document types (PDFs, HTML, DOCX).

- Cleaning the text and removing unnecessary noise.

- Applying smart chunking strategies (e.g., ensuring sentences are not broken mid-way).
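A minimal sentence-aware chunker, covering the cleaning and chunking steps above, might look like this. `SentenceChunker` is hypothetical, the character limit stands in for a token limit, and the regular expression is a deliberately simple heuristic; real pipelines often chunk by tokens and add overlap between neighboring chunks.

```csharp
using System;
using System.Collections.Generic;
using System.Text;
using System.Text.RegularExpressions;

public static class SentenceChunker
{
    // Splits text into chunks of at most maxChars characters without breaking
    // sentences. A single sentence longer than maxChars becomes its own chunk.
    // The sentence-boundary regex is a simple heuristic (it will also split after
    // abbreviations like "e.g."), not a full sentence segmenter.
    public static List<string> Chunk(string text, int maxChars)
    {
        if (maxChars <= 0) throw new ArgumentOutOfRangeException(nameof(maxChars));

        // Light cleanup: collapse runs of whitespace (including newlines) into single spaces.
        var cleaned = Regex.Replace(text, @"\s+", " ").Trim();
        var sentences = Regex.Split(cleaned, @"(?<=[.!?])\s+");

        var chunks = new List<string>();
        var current = new StringBuilder();
        foreach (var sentence in sentences)
        {
            if (sentence.Length == 0) continue;
            // Start a new chunk if adding this sentence (plus a space) would overflow.
            if (current.Length > 0 && current.Length + 1 + sentence.Length > maxChars)
            {
                chunks.Add(current.ToString());
                current.Clear();
            }
            if (current.Length > 0) current.Append(' ');
            current.Append(sentence);
        }
        if (current.Length > 0) chunks.Add(current.ToString());
        return chunks;
    }
}
```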

Memory Management and Context Windows

An LLM’s context window acts as its short-term memory: it holds everything the model can see in a single request, while long-term memory lives outside the model (for example, in a vector store). Context windows are limited, so you must carefully manage what information is passed to the LLM with each turn of the conversation. Semantic Kernel helps by managing both short-term and long-term memory.
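One common way to stay inside a context window is to keep the system prompt and drop the oldest turns first. The sketch below assumes the first message is the system prompt and estimates tokens with a rough four-characters-per-token heuristic; `ChatTurn` and `ContextWindow` are hypothetical, and a real implementation should count tokens with the model’s own tokenizer.

```csharp
using System;
using System.Collections.Generic;

public sealed record ChatTurn(string Role, string Content);

public static class ContextWindow
{
    // Rough heuristic: about 4 characters per token for English text (rounded up).
    // Real code should use the model's own tokenizer for exact counts.
    public static int EstimateTokens(string text) => (text.Length + 3) / 4;

    // Keeps the system message (assumed to be first) plus as many of the most
    // recent turns as fit within maxTokens; older turns are dropped first.
    public static List<ChatTurn> Trim(IReadOnlyList<ChatTurn> history, int maxTokens)
    {
        if (history.Count == 0) return new List<ChatTurn>();

        var system = history[0];
        int budget = maxTokens - EstimateTokens(system.Content);
        var kept = new List<ChatTurn>();

        // Walk backwards from the newest turn so recent context wins.
        for (int i = history.Count - 1; i >= 1; i--)
        {
            int cost = EstimateTokens(history[i].Content);
            if (cost > budget) break;
            budget -= cost;
            kept.Add(history[i]);
        }

        kept.Add(system);
        kept.Reverse(); // Restore chronological order with the system message first.
        return kept;
    }
}
```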

What Are The Best Practices for Designing .NET AI Agents?

The agent-centric paradigm is shaping the future of .NET AI development. An AI agent is an LLM with goals, tools, and the ability to plan its actions. Companies are hiring AI engineers who understand this technology to help build more advanced autonomous systems.

Agent Lifecycle: Planning, Execution, and Reflection

A well-designed .NET AI agent follows a clear lifecycle:

- Planning: The agent receives a goal, and the LLM determines the necessary steps, including which tools (native functions) to call.

- Execution: After planning, the agent executes the plan, calling external APIs, searching databases, or running ML models.

- Reflection: Once execution completes, the agent reviews the results and determines whether the goal is met or further action is needed.
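The plan, execute, reflect cycle can be expressed as a bounded loop. In this sketch, `PlanExecuteReflectAgent` is hypothetical, and the planner and goal-check delegates stand in for LLM calls; the `maxIterations` cap is a design choice that keeps a confused agent from looping forever.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical agent loop. The planner and goal-check delegates stand in for LLM
// calls; the tools dictionary stands in for native functions.
public sealed class PlanExecuteReflectAgent
{
    private readonly Func<string, IReadOnlyList<string>, IReadOnlyList<string>> _plan;
    private readonly Func<string, IReadOnlyList<string>, bool> _isGoalMet;
    private readonly IReadOnlyDictionary<string, Func<string>> _tools;

    public PlanExecuteReflectAgent(
        Func<string, IReadOnlyList<string>, IReadOnlyList<string>> plan,
        Func<string, IReadOnlyList<string>, bool> isGoalMet,
        IReadOnlyDictionary<string, Func<string>> tools)
    {
        _plan = plan;
        _isGoalMet = isGoalMet;
        _tools = tools;
    }

    public IReadOnlyList<string> Run(string goal, int maxIterations = 3)
    {
        var observations = new List<string>();
        for (int iteration = 0; iteration < maxIterations; iteration++)
        {
            // 1. Planning: decide which tools to call, given what is already known.
            var steps = _plan(goal, observations);

            // 2. Execution: run each planned tool and record its output (or an error).
            foreach (var tool in steps)
                observations.Add(_tools.TryGetValue(tool, out var run) ? run() : $"error: unknown tool '{tool}'");

            // 3. Reflection: stop when the goal is met; otherwise plan again.
            if (_isGoalMet(goal, observations)) break;
        }
        return observations;
    }
}
```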

Tool Calling and Function Execution

Semantic Kernel is a key enabler here. When the LLM decides to call a tool, it generates a structured function call response, which Semantic Kernel can then execute on the model’s behalf. Structured output matters just as much: the AI extensions can parse a model’s response directly into C# classes or JSON, which improves API usability and makes intent detection more reliable. This lets agents return data that your C# code can easily consume.
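Here is a rough sketch of what happens once a model asks for a tool: the request arrives as JSON, is deserialized into a C# type, and is routed to ordinary code that returns a typed result. The JSON shape, `ToolCall`, `WeatherReport`, and `ToolDispatcher` are hypothetical simplifications; real providers use their own wire formats, which SDKs such as Semantic Kernel parse and dispatch for you.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical, simplified shape of a model's tool-call request.
public sealed record ToolCall(string Name, Dictionary<string, JsonElement> Arguments);

// Hypothetical strongly typed result that calling code can consume directly.
public sealed record WeatherReport(string City, int TemperatureC);

public static class ToolDispatcher
{
    private static readonly JsonSerializerOptions Options = new() { PropertyNameCaseInsensitive = true };

    // Deserializes the model's JSON into a typed ToolCall (records bind through their constructor).
    public static ToolCall ParseCall(string json) =>
        JsonSerializer.Deserialize<ToolCall>(json, Options)
            ?? throw new JsonException("Tool call JSON was null.");

    // Routes the call to ordinary C# code (a native function) and returns a typed result.
    public static WeatherReport Execute(ToolCall call) => call.Name switch
    {
        "get_weather" => GetWeather(call.Arguments["city"].GetString()!),
        _ => throw new InvalidOperationException($"Unknown tool '{call.Name}'"),
    };

    // Stub data instead of a real weather API.
    private static WeatherReport GetWeather(string city) => new(city, city == "Oslo" ? 4 : 21);
}
```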

Implementing Memory and State Management

Agents need memory to maintain context over a conversation. This is implemented in .NET through:

- Volatile Memory: Held in process memory; useful for the immediate conversation.

- Persistent Memory: Usually stored in a database (like a vector store), allowing the agent to remember facts, user preferences, or past actions across sessions.
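Both kinds of memory can sit behind one interface, so an agent does not need to know where a fact is stored. `IAgentMemory`, `VolatileMemory`, and `FileMemory` are hypothetical; the JSON file simply stands in for the database or vector store a production agent would use.

```csharp
using System.Collections.Generic;
using System.IO;
using System.Text.Json;

// Hypothetical memory abstraction for an agent.
public interface IAgentMemory
{
    void Remember(string key, string fact);
    string? Recall(string key);
}

// Volatile: lives only as long as this object (typically one conversation or process).
public sealed class VolatileMemory : IAgentMemory
{
    private readonly Dictionary<string, string> _facts = new();
    public void Remember(string key, string fact) => _facts[key] = fact;
    public string? Recall(string key) => _facts.TryGetValue(key, out var fact) ? fact : null;
}

// Persistent: writes facts to a JSON file so they survive restarts.
public sealed class FileMemory : IAgentMemory
{
    private readonly string _path;
    private readonly Dictionary<string, string> _facts;

    public FileMemory(string path)
    {
        _path = path;
        // Load previously saved facts, or start empty if the file does not exist yet.
        _facts = File.Exists(path)
            ? JsonSerializer.Deserialize<Dictionary<string, string>>(File.ReadAllText(path)) ?? new Dictionary<string, string>()
            : new Dictionary<string, string>();
    }

    public void Remember(string key, string fact)
    {
        _facts[key] = fact;
        File.WriteAllText(_path, JsonSerializer.Serialize(_facts)); // Persist on every write.
    }

    public string? Recall(string key) => _facts.TryGetValue(key, out var fact) ? fact : null;
}
```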

Advanced Agent Patterns

The Microsoft ecosystem supports advanced agentic systems. Distributed, scalable agentic systems have been demonstrated using .NET, React, and Python together, supporting heterogeneous environments. This means your agents don’t have to exist in a vacuum; they can coordinate and work together across different technology stacks.

The Model Context Protocol (MCP), an open standard for connecting AI models to external tools and data sources, enables distributed service and plugin discovery and supports complex, modular, agentic AI workflows.

How to Deploy and Scale AI Applications Built with .NET

Getting an application to production requires a smooth deployment and scaling strategy. .NET’s existing enterprise deployment capabilities make this process highly efficient. Hiring .NET developers with expertise in cloud and containerization can speed up the path from prototype to production-ready AI system.

Running AI apps in Docker and Kubernetes

Modern .NET applications are inherently container-friendly. Packaging your AI application (including any local ONNX models) into a Docker container is the standard practice. This makes deployment to container orchestration platforms like Kubernetes simple, allowing you to manage scaling and high availability with proven industry tools.

Integrating with Azure AI Services

For cloud-native deployments, integrating with Azure AI Services is the path to massive scalability. Tools like the Azure Developer CLI (AZD) and Aspire orchestration streamline this, turning the deployment of a complex architecture (the .NET app, an Azure OpenAI endpoint, and a vector store) into a single step.

Monitoring Performance and Optimizing Inference Efficiency

Post-deployment monitoring is important. .NET applications can use tools like Application Insights for detailed telemetry. For AI, you need to monitor:

- Inference Latency: How quickly the model responds.

- Token Usage: To control costs.

- Model Performance: An Evaluation (Eval) toolkit helps developers score model responses on safety, completeness, groundedness, and retrieval accuracy, which is useful for testing and compliance. This ensures you maintain quality as you scale.
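Latency and token usage can be captured with a thin wrapper around each model call. `InferenceMonitor` and `InferenceSample` are hypothetical, the wrapped call is assumed to report its own token counts (many provider APIs return them with each response), and the prices passed to `EstimateCost` are whatever you supply, not real rates. In production you would send these values to Application Insights or OpenTelemetry instead of keeping them in memory.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Linq;
using System.Threading.Tasks;

// Hypothetical per-call measurement.
public sealed record InferenceSample(TimeSpan Latency, int InputTokens, int OutputTokens);

public sealed class InferenceMonitor
{
    private readonly List<InferenceSample> _samples = new();

    // Wraps any model call that reports its own token counts and records its latency.
    public async Task<string> TrackAsync(Func<Task<(string Text, int InputTokens, int OutputTokens)>> call)
    {
        var stopwatch = Stopwatch.StartNew();
        var (text, inputTokens, outputTokens) = await call();
        stopwatch.Stop();
        _samples.Add(new InferenceSample(stopwatch.Elapsed, inputTokens, outputTokens));
        return text;
    }

    public int TotalTokens => _samples.Sum(s => s.InputTokens + s.OutputTokens);

    // Cost estimate from caller-supplied per-1K-token prices (no real pricing assumed).
    public decimal EstimateCost(decimal inputPricePer1K, decimal outputPricePer1K) =>
        _samples.Sum(s => s.InputTokens / 1000m * inputPricePer1K + s.OutputTokens / 1000m * outputPricePer1K);

    public TimeSpan MaxLatency => _samples.Count == 0 ? TimeSpan.Zero : _samples.Max(s => s.Latency);
}
```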

Build AI Apps with .NET: Use Cases

| AI Use Case | Key Technologies | Example Applications |
|---|---|---|
| AI-powered Chat Assistants | Semantic Kernel, Azure AI, RAG, .NET 8 | Internal knowledge management, customer support chatbots, document/receipt processing systems |
| Predictive Analytics in Enterprise Applications | ML.NET, Azure Machine Learning, .NET APIs | Supply chain forecasting, inventory prediction, and financial trend monitoring |
| Automation and Intelligent Decision-Making Tools | Semantic Kernel, Azure Logic Apps, Azure Cognitive Services | Workflow automation, manufacturing optimization, HR decision support tools |
| Automated Code Review and Developer Assistance | Semantic Kernel, Azure OpenAI, GitHub Copilot SDK, .NET Roslyn APIs | Secure code review pipelines, C# coding assistants, and test generation tools |

What’s Next for AI Development in the .NET Ecosystem?

.NET 9 and Upcoming AI-focused Features

The .NET 9 release continues to simplify AI integration with improved performance, unified APIs, and deeper integration with orchestration tools like Aspire. The next major release, .NET 10, is scheduled for November 11, 2025; it is a long-term support release that will further enhance the platform with more advanced features.

Rise of Hybrid AI Workflows and Multi-Agent Frameworks

The future centers on hybrid systems that mix cloud LLMs with local Small Language Models (SLMs) and multi-agent frameworks. The .NET ecosystem is designed to handle these distributed, heterogeneous systems effectively.

Opportunities for Developers in the Next Wave of AI Apps

Demand is immense for developers skilled in generative AI integration services and machine learning development. Expertise in Semantic Kernel, RAG, and agent design will be critical.

Enhancing Safety, Governance, and Explainability

Focus will increase on non-functional requirements like AI governance, safe model responses, and decision explainability. New features, including the built-in Evaluation (Eval) toolkit, will help developers score model responses to meet regulatory and compliance standards for responsible AI.

Why Choose CMARIX for AI and .NET Development?

For companies seeking to rapidly adopt and scale their AI initiatives using the robust .NET framework, partnering with an experienced service provider like CMARIX offers significant advantages.

- Deep Expertise in .NET and AI: CMARIX has a proven history of delivering enterprise-grade .NET applications and is now positioned to integrate cutting-edge AI capabilities. This blend of traditional and next-generation skills ensures applications are both stable and intelligent.

- End-to-End Solution Provider: We offer AI consulting services that cover the entire lifecycle, from initial strategy and model selection to the implementation of complex RAG pipelines, Semantic Kernel agents, and scalable cloud deployment.

- Leveraging the Full Stack: By choosing CMARIX, you will get expertise in the entire .NET AI ecosystem, including Azure AI services, ML.NET, vector databases, and modern deployment practices like Kubernetes and Aspire. Businesses can build solutions that truly use the platform’s potential for performance and cross-platform compatibility.

Final Words

The time to build AI apps with .NET is now. The ecosystem is mature, the tools are familiar, and the platform provides the enterprise-grade stability and performance required for real-world solutions.

From the foundational ability to integrate models with a consistent IChatClient, to the advanced orchestration provided by Semantic Kernel, and the seamless deployment via Aspire and Docker, .NET stands as a first-class citizen in the world of AI.

FAQs on Building AI Apps with .NET

Can .NET be used for AI?

Yes. Modern .NET is a powerful, open-source, cross-platform framework for AI, with tools like ML.NET and Semantic Kernel and deep integration with services like Azure OpenAI and Google Gemini.

How to Build AI Agents in .NET?

AI agents are built in .NET primarily using the Semantic Kernel SDK, which orchestrates the LLM as a central brain, providing it with custom tools (Native Functions) to execute complex, multi-step goals and manage memory.

How to Maintain AI Models in .NET?

Maintenance involves using standard .NET deployment practices (like Docker/Kubernetes), utilizing the ONNX Runtime for model interoperability, and leveraging the Evaluation (Eval) toolkit for continuous monitoring of model performance, safety, and accuracy.

How to Deploy an ML.NET model to Azure?

ML.NET models are often deployed to Azure by being packaged within a containerized .NET application (Docker) and deployed to services like Azure App Service or Azure Kubernetes Service, often streamlined using tools like AZD (Azure Developer CLI) or Aspire.

What is an AI Agent in the Context of .NET development?

An AI Agent is an LLM that is treated as an intelligent orchestrator with goals, memory, and the ability to plan and execute tasks by calling external tools or code (Native Functions), enabling complex, multi-step autonomous workflows.